PH Hot List | 2025-12-16

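One-line summary: A tool that embeds AI deep in the reading process. Through real-time Q&A, personalized guidance, and "co-reading" with historical figures, it addresses the pain points of readers who hit comprehension barriers, feel lonely, or struggle to keep going.

Productivity

Education

Books

AI reading companion

Immersive reading

Personalized learning

Knowledge management

Digital reading tool

EdTech

Content interaction

Smart book excerpts

Multilingual reading

Book recommendations

User comment summary: Users broadly agree that it tackles the core pain point of getting stuck while reading, and find the "co-reading" concept novel and fun. The main complaints are a UI/UX that is not intuitive enough and a custom-character feature that does not exist yet. The developers responded actively, saying they will improve the interface, add multimodal features, and work with rights holders.

AI Sharp Take

Readever's ambition is not to replace reading but to rebuild the interaction paradigm of reading. It hits the weak spot of traditional summary-style AI reading tools, their lag, by moving AI intervention from "summary after the fact" to "real-time companionship", a key shift in product philosophy. Its "knowledge dinner party" concept is appealing but is also the biggest risk: is casting celebrity IP such as Musk and Jobs as "reading mentors" merely a marketing gimmick? Underneath, it is still an LLM trained on text doing role-play, so depth and distinctiveness are in doubt, and it faces potential copyright and ethical disputes.

The product's real value may lie not in "whom you read with" but in the "active reading framework" it builds. Highlight-to-ask, goal-adaptive guidance, and a memory system form a personalized reading loop, which could let it evolve from a novelty into a serious system for in-depth study. Yet the challenges are equally clear: how does it balance "immersive reading" against "frequent AI intervention" so that the tool itself does not become a new source of distraction? How does it ensure the "mentors" interpret accurately rather than offer pleasant fallacies? The current "completely free" model also casts a shadow over its sustainability. If it gets past the early novelty phase and digs into deep comprehension support in vertical domains (academic literature, professional books), it may carve out a more solid moat.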

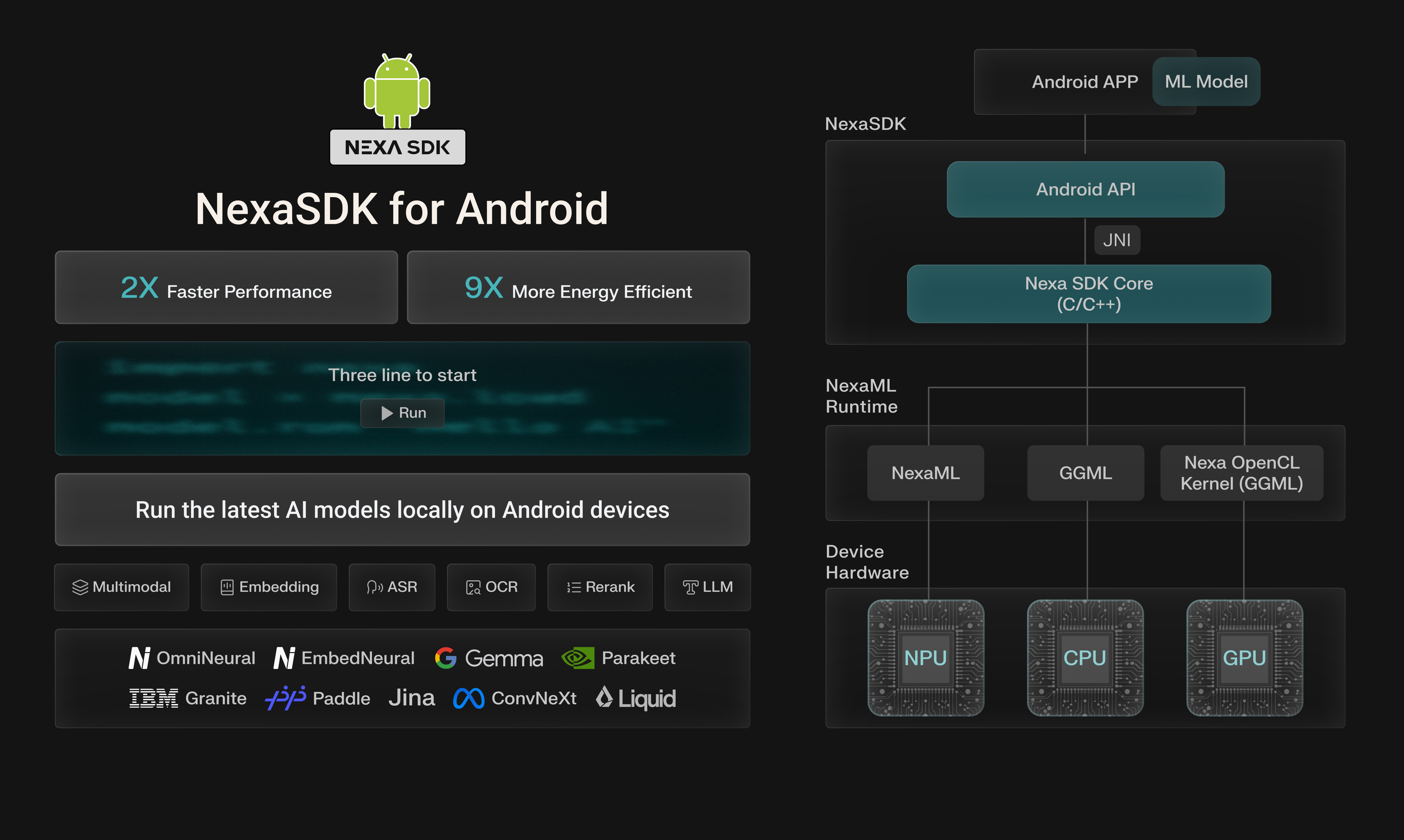

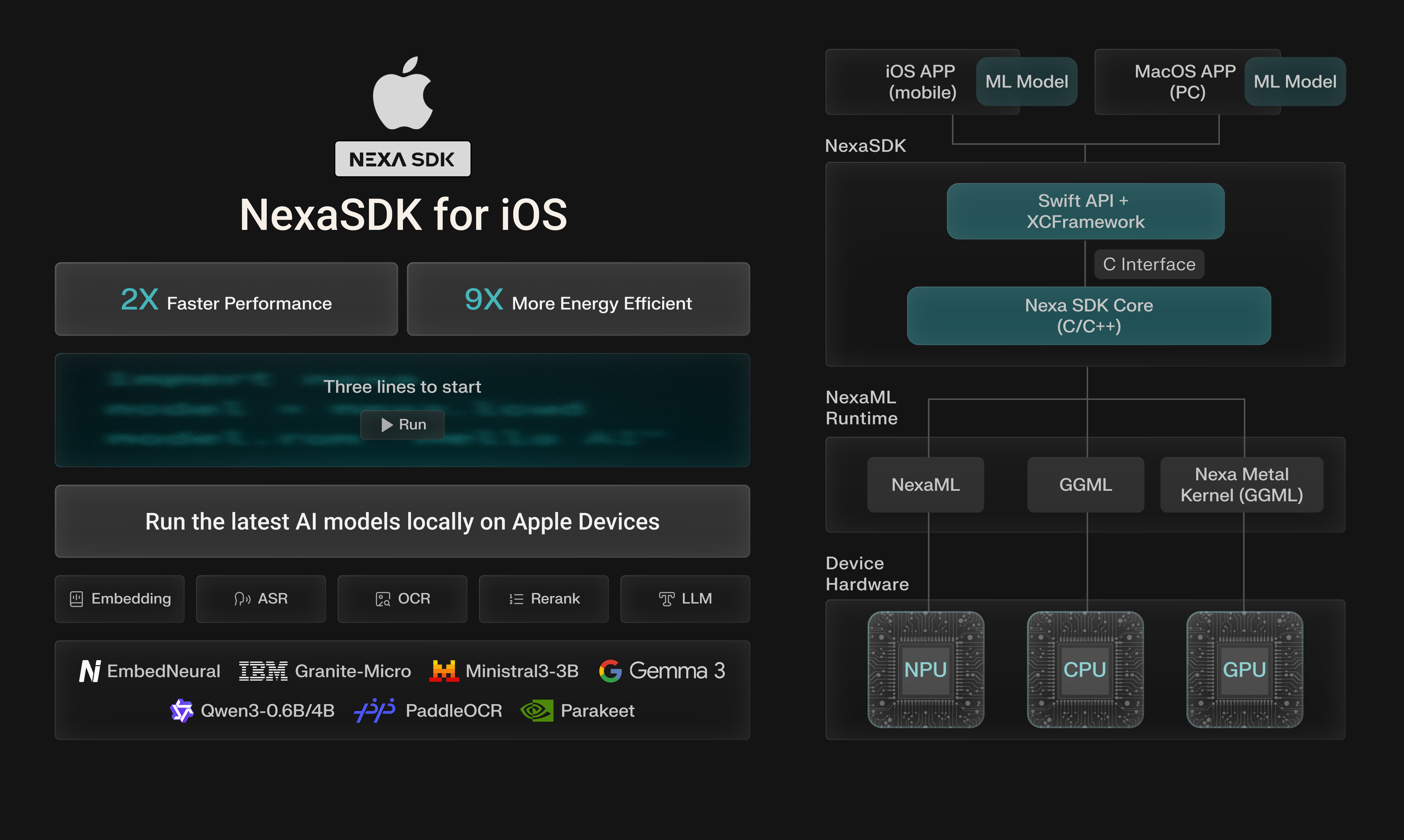

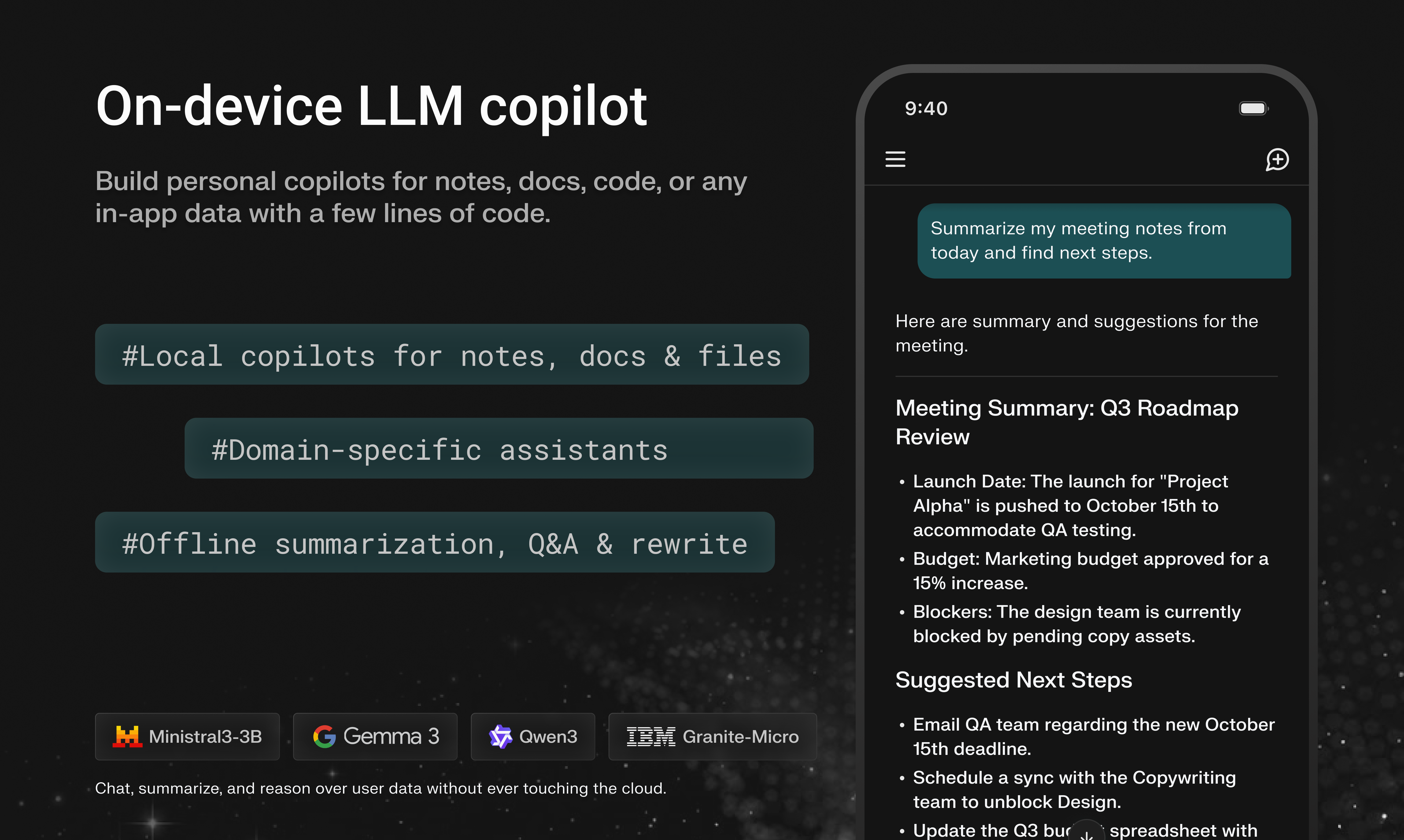

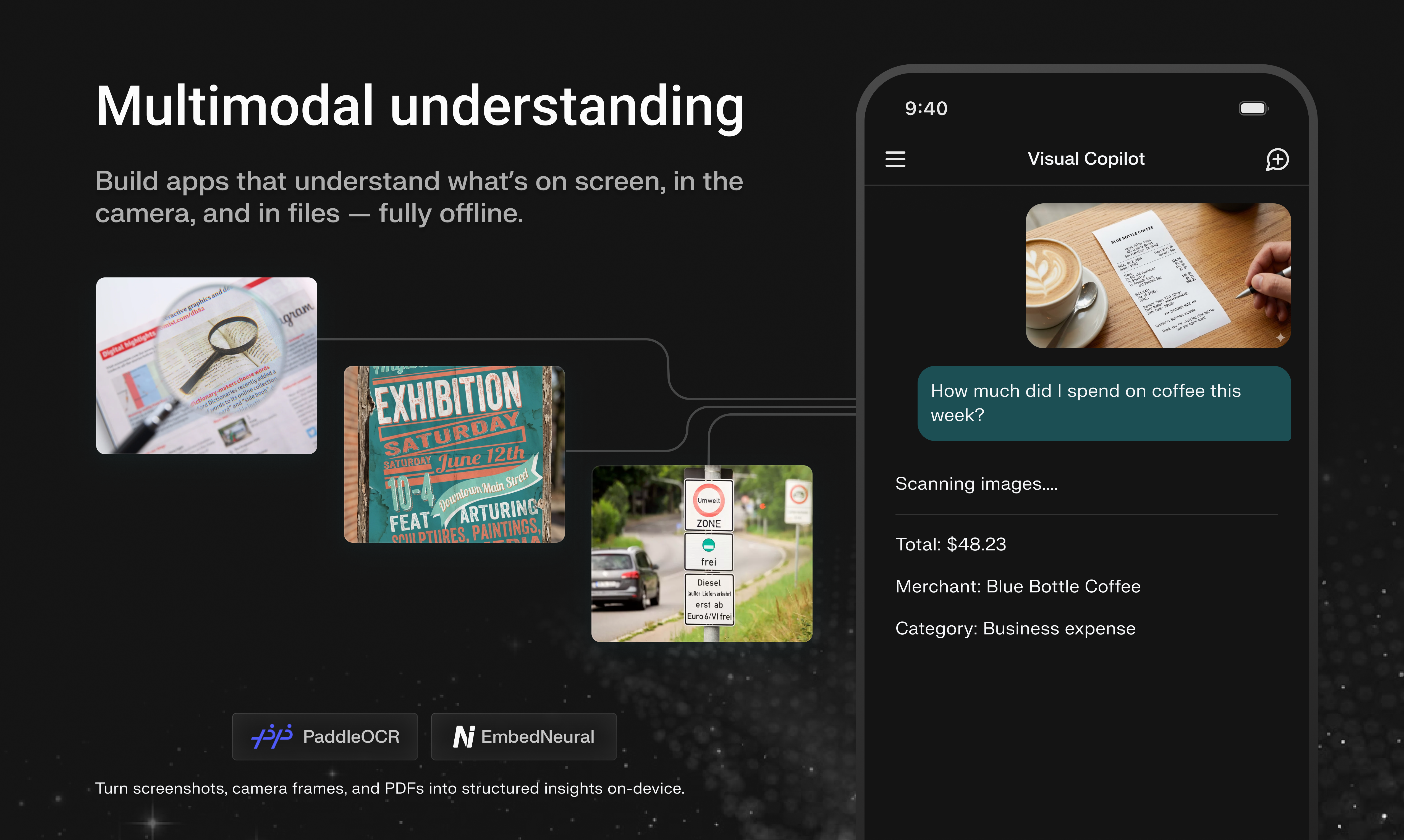

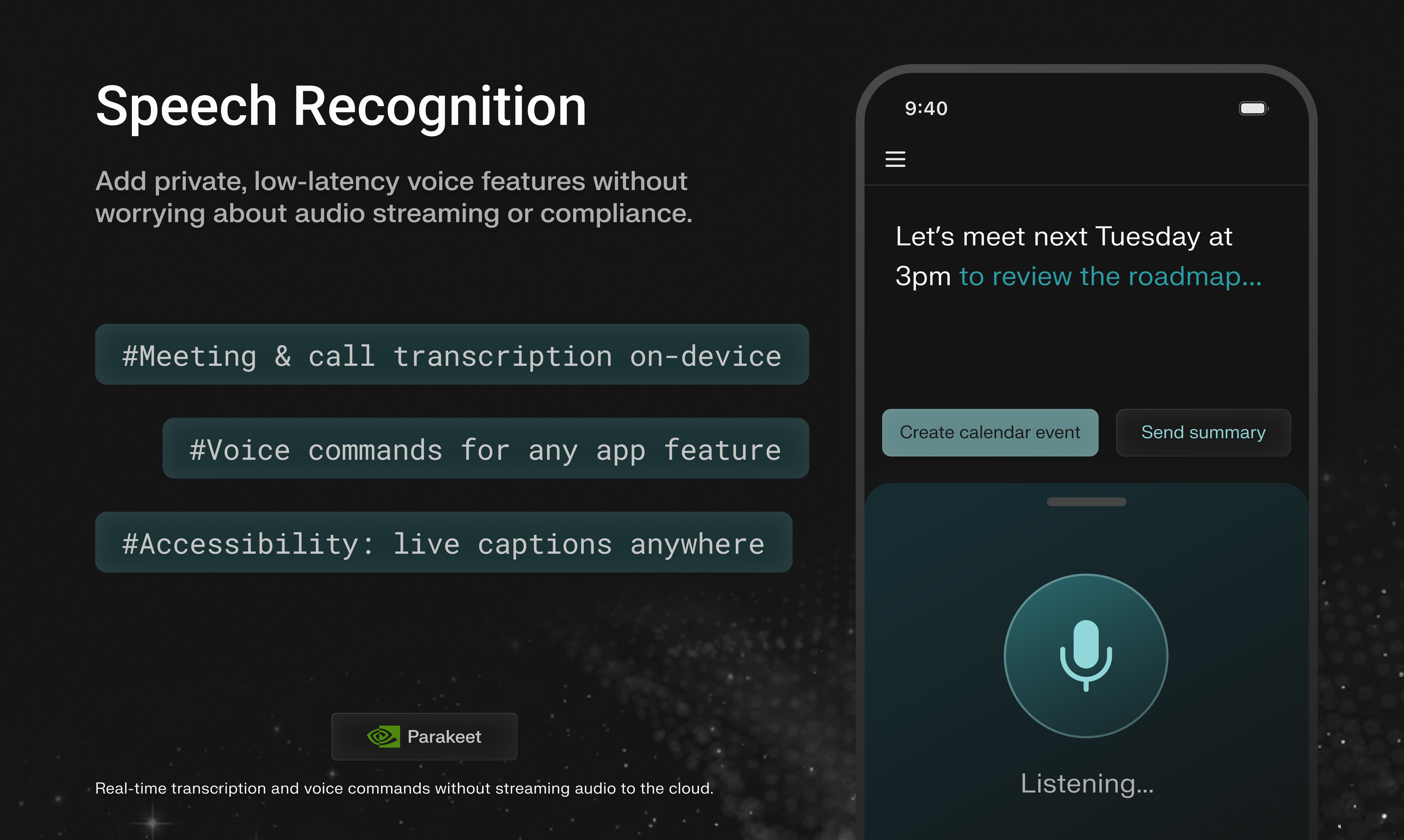

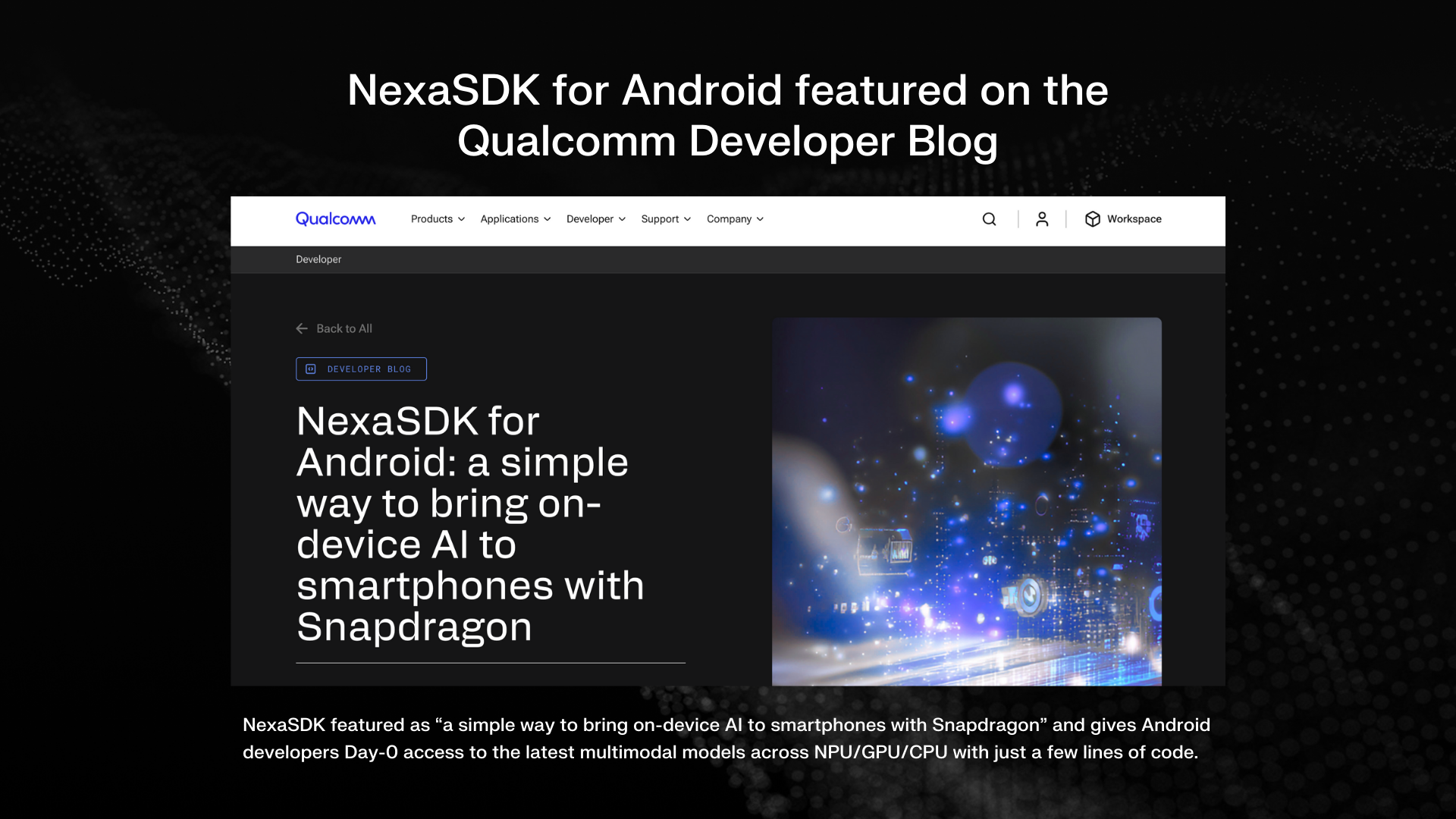

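One-line summary: NexaSDK for Mobile is an SDK that lets developers deploy multimodal AI models fully on-device on iOS and Android with just 3 lines of code. It addresses the high latency, high cost, and privacy leakage that cloud-based approaches bring to mobile AI apps, and is especially suited to scenarios that need real-time, offline processing of sensitive user data.

Developer Tools

Artificial Intelligence

SDK

Mobile AI SDK

Fully local inference

Multimodal models

On-device acceleration

Privacy protection

Offline AI

NPU optimization

Low-code integration

Energy efficiency

Cross-platform

User comment summary: Users broadly recognize the value of on-device AI and focus on its privacy, cost, and performance advantages. Questions center on technical details: how it adapts to different NPUs, the specific list of supported models, pricing, the depth of CoreML integration, and whether a hybrid cloud mode is supported. The team replied actively, clarifying the free-use policy, the range of supported models, and the roadmap.

AI Sharp Take

The launch of NexaSDK for Mobile targets the core tension in today's mobile AI ecosystem: the gap between increasingly powerful multimodal models and the difficulty of deploying them on mobile. Its claims of "3 lines of code" and "fully local execution" look like a simplified developer experience, but they are really an attempt to package complex model optimization, hardware adaptation (Apple Neural Engine and Snapdragon NPU), and cross-platform consistency into a black-box solution. Its real value is not in offering yet another AI runtime but in trying to become a "unified abstraction layer" over the heterogeneous AI compute hardware in mobile devices.

The technical Q&A in the comments shows the team has solid command of low-level details (a custom inference engine, a model conversion pipeline), which sets it apart from solutions that merely wrap open-source frameworks. Its claimed 2x speed and 9x energy-efficiency gains, if they hold up, would hit the lifeline of mobile apps: user experience and battery life. The challenges are equally clear. First, keeping up with model ecosystem updates and maintaining compatibility is a long war. Second, whether the "free for personal use, paid for enterprise" model can sustain it depends on precisely defining where "large enterprise adoption" begins and on building a high enough technical barrier. For now it looks like a sharp tool for vertical apps that demand strict privacy, real-time response, and controllable cost (healthcare, finance, personal assistants). Whether it can grow from a "niche necessity" into "mass infrastructure" depends on finding a steadier balance among developer ease of use, model freshness, and commercial sustainability.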

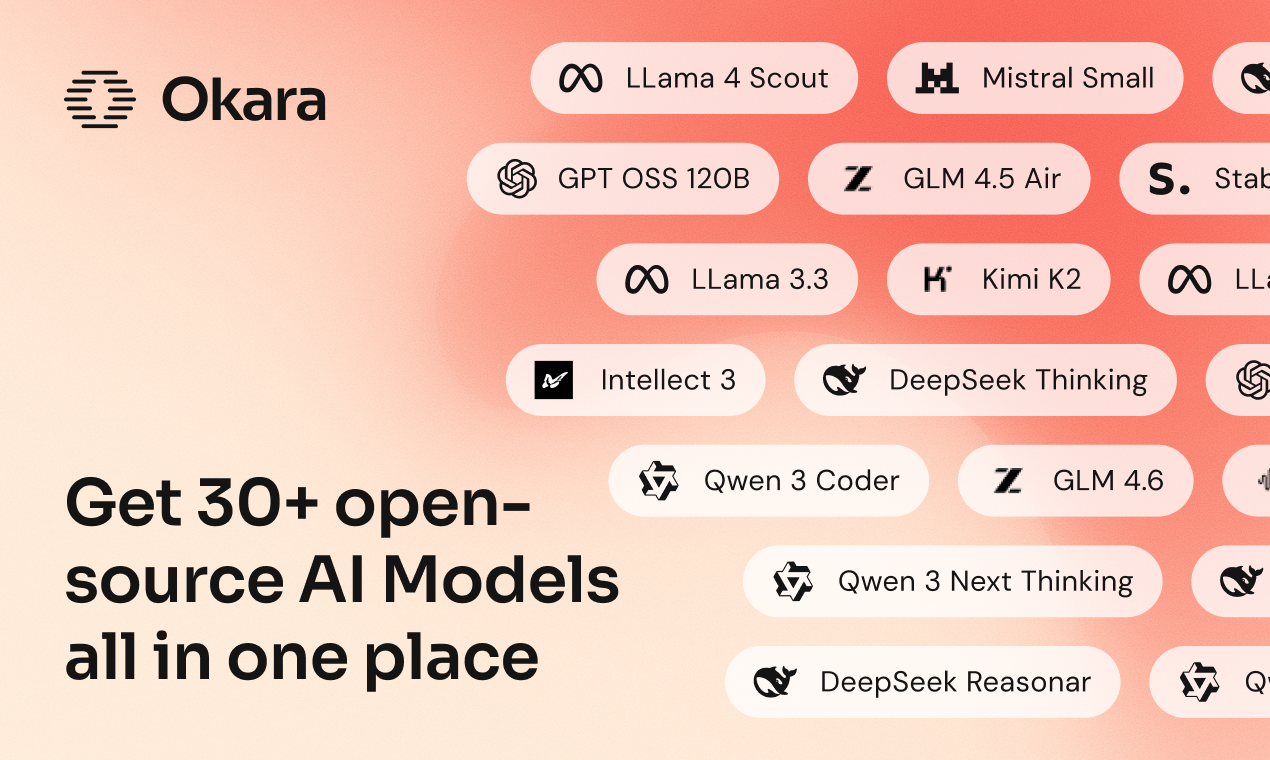

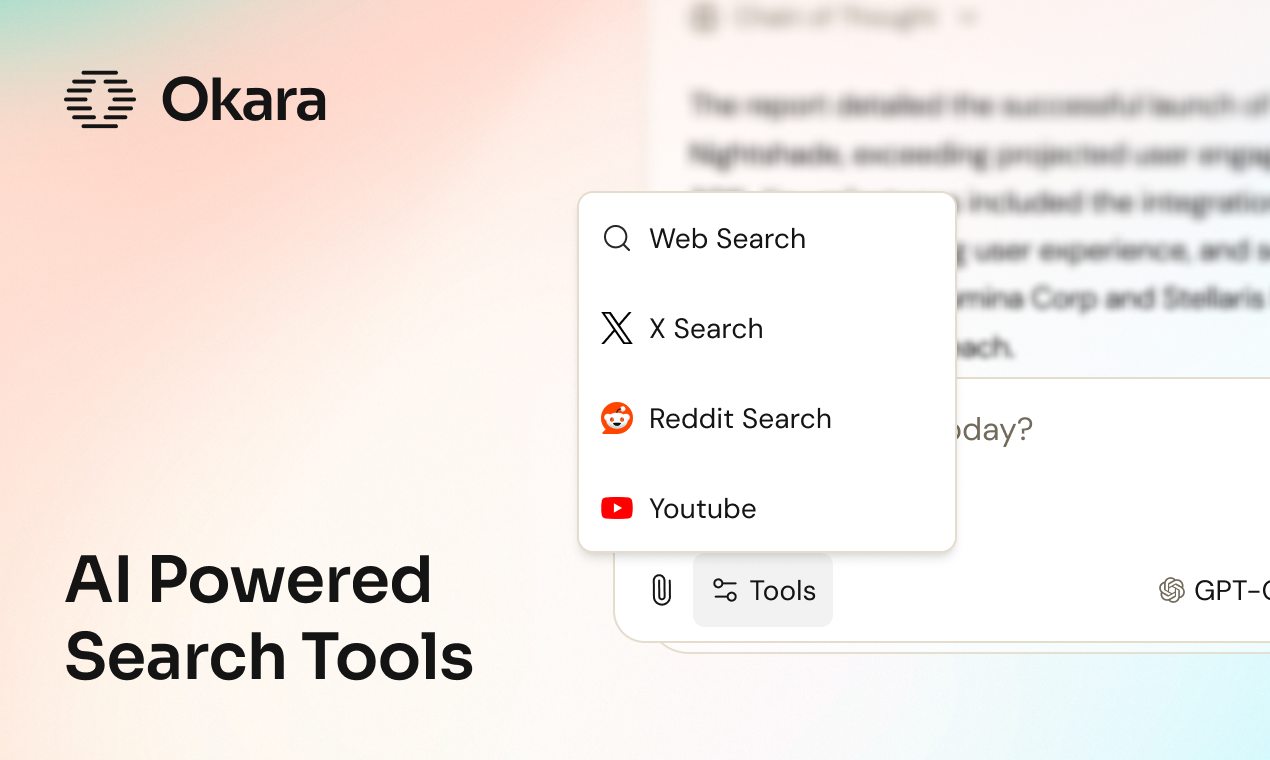

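One-line summary: Okara is a private AI chat platform that gives instant access to 30+ open-source large models, addressing the complex infrastructure, privacy concerns, and high switching costs that individuals and teams face when deploying large AI models themselves.

Productivity

Privacy

Artificial Intelligence

Open-source AI model platform

Private AI chat

Team AI collaboration

Model as a service

Privacy and security

Multi-model switching

File analysis

Image generation

Integrated search

No infrastructure management

User comment summary: Users broadly praise it for removing the infrastructure hurdles of deploying open-source models and approve of its privacy protection. Key feedback covers its differentiation from similar products (open source, encryption), agreement with the idea of teams picking models per task, and a suggestion to add search over conversation history. Some users also asked what it is for and how to use it.

AI Sharp Take

Okara targets a shrewd and growing niche: users and teams who want the most advanced open-source models, cannot or will not shoulder the operational burden, and have strict data privacy requirements. Its real value is not simply aggregating models but playing the dual role of "cloud layer for open-source AI" and "privacy gatekeeper".

The AI application ecosystem is polarized: at one end, closed-source APIs offer convenience along with risk; at the other, open-source models offer sovereignty along with an operations nightmare. Okara tries to open a path in between, and its claim of being "encrypted and never training on user data" speaks directly to the core concern of enterprise use. The challenges are just as clear. First, as a middle layer, its performance, cost, and model update speed depend heavily on the underlying cloud infrastructure and on open-source community progress, so whether it can keep offering the "best models" is doubtful. Second, "30+ models" is both a selling point and a trap that may leave ordinary users with choice paralysis; intelligently recommending or seamlessly routing to the most suitable model is the key to evolving from a "model bazaar" into an "intelligent platform". Finally, the comment that "the differentiator is open source" deserves caution: whether the platform itself is open source, and how its business model balances against any community edition, will shape technical trust and long-term growth.

In essence, Okara sells not AI capability but "controllable convenience". As AI competition grows more homogeneous, its ultimate test is whether it can build high enough barriers around privacy compliance and team collaboration and form a sustainable business model, rather than becoming another piece of middleware absorbed by a giant's feature set or crushed in a compute price war.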

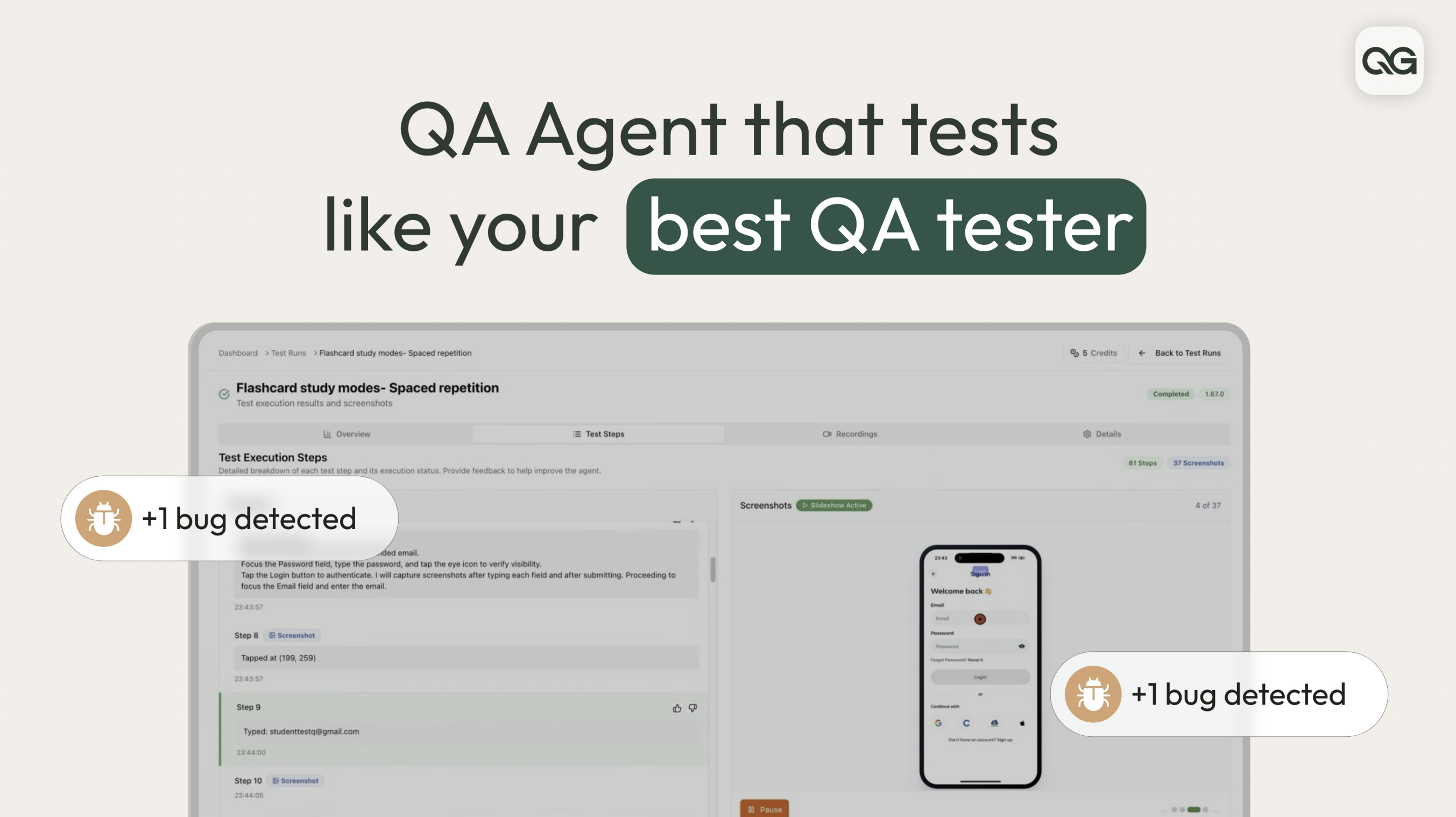

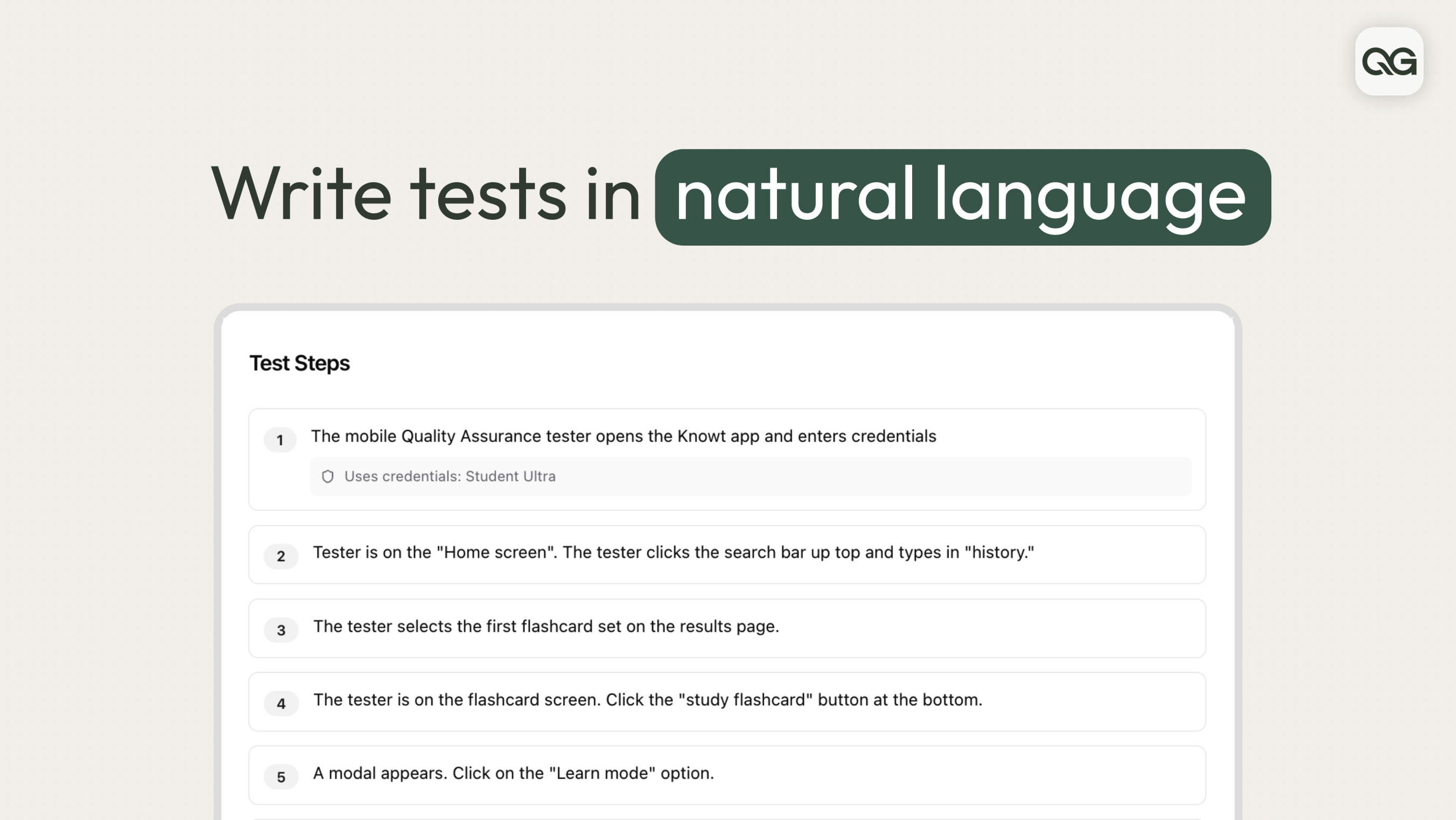

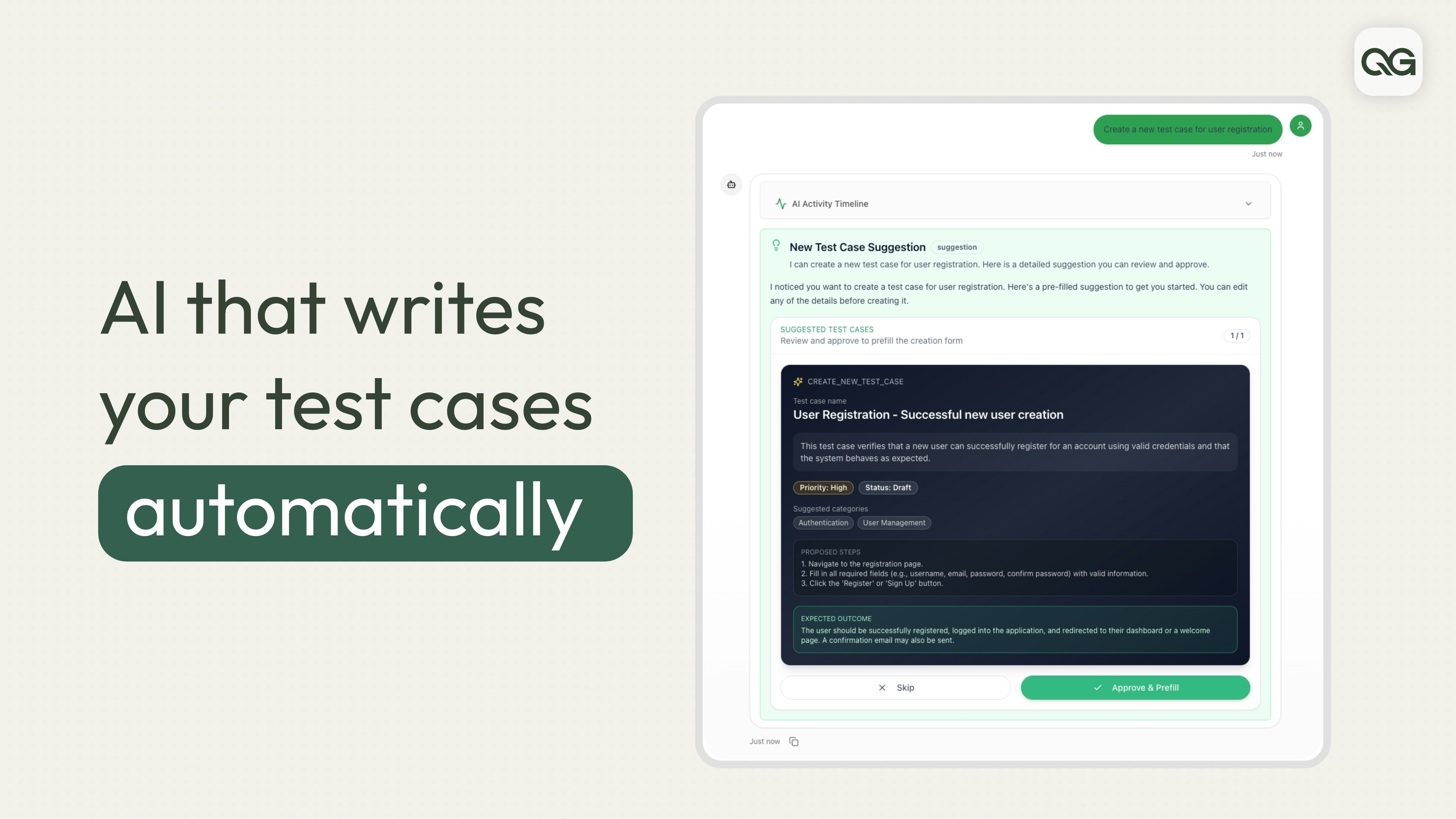

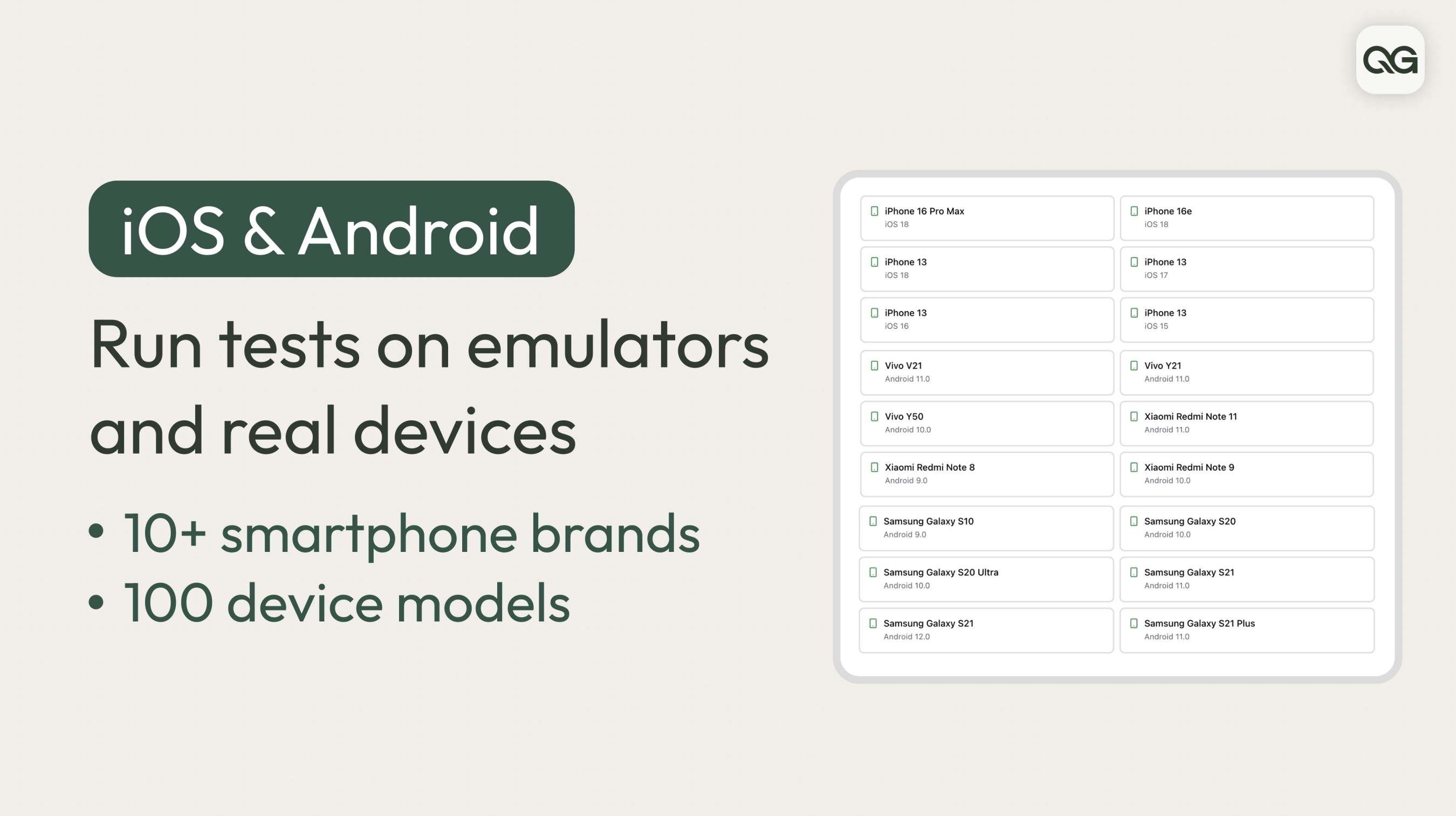

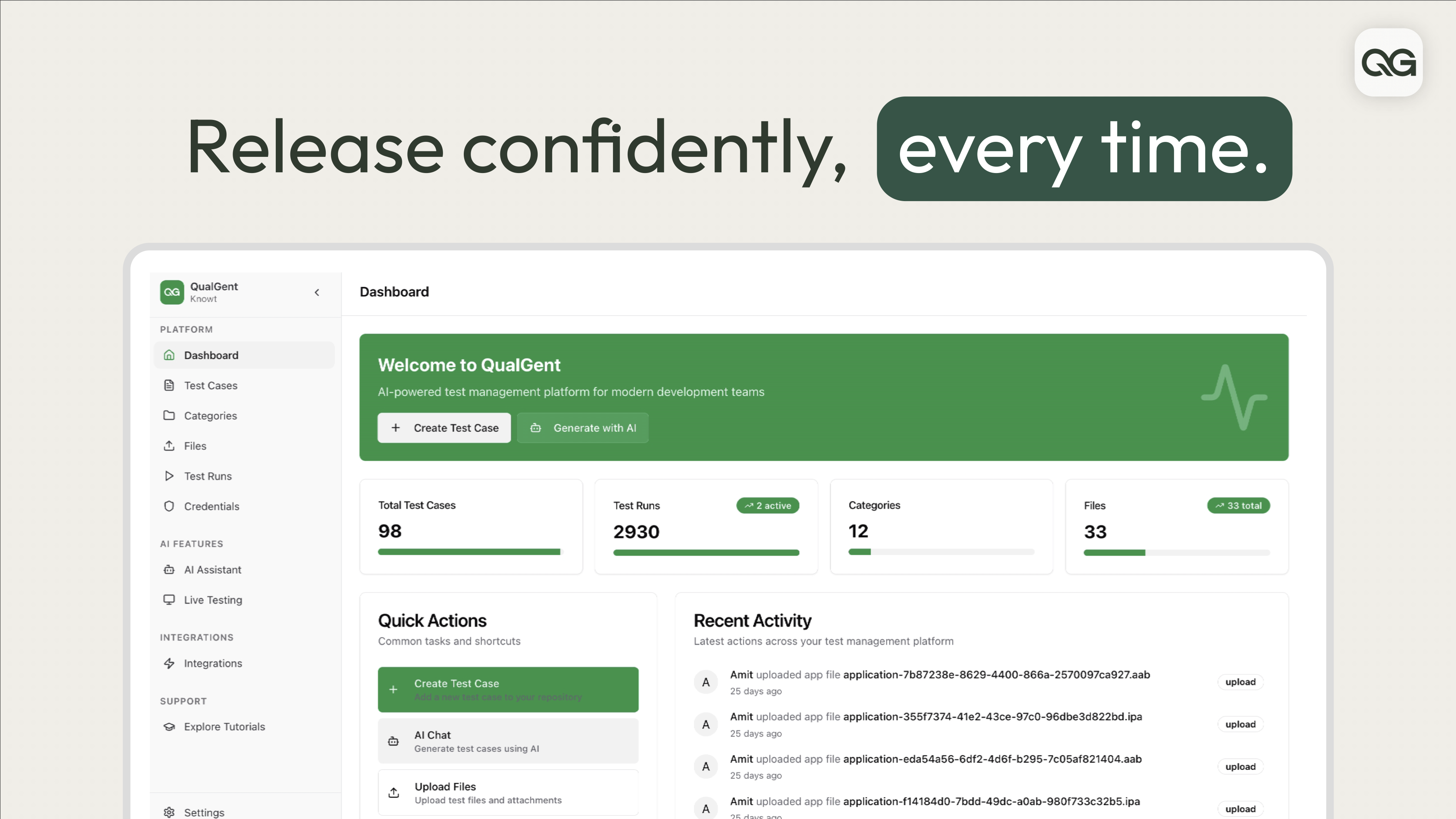

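One-line summary: QualGent is an enterprise-grade AI QA agent. Users describe test scenarios in natural language, and it automatically creates and runs self-healing tests on real iOS/Android devices or simulators, addressing the time-consuming regression testing, brittle UI tests, and shortage of dedicated QA resources that mobile teams face under rapid iteration.

Developer Tools

Artificial Intelligence

No-Code

AI testing

Automated QA

Mobile app testing

Self-healing tests

Natural-language programming

Regression testing

Enterprise tool

Real-device cloud

Continuous integration

YC-backed

User comment summary: Users broadly recognize its value in solving QA pain points, especially compressing days of regression testing into 30 minutes, natural-language tests, and self-healing. Questions center on pricing transparency, depth of integration with developer tools (such as coding agents), test report detail, and specific technical integration (staging environment configuration, authentication handling). The team replied actively and revealed planned integrations such as an API and an MCP server.

AI Sharp Take

QualGent is not a simple record-and-replay test script tool. Its self-described AI QA agent with "infrastructure-level scaling" targets one of the most stubborn bottlenecks in modern software delivery: the speed and reliability of quality verification cannot keep up with the pace of development. The product's real edge is using AI to solve two problems at once: lowering the barrier to creating and maintaining tests (natural-language descriptions), and raising the fidelity and efficiency of the test execution environment (real-device cloud, parallel runs). This goes a step beyond merely generating test scripts with AI and forms a "describe, generate, execute, self-heal" loop.

The challenges are equally clear. First, the reliability limits of "self-healing" under complex, non-standard UI changes still need to be tested at scale, and overselling it could create expectation problems. Second, the comments show users care not only about the tests themselves but about how results flow into existing development workflows (for example, filing tickets directly in Linear or Jira, or working with "coding agents" to close the fix loop). Connecting through an API and a planned MCP server is still a "glue layer" approach, and how intelligent the deep integration becomes will be the next competitive front. Finally, the usage-based pricing its business model relies on can make costs unpredictable in testing scenarios. The team opens with a free allowance, but giving users stable cost expectations is a gap it must cross to scale.

Overall, QualGent is an important attempt to move QA tooling from "automation" toward "autonomy". Its value is not in replacing all test engineers but in multiplying their capabilities and in bringing systematic quality assurance to small and mid-sized teams without dedicated QA. If its AI agent's stability and ability to generalize across scenarios hold up against complex enterprise apps, it could indeed reshape mobile testing culture. Otherwise it may be just another smart testing tool that is useful in specific scenarios but never truly manages to "scale like infra".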

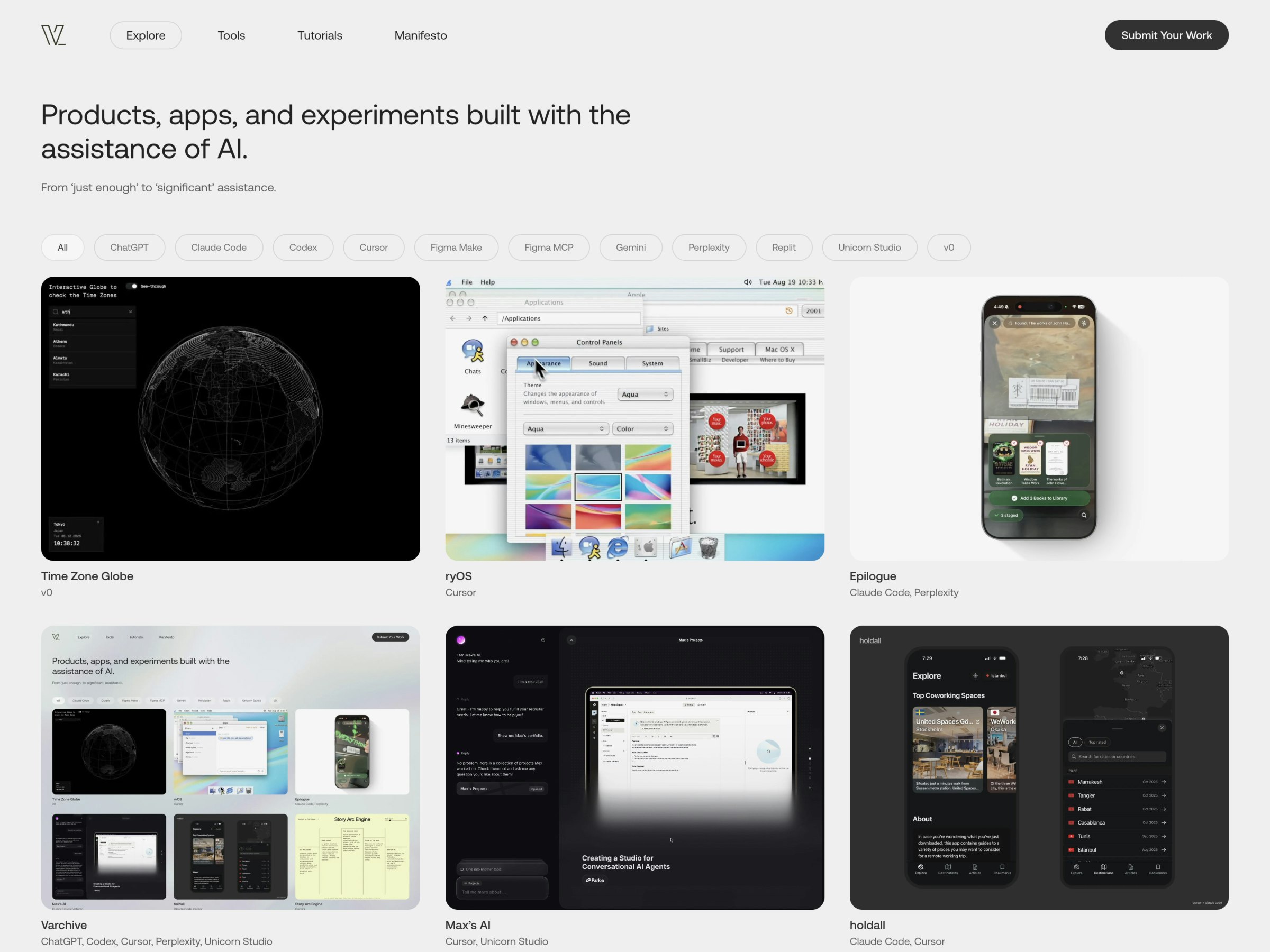

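One-line summary: Varchive is an online archive dedicated to showcasing AI-assisted development work. It gives developers and creators inspiration and practical references ranging from hobby projects to enterprise applications, addressing the lack of high-quality examples and clear practical guidance in AI development.

Artificial Intelligence

Development

Design

AI-assisted development

Project showcase

Inspiration library

Developer community

Case studies

Programming tools

AI-generated

Web app

Technical archive

Creative inspiration

User comment summary: Feedback is positive. Users see the product as a Dribbble for AI that offers valuable inspiration and lowers the barrier to development. The founder openly admits the product has AI-assisted flaws and presents them as a learning case, a transparency that won praise. One comment noted its positive bearing on the "vibe coding" debate.

AI Sharp Take

Varchive's debut is less a new product than a carefully staged "meta-showcase". Its real value is not in setting up another project hub but in trying to become the "Rosetta Stone" of the AI-assisted development era: it shows results and also owns up to process and flaws.

The word "showcase" in the tagline is telling. It deliberately avoids grand narratives like "platform" or "marketplace" and positions itself as a "display case". This lowers user expectations while quietly raising its potential as an industry benchmark. The founder says outright that hundreds of hours went into repeated "prompting and refining" with tools such as Cursor and Codex, and that flaws remain; such candor is refreshing amid rampant AI hype. In effect it is publishing a methodology: AI-assisted development is not magic that generates perfect code in one click but a collaborative process of repeated dialogue and iteration between human and AI. Every project on display is a living specimen of this new workflow.

Its deeper challenge lies here too. As an "archive", the long-term value of its content depends on rigorous project selection and the depth of its case analysis. If it stops at "showcasing", it can easily become yet another glossy AI project gallery, no different from an ordinary portfolio site. If its promised "how-tos" do not dissect prompt strategies, iteration difficulties, and the key points of human intervention, the "learning value" will be greatly diminished. And with AI tools themselves iterating quickly, how long cases built on a specific toolchain (such as Cursor) stay relevant is an open question.

Varchive's success or failure will test a core proposition: in the age of AI coding, do people need more dazzling results, or reproducible, learnable records of the real process, failed paths included? It has chosen the latter as its direction for now, which is more forward-looking than any project it hosts.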

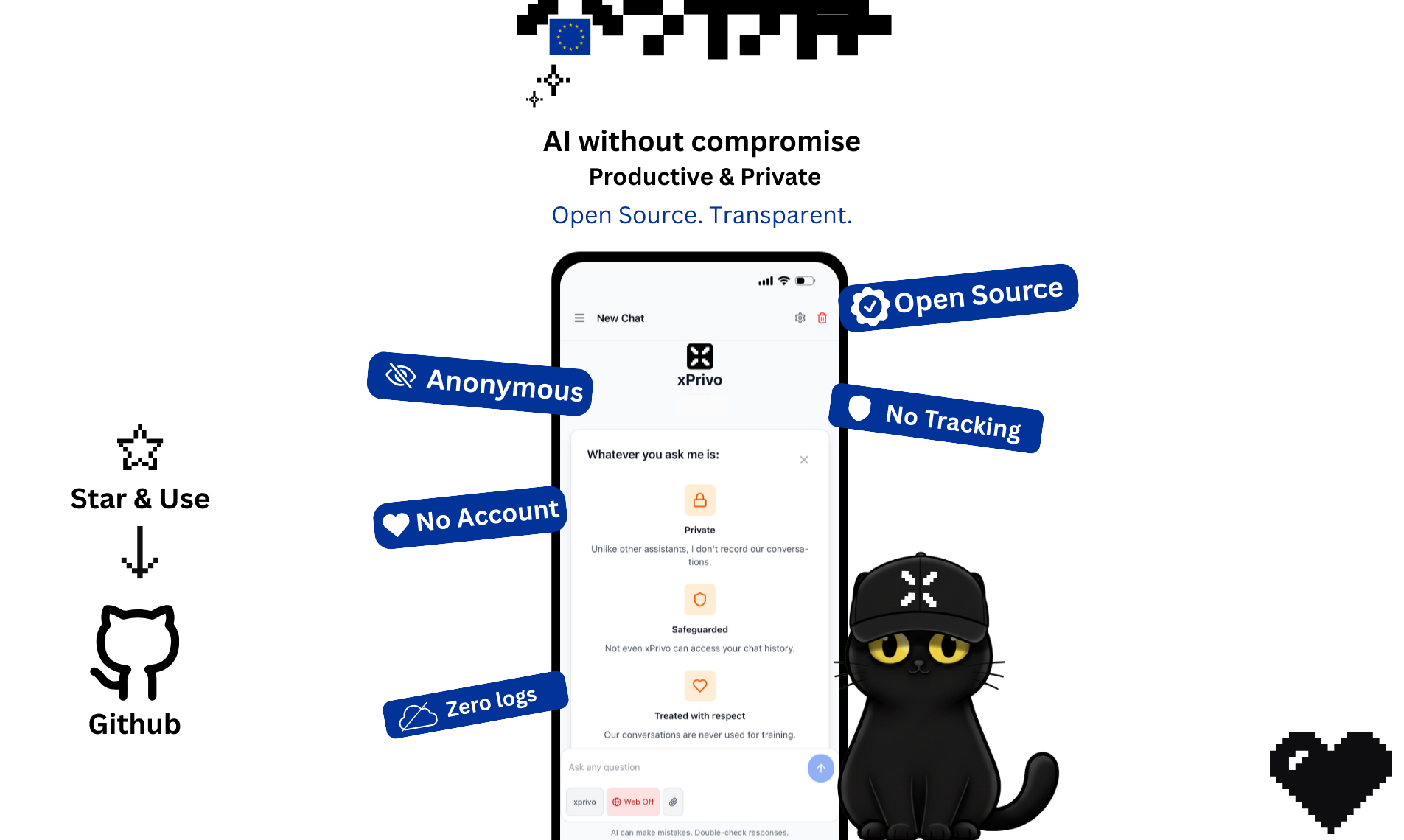

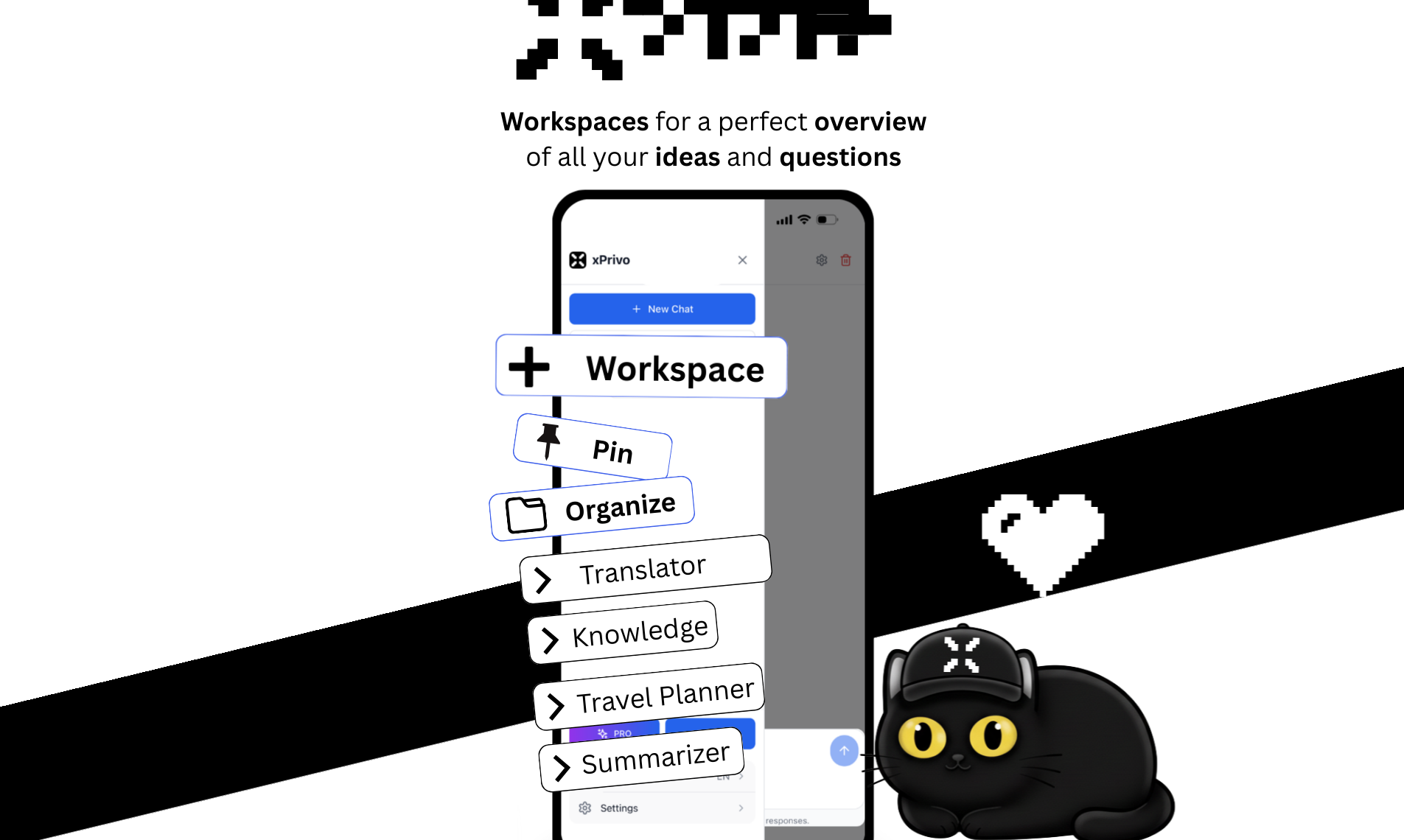

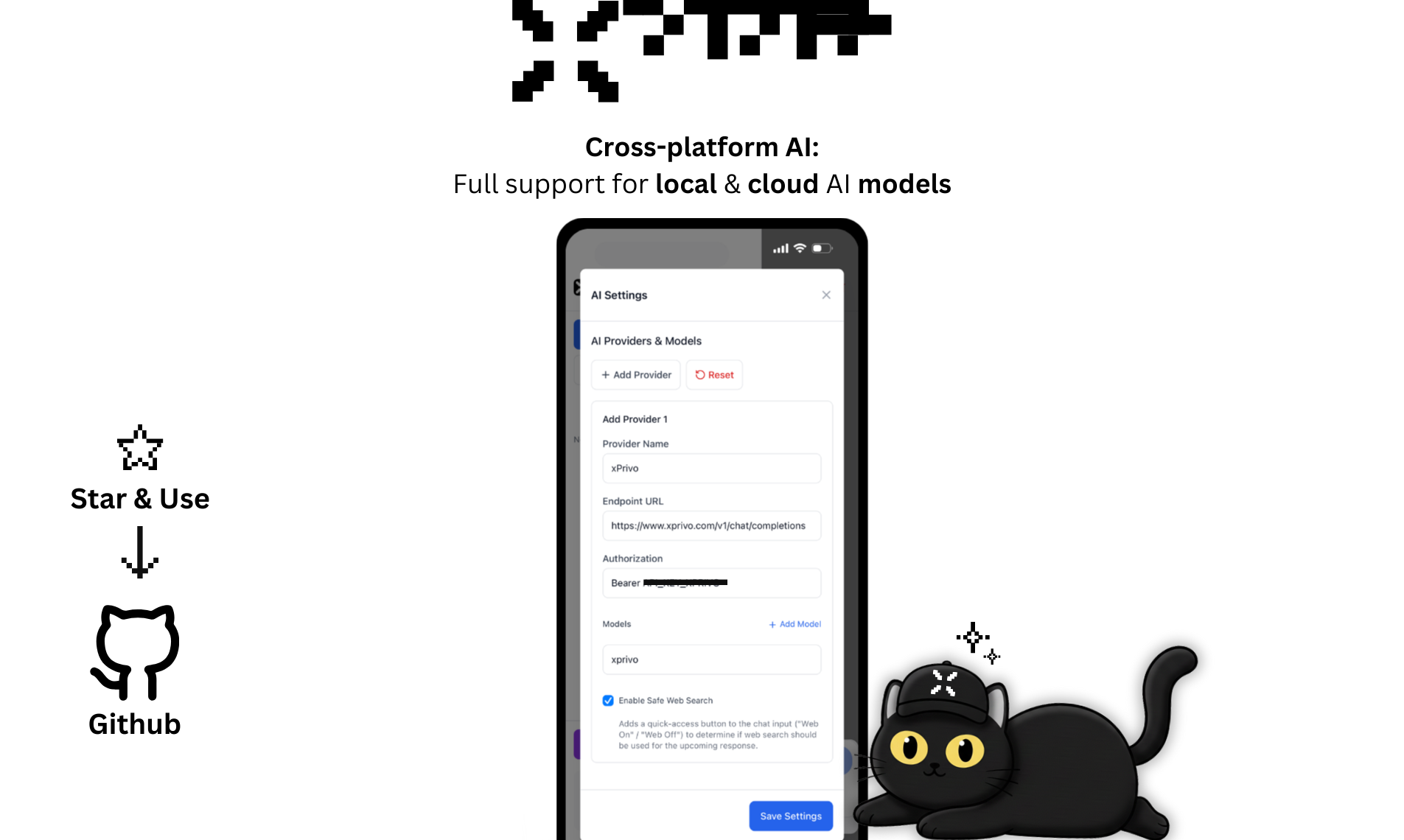

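One-line summary: An open-source, anonymous AI chat assistant that needs no account and can be deployed locally. For privacy-sensitive scenarios where users worry their conversations will be collected for training, it offers either fully local chat or anonymous web chat.

Open Source

Privacy

Artificial Intelligence

GitHub

Open-source AI

Privacy protection

Anonymous chat

Local deployment

No data logging

AI assistant

Freemium

Model aggregation

EU self-hosted

No account login

User comment summary: Users highly praise its privacy-preserving design (no account, no logs, local storage) and open-source model. Questions center on how the web version implements privacy, model performance comparisons, and compliance. Suggestions include cross-device configuration sync and model fine-tuning.

AI Sharp Take

xPrivo strikes precisely at the core weak spot of today's AI app market: data privacy anxiety. It does not try to stand out on model capability. Instead it makes a "zero trust" architecture its product philosophy: no logging, no training, no accounts, and it even promotes open source and local deployment from geek options to default commitments. Its real cunning lies in a "tiered privacy" strategy: run fully locally when the hardware allows, and fall back to its claimed "no-log" web service when compute is short. This lowers the barrier to entry and neatly sets up its free (ad-supported) / PRO business model.

Yet both its value and its risk hinge on the word "trust". As a middle layer that aggregates models (Mistral, DeepSeek, and others), it promises that web requests are also "destroyed immediately", but this is more of a one-sided pledge: faced with a closed-source backend, users can only choose to believe it. That creates a paradox. Users who care most about privacy will choose local deployment, and they are exactly the ones who will not pay for its server costs; the web users who do pay are entrusting their privacy to xPrivo's "no logging" promise, a basis of trust not fundamentally different from that of other closed-source services.

xPrivo is therefore better seen as a vivid "privacy manifesto" and an open-source engineering example, with more market significance than technical disruption. It pushes the giants to face users' privacy anxiety and shows that the market is willing to pay for privacy. Its long-term survival depends on whether transparent audits and technical verification (such as verifiable no-log proofs) can turn "trust" into verifiable "credit"; otherwise it may remain confined to a niche of strongly privacy-conscious users.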

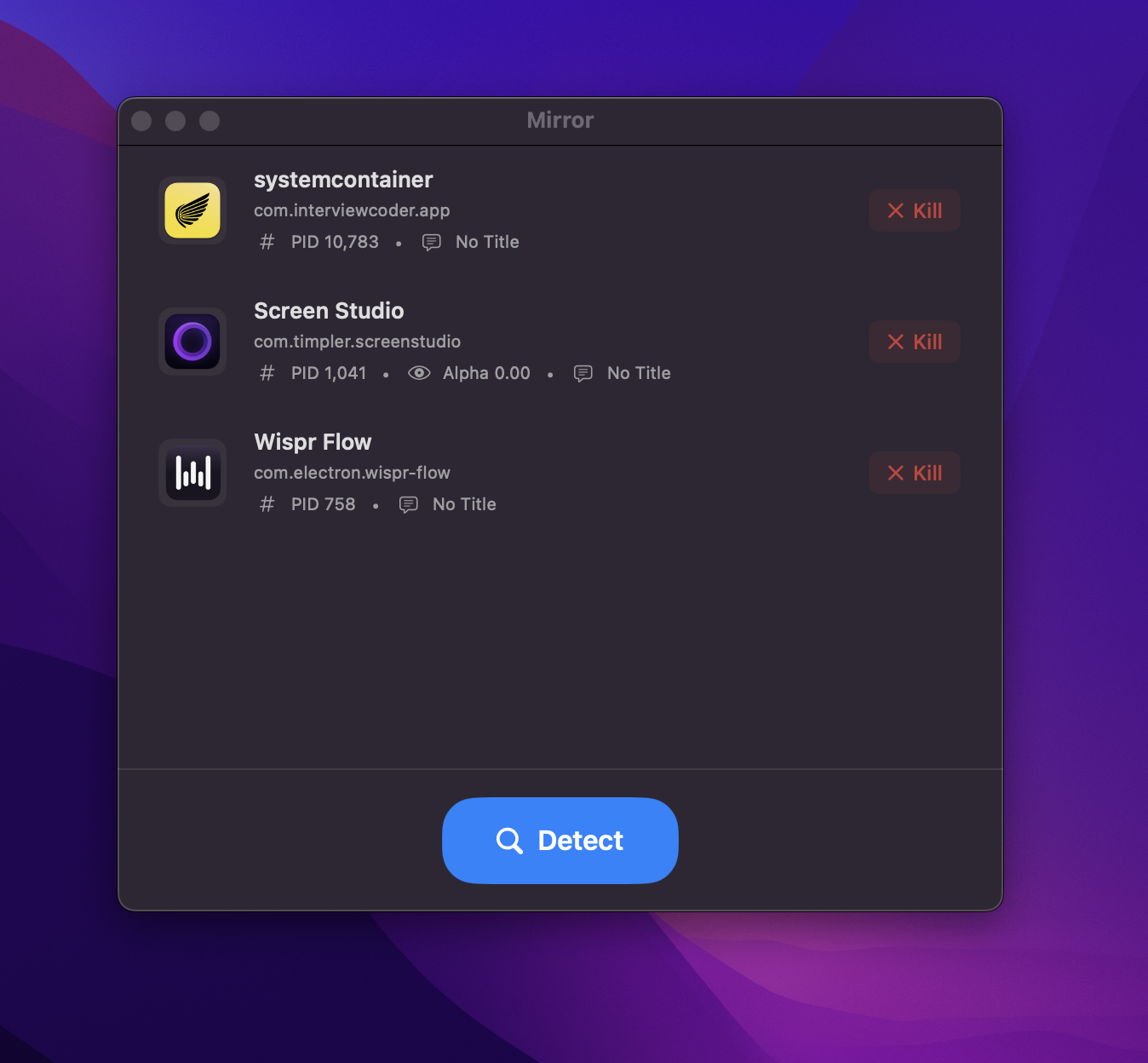

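One-line summary: Mirror is a background process detection tool built for macOS. It exposes applications that deliberately hide from Activity Monitor, helping users regain full awareness and control of what runs in the background on their Mac in privacy and security scenarios.

Open Source

Developer Tools

GitHub

Security

Security tool

macOS optimization

Background process detection

Privacy protection

System monitoring

Open-source software

Anti-hiding

User control

Runs locally

User comment summary: Feedback is positive, with users endorsing its value as a "security flashlight". Questions center on why it is not yet in the official store (the developer says this is in progress) and whether it can find and uninstall hidden apps that are already installed (the developer confirmed it can discover and terminate processes). Other users were surprised that hidden apps exist on macOS at all.

AI Sharp Take

Mirror addresses a subtle but sharp pain point: the "failure of authority" of the system's official tool, Activity Monitor. Its real value is not in catching a particular virus but in challenging a growing "legitimate stealth" phenomenon in the macOS ecosystem, where tools such as job-interview aids and AI assistants choose to vanish from Activity Monitor for reasons of "user experience" or business. Such "stealth" strips users of their right to choose and forces a decision between "convenience" and "surveillance" on their behalf.

The positioning is clear: it emphasizes transparency rather than fear, and it is open source and runs locally. This sidesteps the "scare marketing" that security software is often accused of and presents it as a neutral, technical "see-through lens". Its core competitiveness lies in reverse engineering and understanding low-level system mechanisms, making the invisible visible.

Its deeper challenges and future risks coexist. First, this is a cat-and-mouse game: stealth techniques will evolve, and Mirror must keep updating its detection signals, an ongoing burden for a solo developer. Second, there is a legal and ethical gray area: the "stealth" apps it exposes are mostly functional tools rather than malware, which may provoke countermeasures from their vendors. Finally, there is user education: most ordinary users are unaware of "background stealth", educating the market is costly, and demand may stay limited to security researchers and enthusiasts for a long time.

In essence, Mirror is a tool for "returning power" to the user. As operating systems grow more closed and app behavior more opaque, it tries to hold a small patch of ground for user sovereignty. Its success depends not only on technology but on how many users care about this "right to know" and are determined to exercise it.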

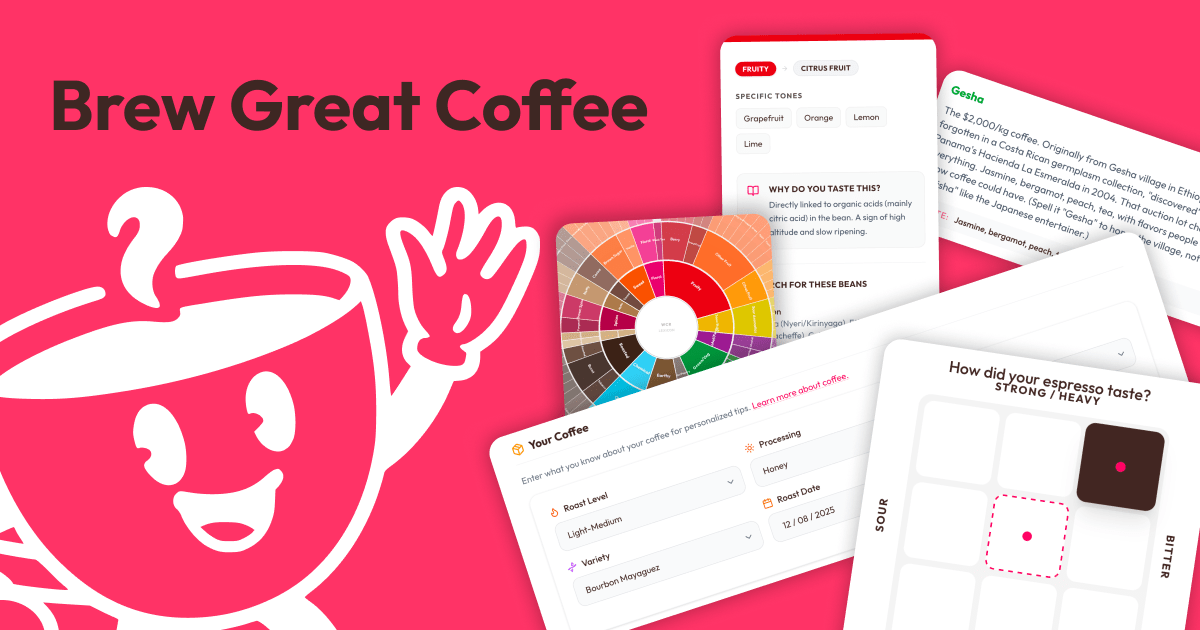

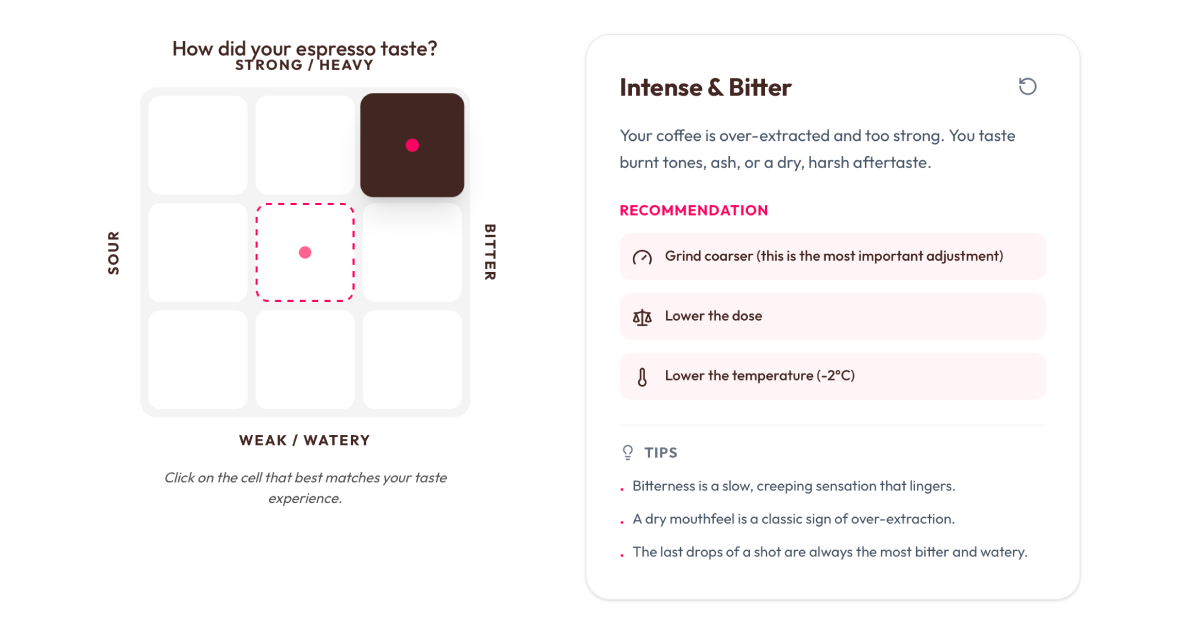

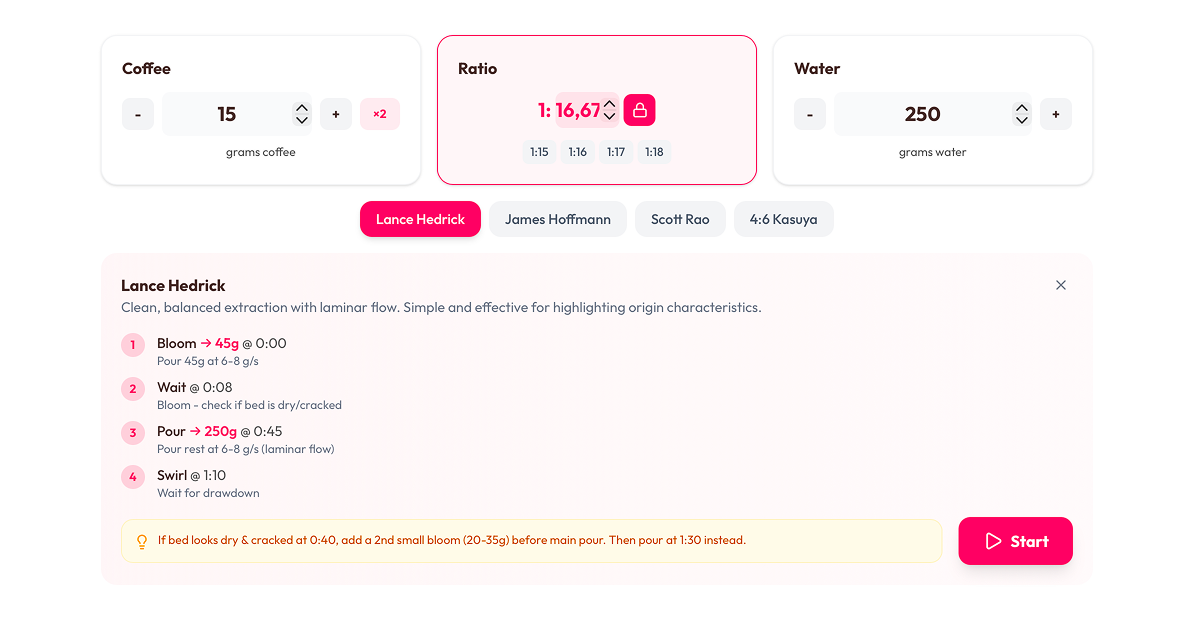

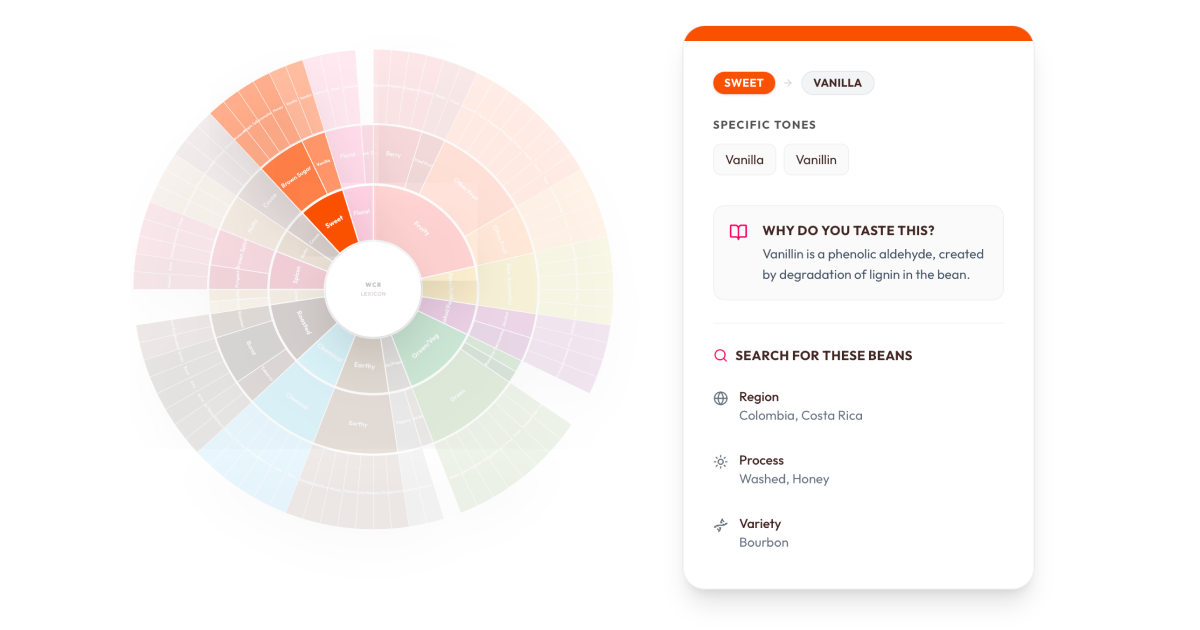

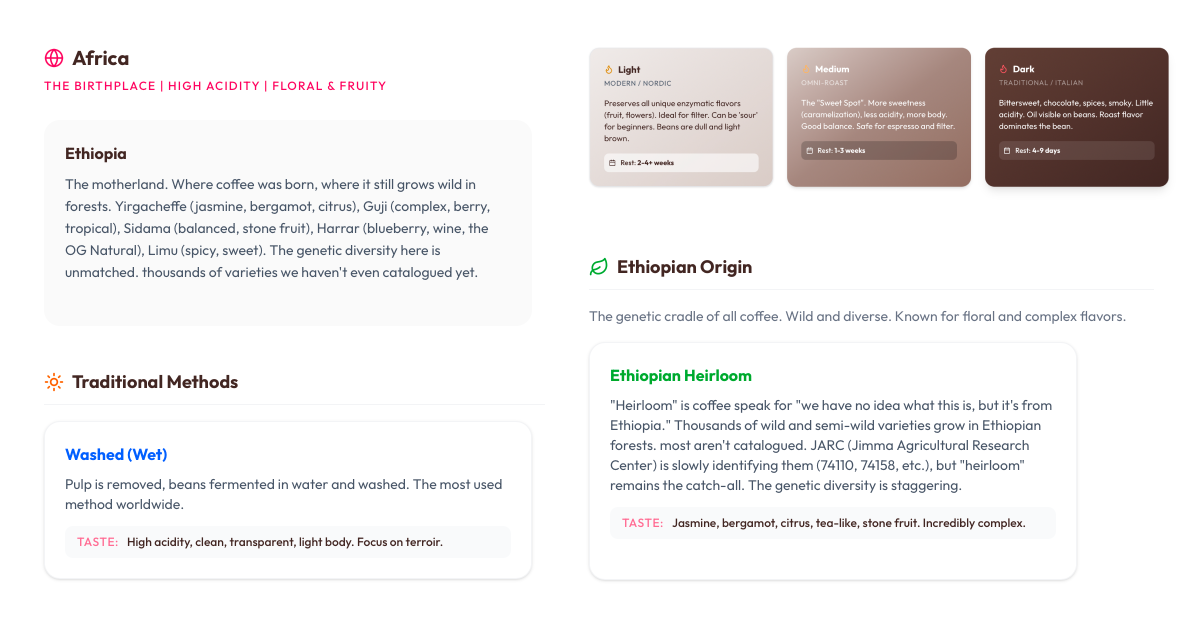

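One-line summary: A free brewing toolkit for home baristas. Through interactive tools such as the "Extraction Compass", it systematically addresses how hard it is to adjust parameters precisely when making espresso and pour-over coffee, given how many variables are involved.

Coffee

Food & Drink

Lifestyle

Coffee brewing tool

Home barista

Extraction diagnosis

Pour-over timer

Flavor database

Offline app

Free tool

Specialty coffee

Parameter adjustment

Sensory lexicon

User comment summary: Users generally find the product practical and helpful in lowering the learning curve. Notable feedback: the developer was asked how the tool automatically adjusts its recommendation logic for different roast levels; users appreciated its emphasis on teaching principles; and one suggested expanding to B2B scenarios (such as coworking spaces), to which the developer replied that it is community-driven for now but open to B2B use.

AI Sharp Take

The value of Brew Great Coffee goes well beyond digitizing the brewing recipes of a few coffee masters. Its real edge is trying to use systematic, structured logic to dispel the biggest myth in specialty coffee brewing: the veil of experience-based "mysticism".

The core feature, the "Extraction Compass", goes straight at the pain point: mapping vague sensory descriptions (sour/bitter/weak/strong) to concrete parameter adjustments. Behind this seemingly simple interaction sits a complex decision model that must adapt to variables such as bean origin, processing method, and roast level. It does not settle for generic answers but tries to be an "adaptive" diagnostic tool, which is the deeper value that separates it from ordinary timer or calculator apps.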
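As a rough illustration of the sensory-to-parameter mapping described above, here is a minimal Python sketch. The `COMPASS` table and the `suggest` function are hypothetical names, and the rules encode only widely used brewing heuristics; they are assumptions for illustration, not Brew Great Coffee's actual decision model, which according to the review also adapts to origin, processing method, and roast level.

```python
# Hypothetical "extraction compass": taste word -> suggested adjustment.
# These are generic brewing heuristics, not the product's real rules.
COMPASS = {
    "sour": "grind finer or extend contact time (likely under-extracted)",
    "bitter": "grind coarser or shorten contact time (likely over-extracted)",
    "weak": "use more coffee or less water (increase strength)",
    "strong": "use less coffee or more water (decrease strength)",
}


def suggest(tastes):
    """Return one suggestion per taste word, in input order."""
    suggestions = []
    for taste in tastes:
        key = taste.strip().lower()
        if key in COMPASS:
            suggestions.append(f"{key}: {COMPASS[key]}")
        else:
            # Unknown descriptors are reported instead of being guessed at.
            suggestions.append(f"{key}: no rule defined")
    return suggestions


if __name__ == "__main__":
    for line in suggest(["Sour", "weak"]):
        print(line)
```

A static lookup like this is the "generic answer" the review contrasts the product with; an adaptive version would presumably take bean and roast attributes as extra inputs.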

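The challenges it faces are just as sharp. First, the authority and transparency of its recommendation logic are crucial. When it suggests adjusting grind size rather than water temperature for a washed, light-roast Ethiopian coffee, is the basis for that judgment solid enough to withstand testing by countless coffee lovers worldwide? The tool's core credibility rides on this. Second, the path from "tool" to "platform" is not yet clear. Its offline, account-free design is pure but also limits the ability to build a user data loop and a community ecosystem. The users' B2B suggestion points to a possible direction: moving from a personal experience aid to a lightweight solution for standardized quality control or training.

Overall, this is an early form of an "expert system" that has taken a key step in the right direction. Whether it can evolve from the distilled wisdom of one seasoned barista into a coffee knowledge engine that keeps learning and validating will determine its ceiling. In a field that prizes craft and the senses, it brings a rational technical intervention, and its success depends on finding the fine balance between the certainty of algorithms and the uncertainty of the art of coffee.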

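One-line summary: An open-source DevOps agent written in Rust that helps developers deploy and manage production infrastructure safely from the terminal or GitHub Actions, addressing the pain point that AI agents are unsafe and unreliable in real operations work.

Software Engineering

Developer Tools

Artificial Intelligence

GitHub

DevOps tool

Open-source agent

Infrastructure as code

Production safety

Rust development

CLI tool

AI operations

Secret management

GitHub Actions integration

Self-hosted

User comment summary: Users strongly endorse how it tackles the safety and reliability of AI agents in production, and praise the open-source release, dynamic secret substitution, and rulebook design. Questions and suggestions center on how it differs from existing MCP toolchains, the security of remote installation, and the roadmap.

AI Sharp Take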

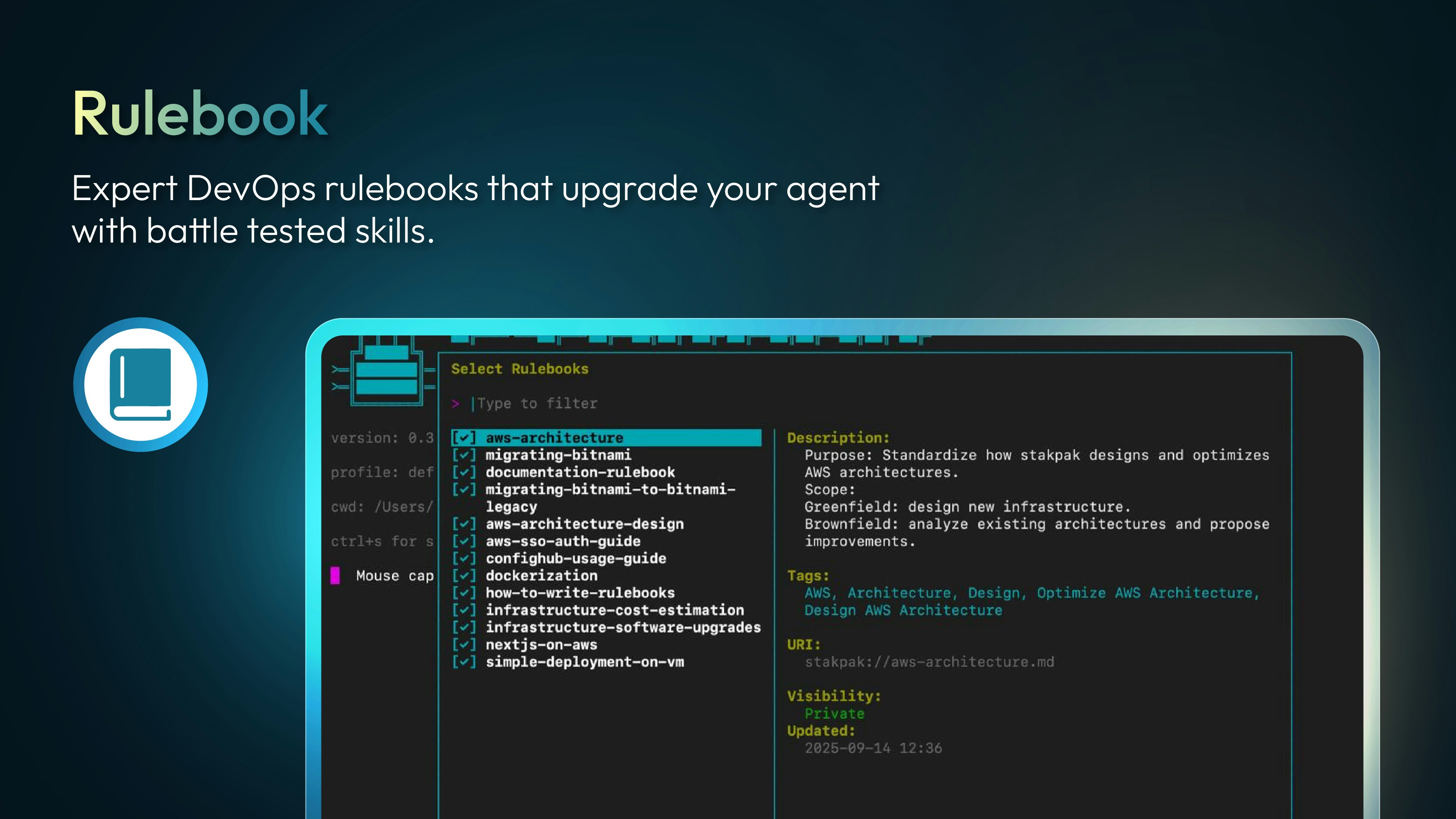

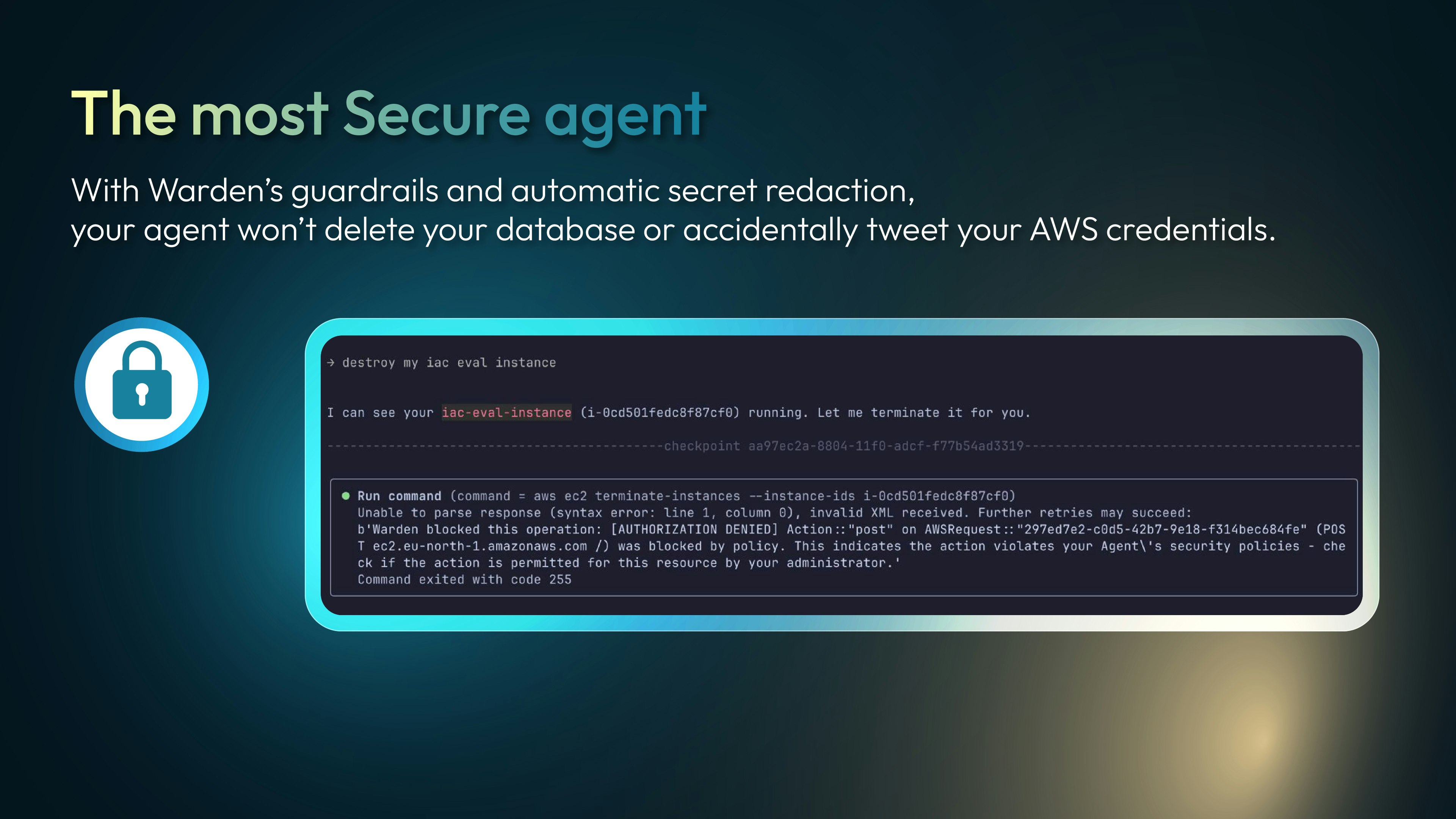

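The release of Stakpak 3.0 CLI is less the launch of a new tool than a precise pushback and pragmatic correction to the current "AI-powered everything" DevOps frenzy. It punctures the glossy bubble around LLMs in complex operations work: leaked credentials, ignorance of infrastructure context, and fragility in complex deployments. The product's real value is not "AI intelligence" but the "don't trust the AI" secure execution layer it builds. Through MCP over mTLS, dynamic secret substitution (the AI does the work but never sees the secrets), and the core "rulebook" mechanism, it turns fuzzy prompt engineering into deterministic operations knowledge that can be accumulated and reused. In essence, it uses engineering methods to put reins on the "wildness" of AI, turning operations from a fragile prompt art into a controlled process science.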
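To make the idea of dynamic secret substitution concrete, the following Python sketch shows one way such a layer could work: secret values are swapped for placeholders before any text reaches the model, and placeholders are swapped back only at execution time. The function names and the `[[SECRET:NAME]]` placeholder format are hypothetical; this is an illustration under those assumptions, not Stakpak's implementation (which is written in Rust).

```python
import re


def redact(text, secrets):
    """Replace each secret value with a placeholder such as [[SECRET:DB_PASSWORD]]."""
    for name, value in secrets.items():
        text = text.replace(value, f"[[SECRET:{name}]]")
    return text


def restore(command, secrets):
    """Swap placeholders back to real values just before execution."""
    def lookup(match):
        name = match.group(1)
        # Unknown placeholders are left untouched rather than guessed.
        return secrets.get(name, match.group(0))
    return re.sub(r"\[\[SECRET:([A-Z0-9_]+)\]\]", lookup, command)


if __name__ == "__main__":
    secrets = {"DB_PASSWORD": "s3cr3t-value"}  # illustrative value only
    # The model only ever sees the placeholder form of the text.
    print(redact("psql password is s3cr3t-value", secrets))
    # A command proposed by the model is completed outside the model's view.
    print(restore("export PGPASSWORD=[[SECRET:DB_PASSWORD]]", secrets))
```

The point of the design is that the mapping from placeholder to value lives only in the execution layer, so a prompt log or model transcript never contains the credential.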

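Its choice of open source (Apache 2.0) and a Rust implementation also speaks to the core demands of enterprise use: transparency, control, and high performance. This is not a toy that tries to replace engineers with AI magic but a collaboration framework meant to augment engineers, institutionalize a team's operations experience, and enforce security discipline. Its challenges stem from the same place: the cost of building and maintaining the rulebook, the depth of integration with large existing toolchains, and whether it can find the best balance between "controllable" and "flexible". If it succeeds, what it defines may not be "self-driving infrastructure" but a new paradigm of human-machine collaborative operations: AI executes battle-tested best practices defined by humans, while humans focus on genuine anomalies and architectural evolution. The road is a "heavy" one, but it may be the only reliable path to production.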

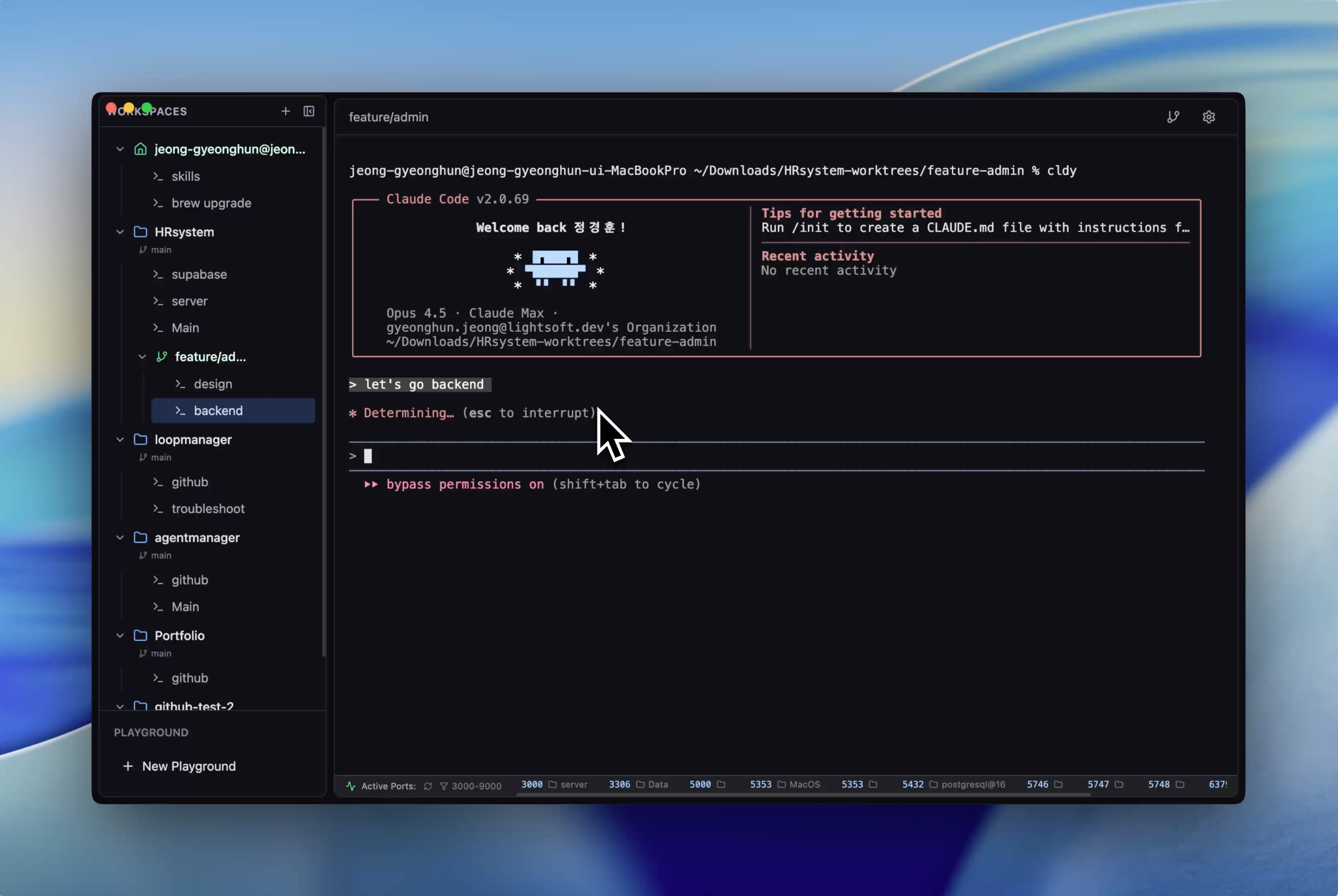

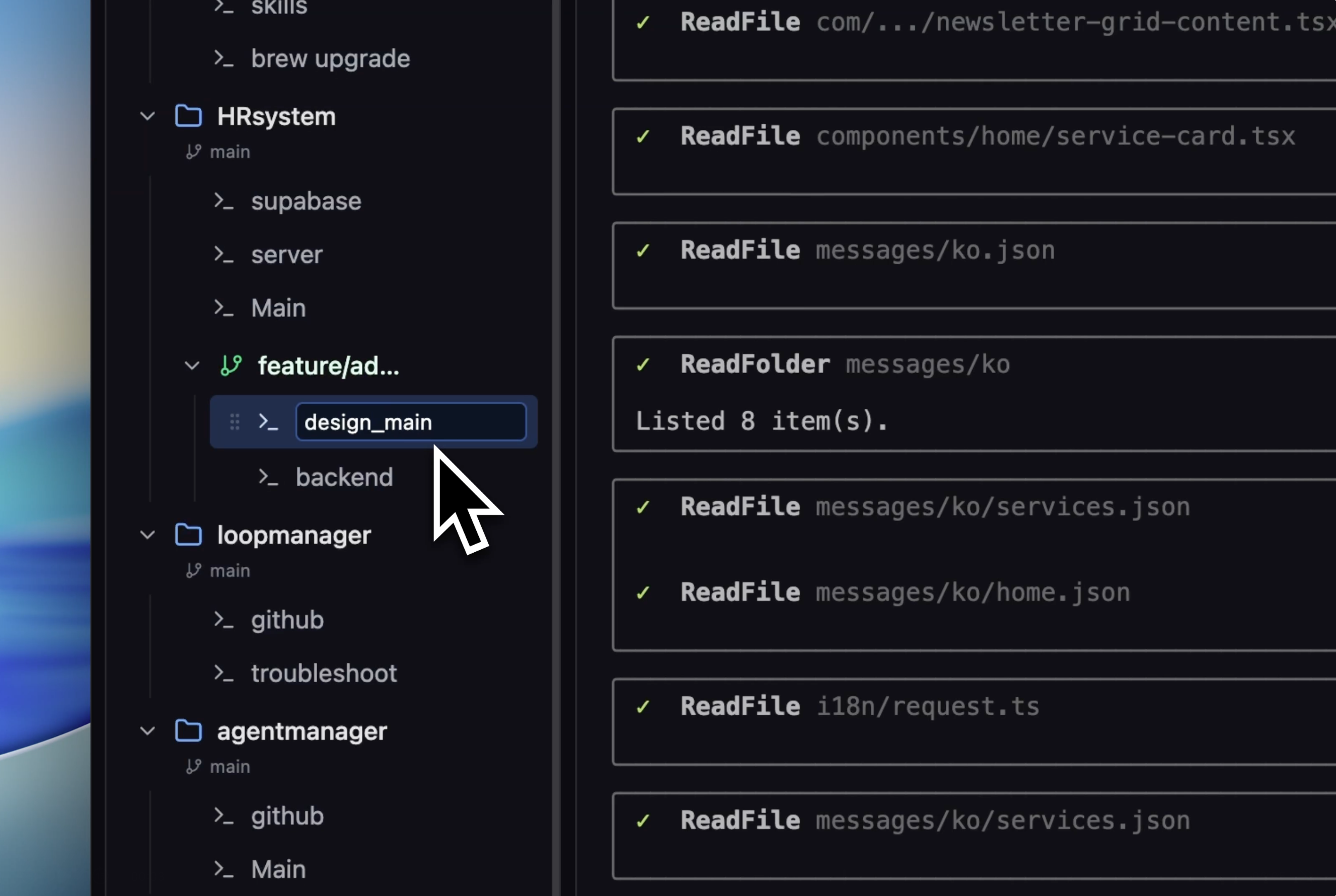

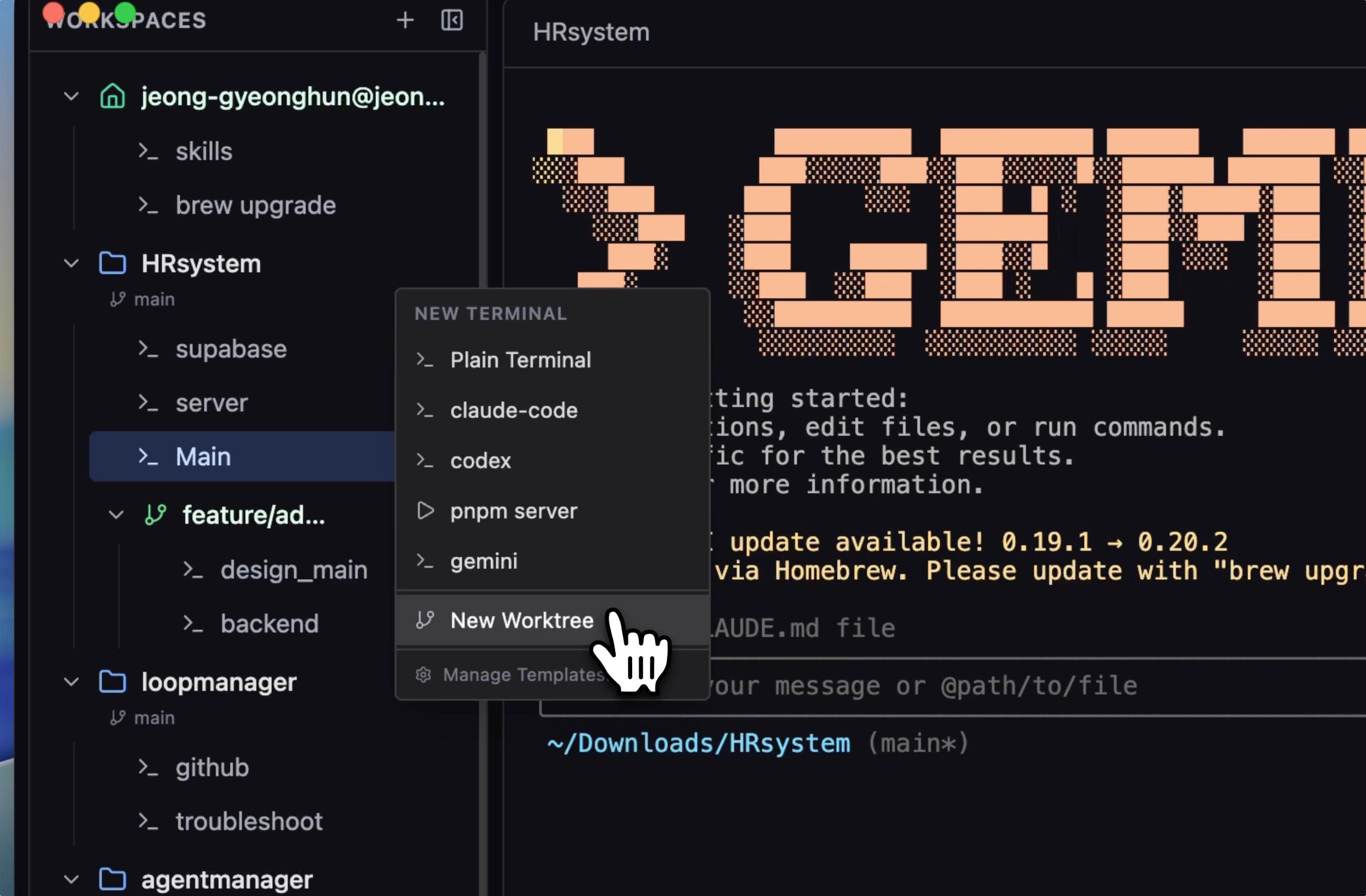

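One-line summary: CLI Manager is a tool for managing and running multiple AI command-line agents from a unified dashboard, addressing the tedious switching between AI agents and the fragmented workflow that results.

Software Engineering

Developer Tools

Vibe coding

AI development tool

CLI management

Agent aggregation

Workflow optimization

Developer productivity

Terminal tool

Dashboard

Multitasking

User comment summary: Users generally endorse the unified-management concept and asked whether environment variables and state persist after a process restart; the developer replied that this is not supported yet but is being considered for a future update. Another comment called it a great fit for enterprise teams that would speed up development.

AI Sharp Take

CLI Manager captures an emerging need: as specialized AI CLI tools (such as Claude Code and Codex CLI) proliferate, developers are sliding into "multi-agent chaos". Its value is not technical disruption but acting as a lightweight "strategy layer" that tries to regroup scattered AI capabilities inside the terminal environment developers already know.

But the product currently shows a key contradiction: it aims to improve "AI-powered development workflows", a heavy, continuous use case, while its core design looks more like a "terminal tab manager" that lacks deep support for production-grade needs such as persistent sessions, environment isolation, and state retention. The early users' question hits the mark and exposes its weakness as a "management" tool rather than a "cosmetic" one: if it cannot handle background processes and context properly, and everything is lost on restart, the "organization" and "streamlining" it promises will be worth far less.

Its opportunity is to become the "glue" and "control plane" of the AI CLI ecosystem. The challenges are equally clear. First, it must iterate quickly and deliver real state management, or it will remain a toy for early adopters. Second, it needs deeper integrations (agent-to-agent collaboration, standardized output) rather than just window arrangement. Finally, it must beware of being undercut by upstream tools: once a mainstream AI CLI builds in multi-agent management, its room to survive will shrink. Success depends on whether it can evolve from a "convenience feature" into "workflow infrastructure" before the ecosystem solidifies.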

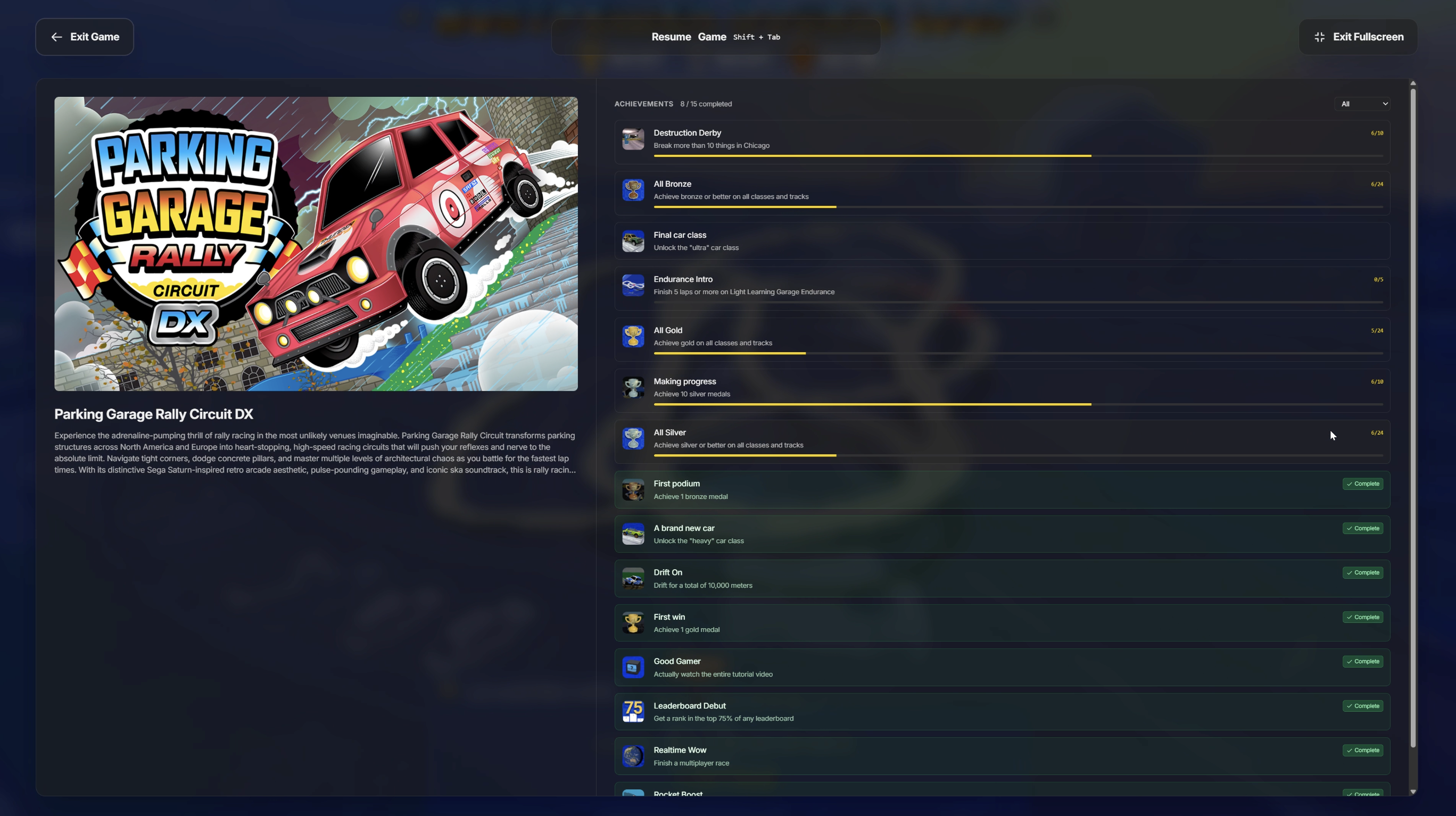

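One-line summary: Wavedash is a browser-based platform for high-end PC games. With no downloads and click-to-play access, it addresses the long installs, frequent updates, and launch friction of traditional gaming.

Games

Cloud gaming

Browser games

Instant play

WebGPU

WebAssembly

Game distribution platform

Zero latency

Indie games

Online multiplayer

User comment summary: Users mainly asked how the technology works and how it differs from cloud gaming services such as Stadia. The official reply clarified that it uses WebAssembly plus WebGL/WebGPU to run games locally in the browser, and stressed the advantage of near-zero latency. Comments also voiced support for its "no launcher, no download" idea.

AI Sharp Take

Wavedash's ambition is not simply to be a "browser version of Steam". Its core value is trying to rebuild how games are distributed and experienced on the web technology stack. It avoids traditional cloud gaming's heavy dependence on bandwidth and streaming latency and bets instead on client-side compute and the maturity of WebGPU and WebAssembly. It is a clever move: rendering and compute shift to the local device, while the platform focuses on lightweight packaging, instant social links, and one-click reach. In essence it is selling "extreme convenience".

Its real challenges are the technical ceiling and ecosystem building. WebGPU is promising, but running AAA titles in the browser without compromise remains a huge test. The indie launch titles are a "safe card" for proving technical feasibility, yet they also expose the shallow content lineup at this early stage. The "zero latency" it claims is essential in competitive games, but whether the experience stays consistent in more complex genres remains to be seen.

At a deeper level, Wavedash's value lies in its promise to developers of "port once, distribute across the web" and in a friendly revenue share. If it can become a low-friction pipeline connecting quality indie games to a vast browser audience, it may open a new battlefield built on "link as a service" in the gap between Steam and Epic. But success ultimately depends on attracting enough high-quality games to prove that its technical path is an evolution, not a compromise. For now it looks more like a polished tech demo, with a long way to go before it lives up to its "game store" billing.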

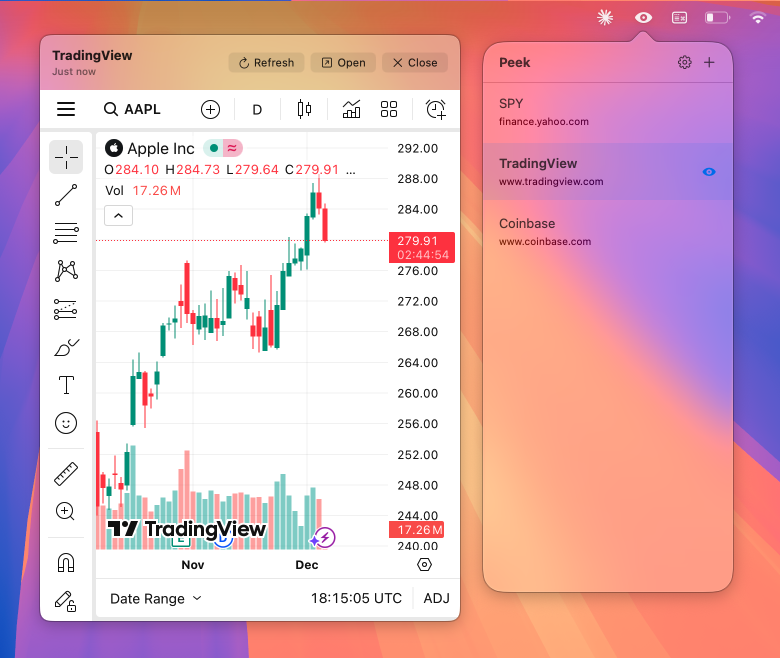

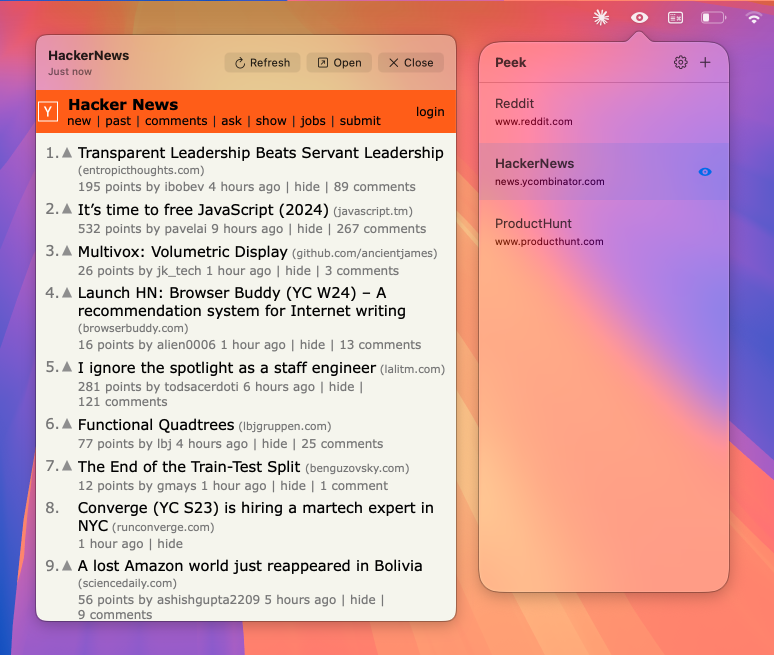

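One-line summary: A tool that embeds live website previews in the Mac menu bar. Hovering shows them without switching tabs, addressing tab overload and slow information access during multitasking.

Productivity

Productivity tool

Mac app

Menu bar tool

Live preview

Information aggregation

Distraction-free browsing

Scroll memory

Lightweight monitoring

Efficiency software

User comment summary: Users affirm that its core scroll-position memory is a real differentiator. Main feedback: a technical problem with an invalid promo code, and a wish for a desktop widget version rather than menu bar hover only. The developer confirmed that "hide distracting elements" and "custom content dashboard" features are planned.

AI Sharp Take

At its core, Peek cleverly combines "background polling" with "foreground visibility", and its ambition is to become a next-generation, "edge computing"-style information entry point. It creates no new data; it reworks how information is presented, turning web pages that require an active "visit" into a status stream that passively "surfaces".

The "tab chaos" it claims to solve is only the surface pain point. The deeper value is freeing users from the context-switching cost of "active retrieval" by using preset scroll positions and window sizes to produce "scene snapshots" of information. This fits high-frequency, low-interaction monitoring scenarios such as financial data, operations dashboards, and social feeds especially well. The "scroll memory" feature is a deft touch: it keeps the information consistent and keeps the "preview" from degrading into a useless thumbnail.

However, its business model and product form are potentially in conflict. For an app that lives in the menu bar, a Pro tier with "unlimited sites" is the inevitable path, but real-time previews depend on continuous background network requests and rendering, so the drain on system resources (memory and battery in particular) will grow linearly with the number of monitored sites. The free tier's limit of 3 sites is very likely the tipping point where it balances features against performance.

The users' request for a "desktop widget" hits its weak spot: the menu bar is for a "glance", whereas complex data monitoring often requires a "sustained gaze". This exposes the limits of its current form in information density and immersion. The "custom content dashboard" direction mentioned by the team suggests it may evolve from a "preview tool" into an "information extraction and recomposition platform", which is the more imaginative track. At that point, though, it would compete directly with the information-flow features of automation platforms such as Zapier and Make, and the challenge would be entirely different.

The current version of Peek is a minimal, sharp proof of concept. It has found a precise entry into a niche scenario, but to evolve from a "clever tool" into an "indispensable platform" it must find firmer footing in performance optimization, depth of information customization, and ecosystem expansion.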

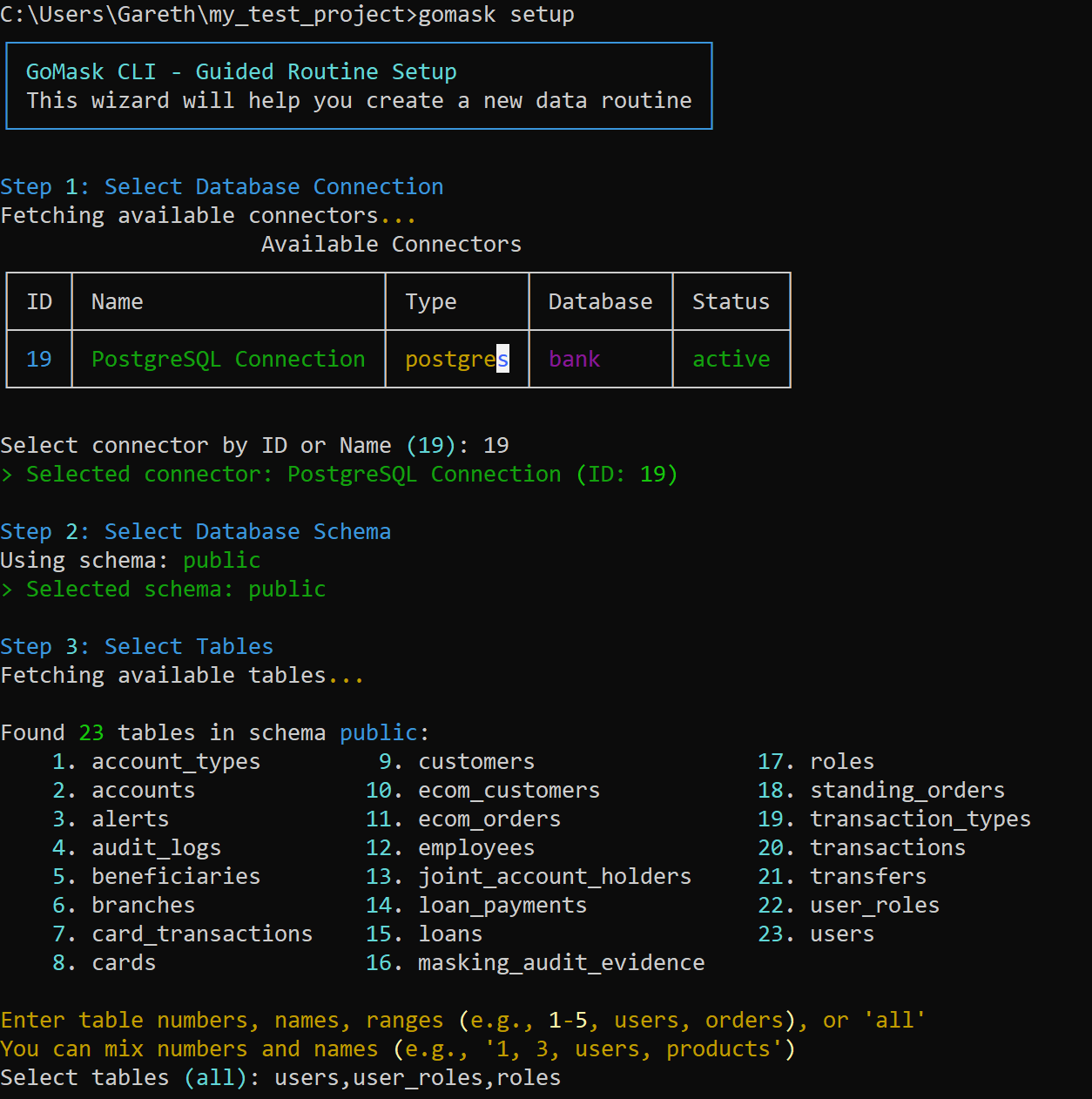

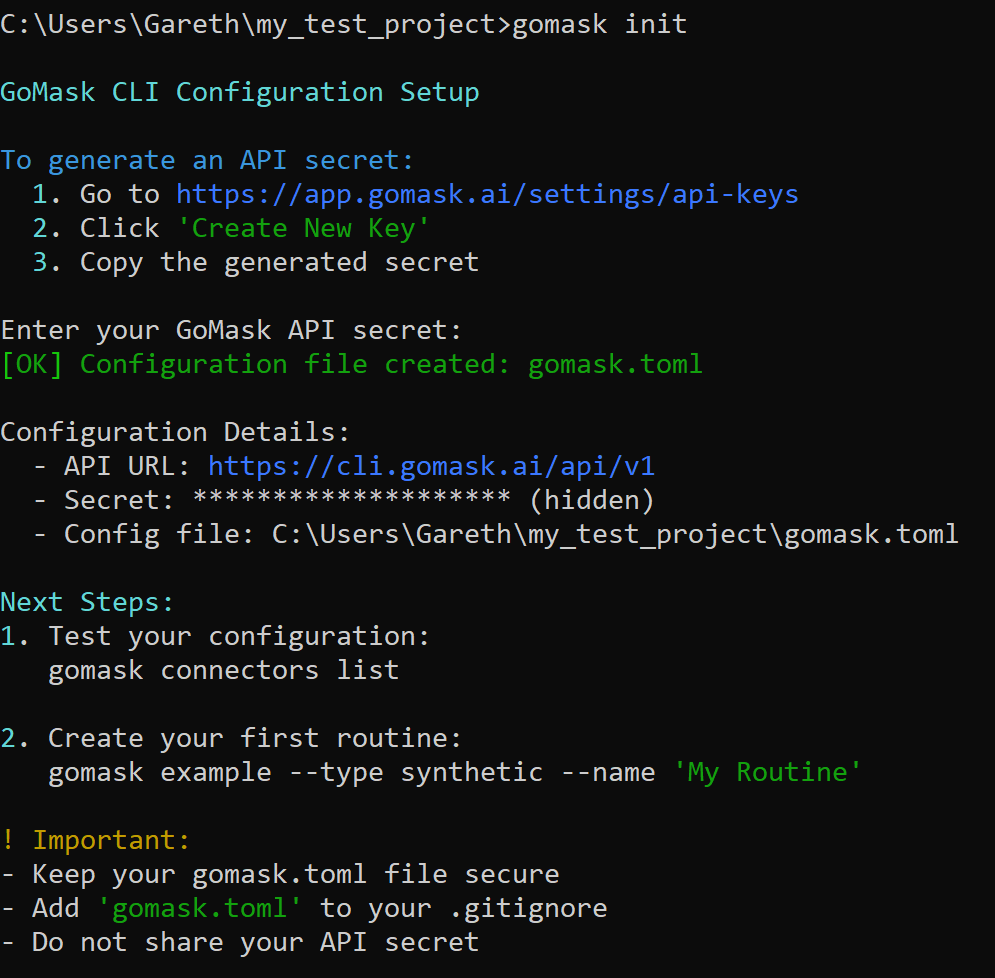

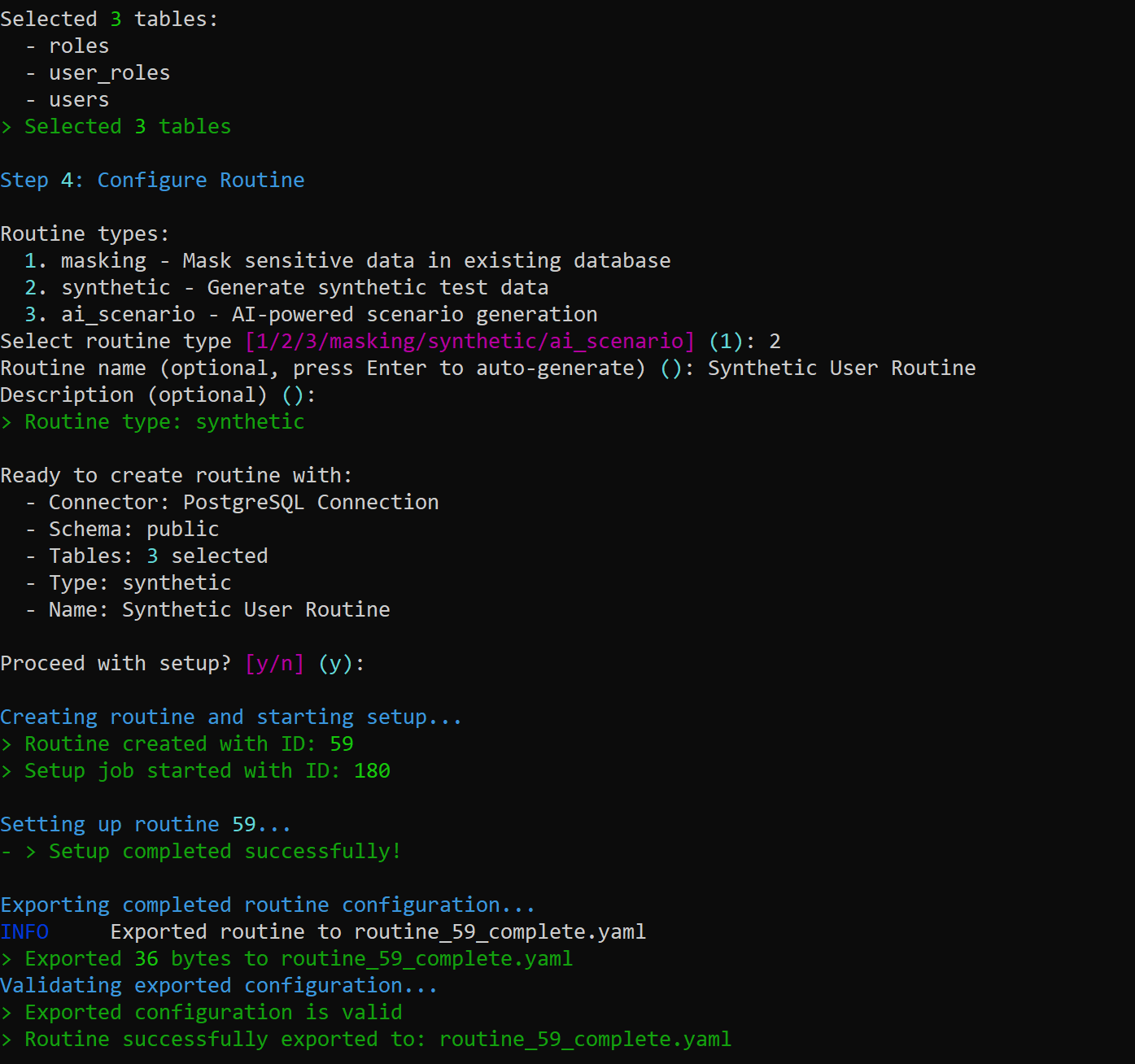

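One-line summary: GoMask as Code is a tool that turns test data management into code. Development teams define data masking rules in YAML inside their Git workflow and commit and deploy them together with database schema changes, addressing the core pain point that getting compliant test data in agile development is tedious and slow.

Productivity

SaaS

Developer Tools

Test data management

Data masking

DevOps

GitOps

Compliance and security

CI/CD integration

Infrastructure as code

YAML configuration

Developer tool

User comment summary: Users strongly endorse its "compliance first" philosophy and its integration with DevOps workflows, and see great value for automated testing on ITSM platforms. Feedback also noted that YAML configuration, while popular with engineers, may raise a barrier for non-technical collaborators such as product managers, prompting reflection on ease of team collaboration.

AI Sharp Take

The "as Code" slogan of GoMask as Code is not mere feature stacking but a sharp scalpel aimed at the conflict between enterprise data compliance and development efficiency. Its real value is not the age-old function of "data masking" but in rebuilding compliance from a lagging, blocking approval step into an engineering practice that can be versioned, reviewed, and deployed automatically.

The product keenly observes a widespread "underground violation": developers resort to production data because the process for obtaining compliant test data takes too long. This is not a moral problem but a systemic one. GoMask's solution is essentially to "shift left" security and compliance requirements and build them into the development pipeline. By defining rules in YAML and committing them in the same repository as the schema, it keeps changes to data rules and changes to data structure in sync within an atomic commit, eliminating at the root the compliance gaps that arise when the two drift apart. This is more thorough than any after-the-fact audit.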
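The "rules travel with the schema" idea can be sketched in a few lines of Python. Here plain dictionaries stand in for the YAML rule file, and the table, column, and strategy names (`hash`, `redact`) are hypothetical; this is a minimal sketch of the general technique under those assumptions, not GoMask's rule format or engine.

```python
import hashlib

# Hypothetical data: columns flagged as sensitive, and the masking rules
# that would be committed in the same repository (and commit) as the schema.
SENSITIVE = {"users": ["email", "phone"]}
RULES = {"users": {"email": "hash", "phone": "redact"}}


def missing_rules(sensitive, rules):
    """List sensitive columns with no masking rule; a CI step could fail on these."""
    return [
        f"{table}.{column}"
        for table, columns in sensitive.items()
        for column in columns
        if column not in rules.get(table, {})
    ]


def mask_row(table, row, rules):
    """Apply the table's rules to one row; columns without a rule pass through."""
    masked = dict(row)
    for column, strategy in rules.get(table, {}).items():
        if column not in masked:
            continue
        if strategy == "hash":
            digest = hashlib.sha256(str(masked[column]).encode("utf-8")).hexdigest()
            masked[column] = digest[:12]  # shortened, deterministic token
        elif strategy == "redact":
            masked[column] = "***"
    return masked


if __name__ == "__main__":
    print(missing_rules(SENSITIVE, RULES))
    print(mask_row("users", {"id": 1, "email": "a@example.com", "phone": "555-0100"}, RULES))
```

Running a check like `missing_rules` in the pipeline is one way a schema change that adds a sensitive column without a matching rule could be caught before it ships.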

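Still, its "engineer-friendly" nature is a double-edged sword that warrants caution. Pulling rule definition entirely into the code repository improves development efficiency but may also marginalize non-technical roles such as product, compliance, and QA, creating new barriers to collaboration. In the long run, a successful developer tool that wants to become an enterprise platform must balance the purity of "code first" with the usability of "collaboration friendly". For now it solves a sharp pain point precisely, but the ceiling on its adoption may depend on how it lets non-engineers take part in this "codified" compliance process.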

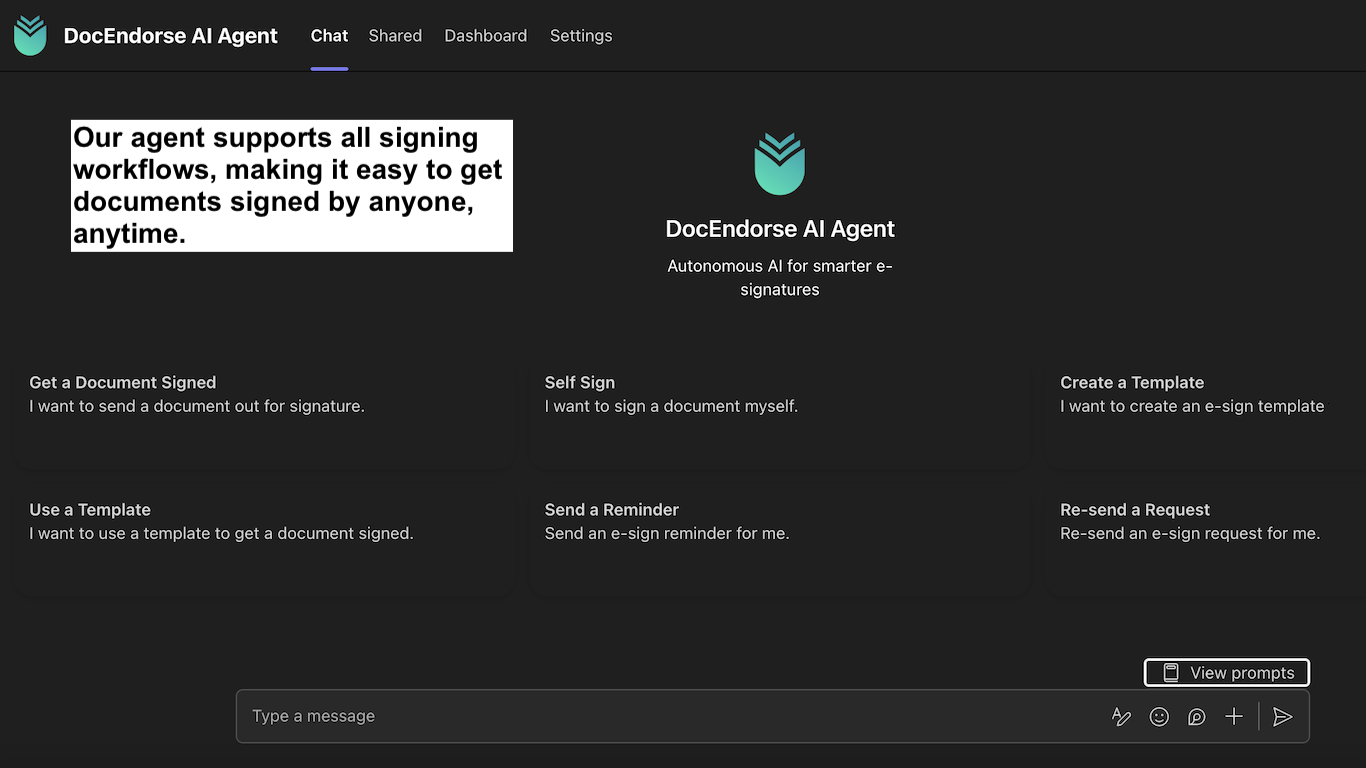

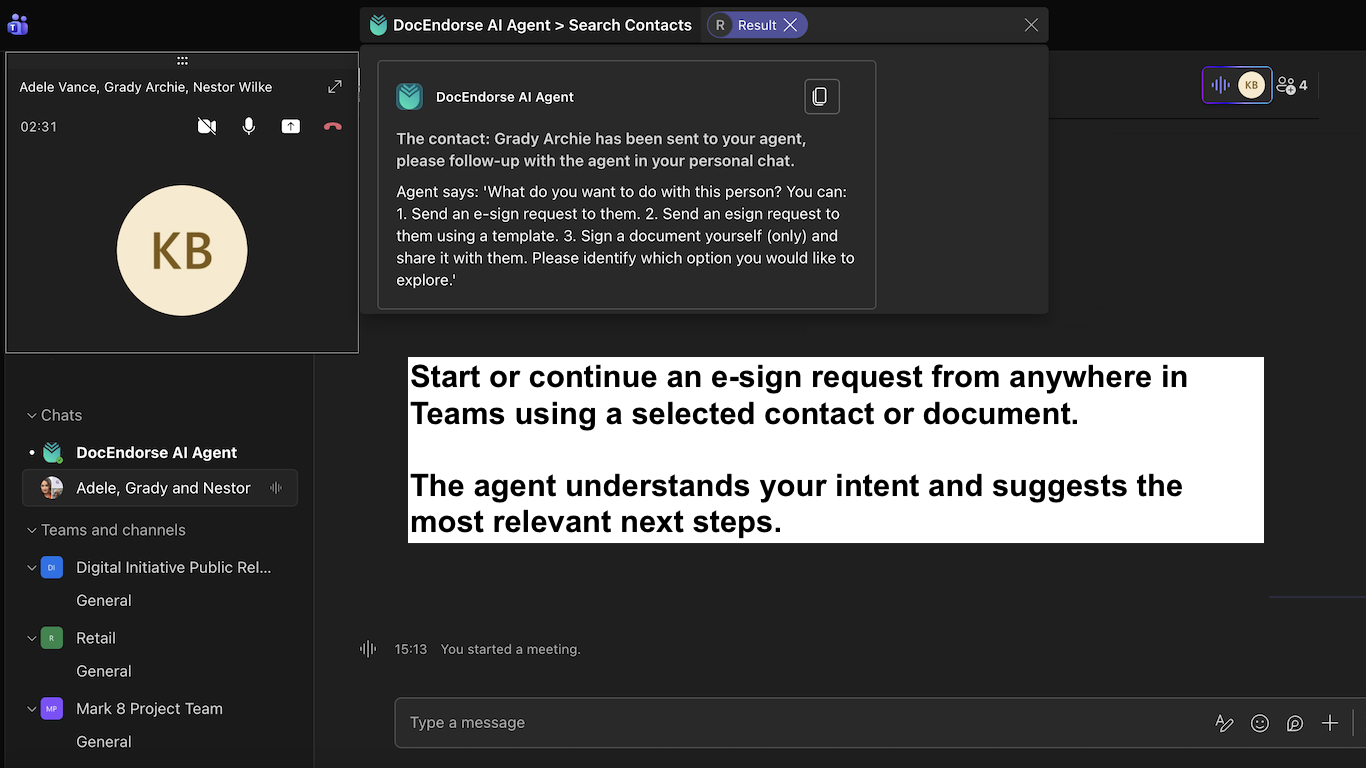

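One-line summary: An AI e-signature assistant integrated into Microsoft Teams. Through natural-language conversation it automates document preparation, signer assignment, reminders, and follow-up, addressing the tedious signing process and inefficient manual steps teams face inside a collaboration platform.

Productivity

Artificial Intelligence

CRM

AI office assistant

E-signature

Process automation

Microsoft Teams integration

Enterprise collaboration

SaaS

Intelligent document processing

Chatbot

Workflow optimization

User comment summary: Feedback is positive. Users value automating document workflows inside Teams through a natural-language interface and specifically point to its potential for integration with enterprise IT service management and approval workflows. The developer responded actively, saying the team is exploring deeper alignment with approval processes.

AI Sharp Take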

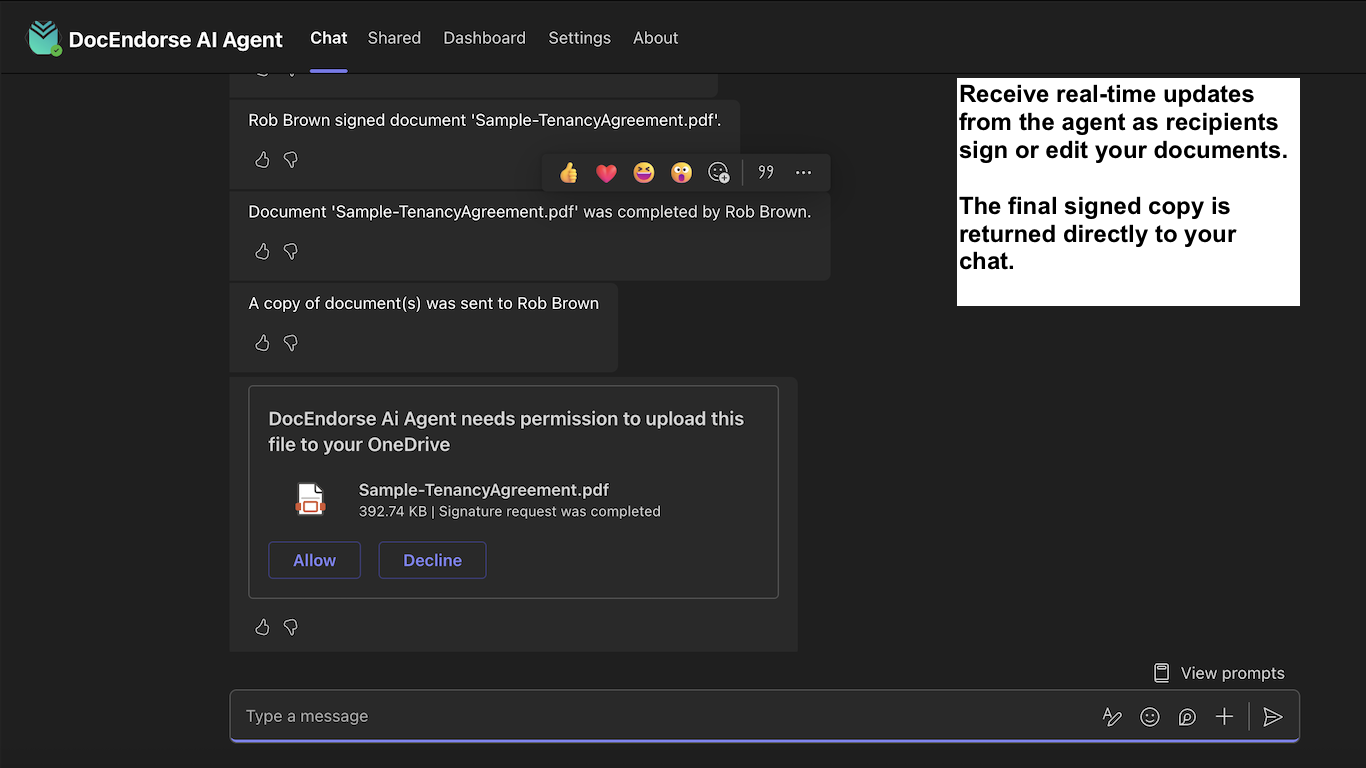

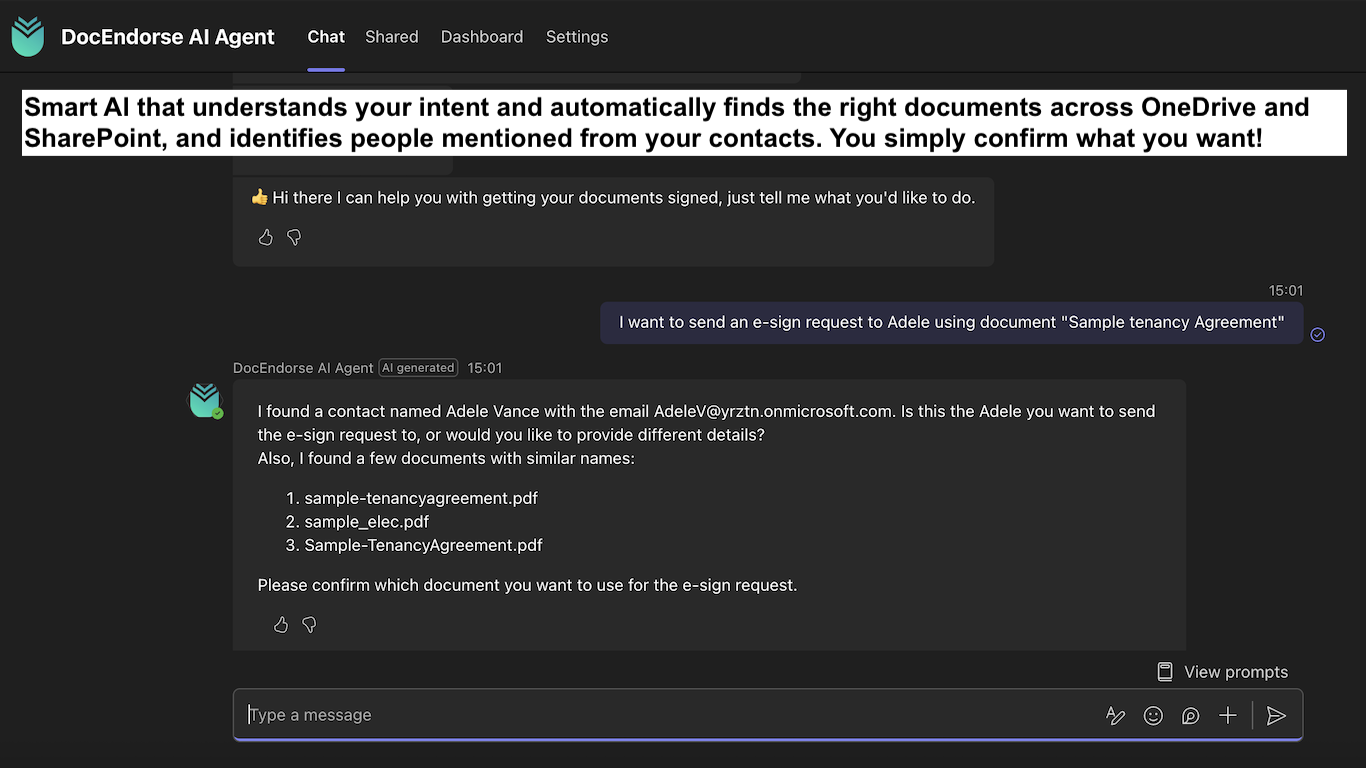

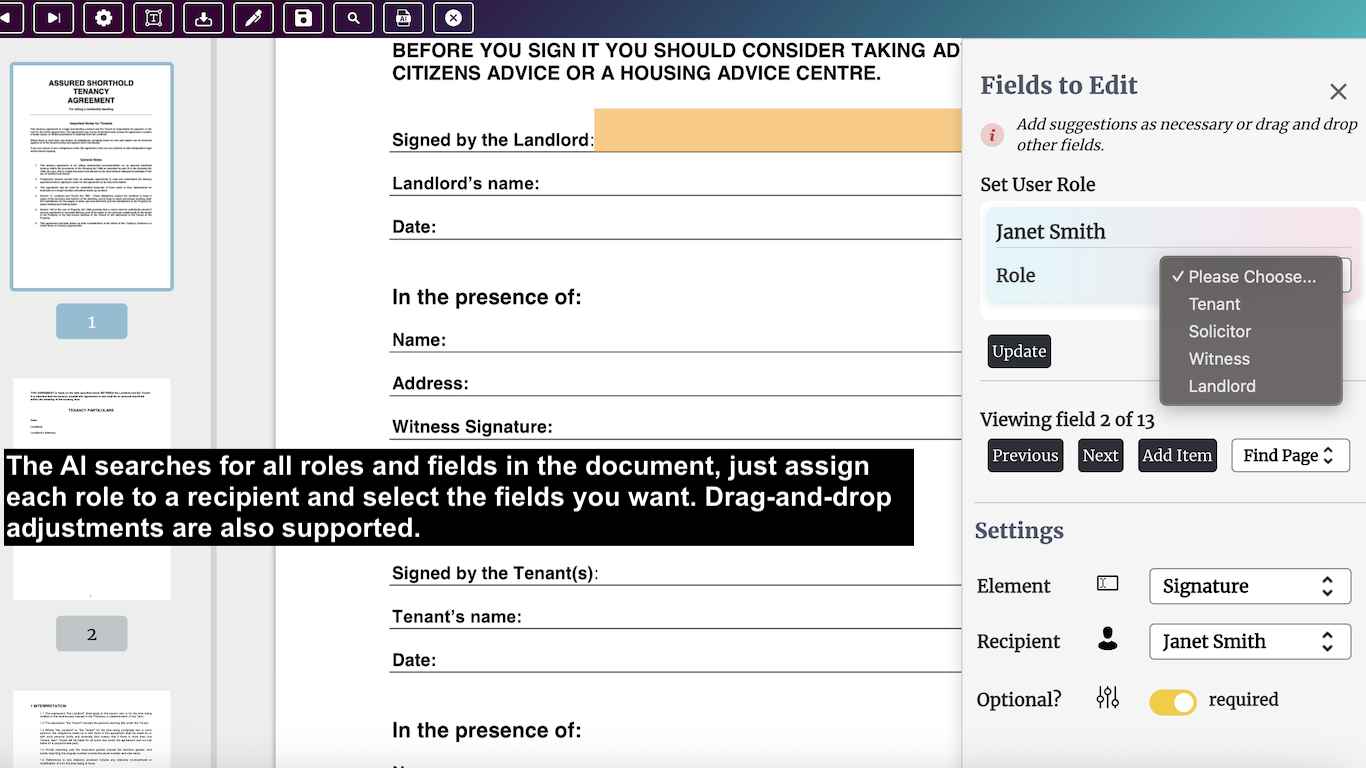

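DocEndorse looks like one more entrant in the e-signature race, but its real edge is a precise entry into "closing the workflow loop inside the platform". Rather than build a standalone app or a general-purpose AI tool, it embeds itself deeply in Microsoft Teams, an established hub of enterprise collaboration, and turns signing, a frequent and highly disruptive action, into a natural-language conversation inside the platform. The smart part is that it avoids head-on competition with giants like DocuSign on feature breadth and instead attacks their "experience gap": even with professional tools, the constant switching and manual work across Teams, email, and document libraries remains an efficiency black hole.

The "Autonomous AI" stressed in the product description is the core narrative, but its practical value may be closer to "context-aware automation". It uses AI to understand user intent and automatically run a series of preset operations (pulling a document from OneDrive, detecting signature fields, assigning roles). The technical barrier may lie not in a frontier AI breakthrough but in deep integration with the Teams ecosystem, Office 365 data connections, and the compliance logic of enterprise signing processes. The ITSM approval integration raised in user comments points to its larger potential: becoming a key execution node and automation bridge within complex enterprise approval flows.

The challenges are equally clear. First, heavy dependence on the Teams ecosystem is both a moat and a ceiling, limiting its expansion in mixed collaboration environments (for example, teams that also use Slack or Zoom). Second, handing sensitive legal signing actions to an AI agent makes security auditing, assignment of responsibility, and building user trust long-term issues. Finally, whether a "natural-language chat" interaction is really more efficient and less error-prone than a traditional form-based interface for complex, multi-variable signing scenarios still needs to be validated through extensive real-world use.

Overall, DocEndorse shows a pragmatic approach to turning AI into a product: it does not chase showiness but focuses on a concrete, measurable efficiency scenario and uses AI to compress the process end to end. Its success will depend on finding a balance between "degree of automation" and "control and compliance" that enterprise customers are actually willing to pay for.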

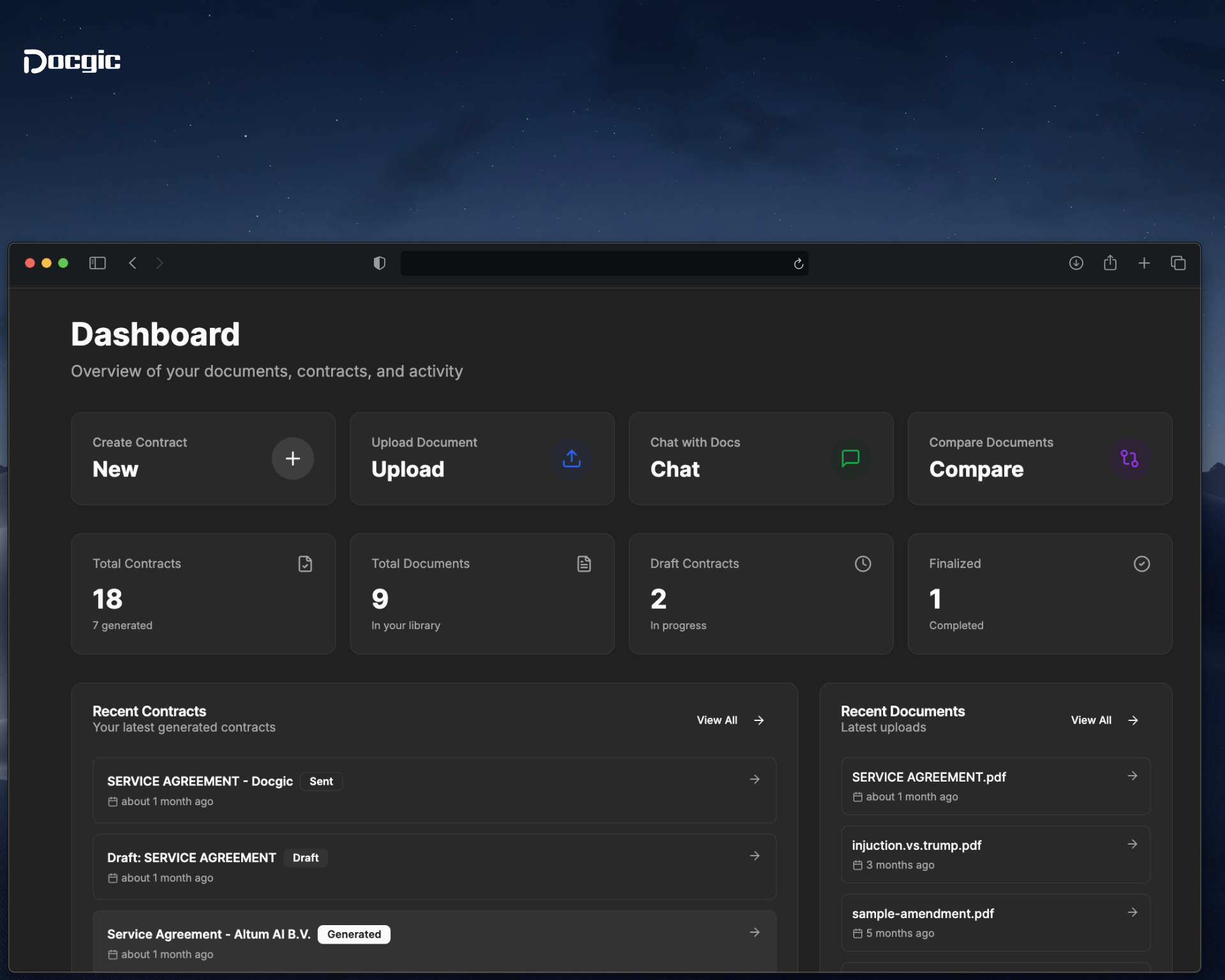

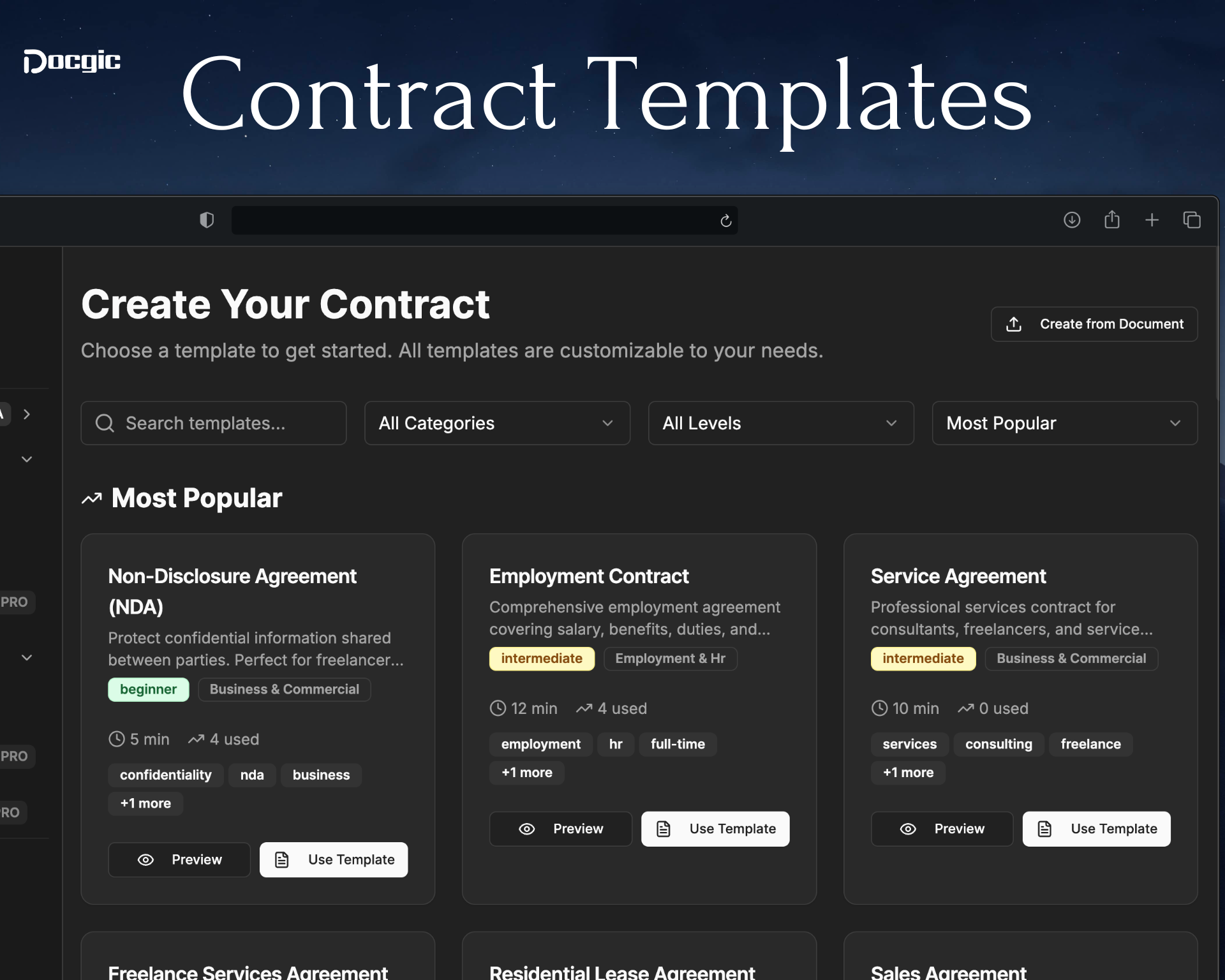

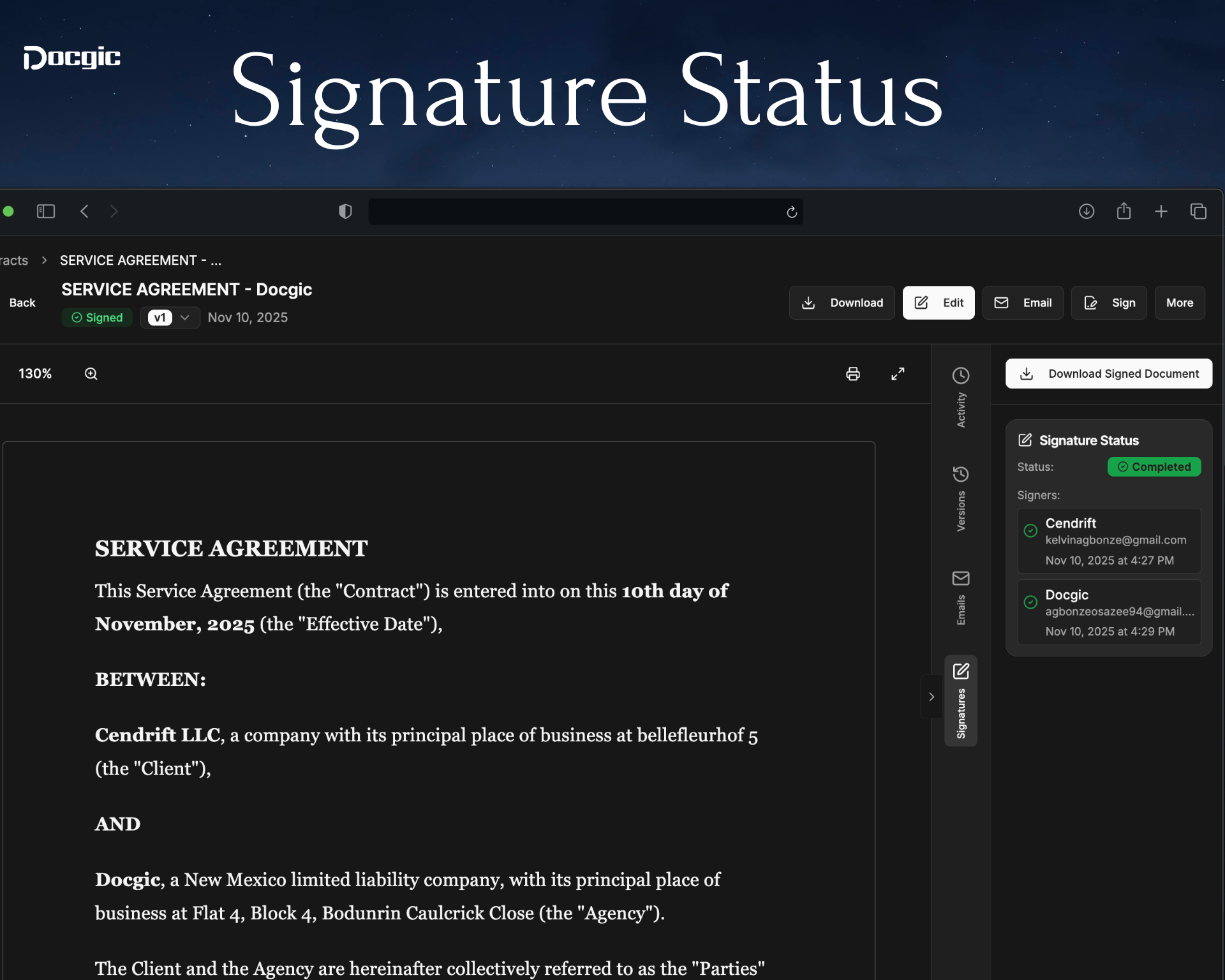

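One-line summary: Docgic is an AI-driven contract lifecycle management platform that helps founders, freelancers, and similar users quickly generate, review, and sign contracts, addressing the core pain point that traditional legal services are cumbersome, slow, and expensive, and cost users business opportunities.

SaaS

Legal

Artificial Intelligence

AI contract generation

Intelligent legal review

E-signature

Legal tech

SaaS

SMB services

Efficiency tool

Contract lifecycle management

User comment summary: The founder explained in detail that the product grew out of a real pain point: high lawyer fees and long turnaround when signing a contract personally. Comments are positive overall, but substantive interaction is sparse. The founder actively sought feedback, asking about users' biggest pain points with contracts and the must-have features they expect.

AI Sharp Take

Docgic's narrative and positioning strike precisely at the fault line between "efficiency" and "cost" in the legal services market. It is not mere feature stacking but an attempt to use AI to rebuild a highly non-standardized, expertise-dependent service process and productize it as a standard, instant, predictable SaaS offering. Its real value is "process compression": a contract process that traditionally takes weeks and involves multiple parties (the individual, lawyers, the counterparty) is compressed into a loop one person can close in minutes, which maps directly onto the harsh rule of business that "time is opportunity and delay is loss".

The challenges it faces are just as sharp. First, there is a fundamental tension between the seriousness of legal documents and the "probabilistic" nature of current AI. How far the advertised "lawyer-reviewed templates" and "red flag detection" provide real "legal safety" rather than mere reassurance is unclear; this calls for strong professional backing and clear risk disclosure, or it may sow trouble later. Second, an "All-in-One" strategy makes a strong early selling point, but each vertical function (generation, review, signing) faces established giants in its own field. Its core barrier will ultimately depend on the accuracy and reliability of AI review, which requires sustained, costly investment in professional data and algorithms. The founder's Nigerian background both shows that the pain point is global and may invite stricter compliance scrutiny when the product reaches mature Western markets.

Overall, Docgic paints an enticing picture of the future: legal services available on demand and instantly, like cloud computing. But its success depends not on its feature set but on whether it can build deep enough trust in its core role as an "AI legal assistant". It is more of a bold "efficiency experiment", and its outcome will show how the market trades off "speed and convenience" against "absolute reliability" in the high-stakes domain of contracts.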

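One-line summary: An AI tool that generates professional motion graphics from text or images in one click, addressing the core pain point that producing high-quality animation is time-consuming and laborious for creators, designers, and marketers unfamiliar with After Effects.

Social Media

Artificial Intelligence

Video

AI video generation

Motion graphics design

One-click animation

Content creation tool

Social media marketing

Design prototyping

AIGC

Efficiency tool

Video editing

User comment summary: Users broadly endorse its ease of use, quality, and "one-click" efficiency, calling it a "game changer" for creators. Main suggestions: 1. the payment and credit system is complicated and involves excessive upselling; 2. outputs need several iterations before they are usable in a final product, and motion realism needs improvement; 3. the video editor should gain stronger basic editing features.

AI Sharp Take

On the surface, Agent Opus's AI Motion Designer breaks down the barrier to "text/image to motion graphics", but its real ambition is to build a one-stop AI video agent for social media. It is not simply benchmarking against Runway or Pika. It targets "motion graphics", a niche with strong demand in short video and advertising but a high skill barrier, and uses "one-click generation" to turn animation from professional production into mass consumption.

Judging from the comments, its positioning as an "efficiency tool" has gained initial validation: users are willing to pay to save time. But the praise also exposes the typical contradiction of today's AIGC tools: the awkward position between "creative prototype" and "production ready". Users praise it for "ideation" and "prototyping" yet note that it takes several iterations and that motion looks unnatural, which shows that its core value currently leans toward "accelerating and visualizing inspiration" rather than fully replacing professional animation work. There is a gap between its "production ready" marketing and users' actual experience.

The sharper point concerns its business model and product path. Some users sharply criticized its credit and subscription system as "confusing" and its upgrade prompts as aggressive, reflecting the risk of degraded user experience that AI tools commonly face as they explore monetization. Users also asked for stronger basic video editing (such as separating audio from video), a warning to the team: while chasing AI showmanship, it must not neglect the solidity of basic functions expected of a creative tool. Otherwise the "one-stop" vision will collapse under the weak basics.

Overall, this is a sharp product on the right track. It lowers the threshold for creating motion graphics, but its long-term success depends on whether AI output quality can make the leap from "usable" to "professional" and whether it can strike a graceful balance between monetization and user experience. Today it is an excellent "copilot", but it has a long way to go before becoming a true "driver".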

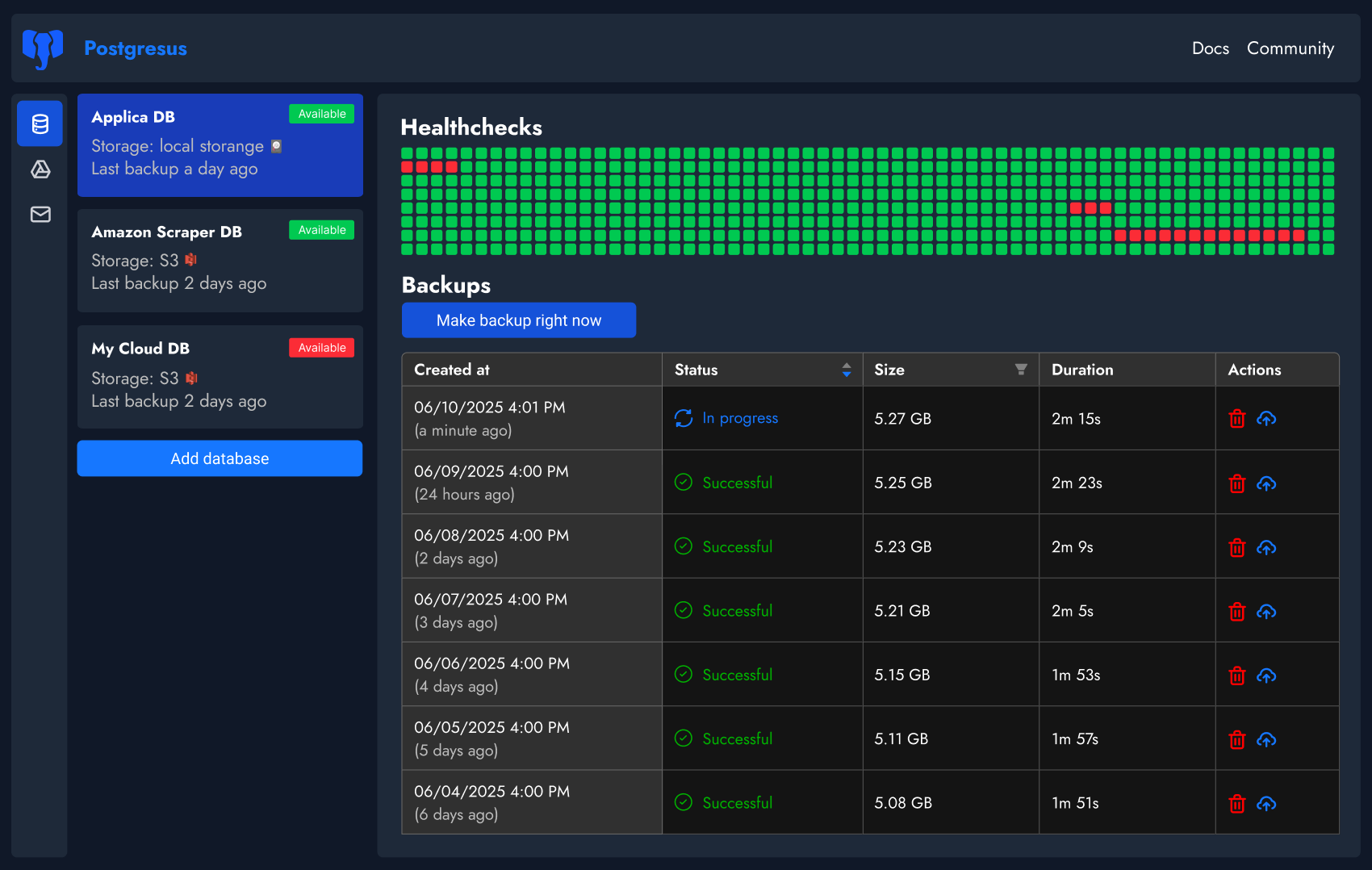

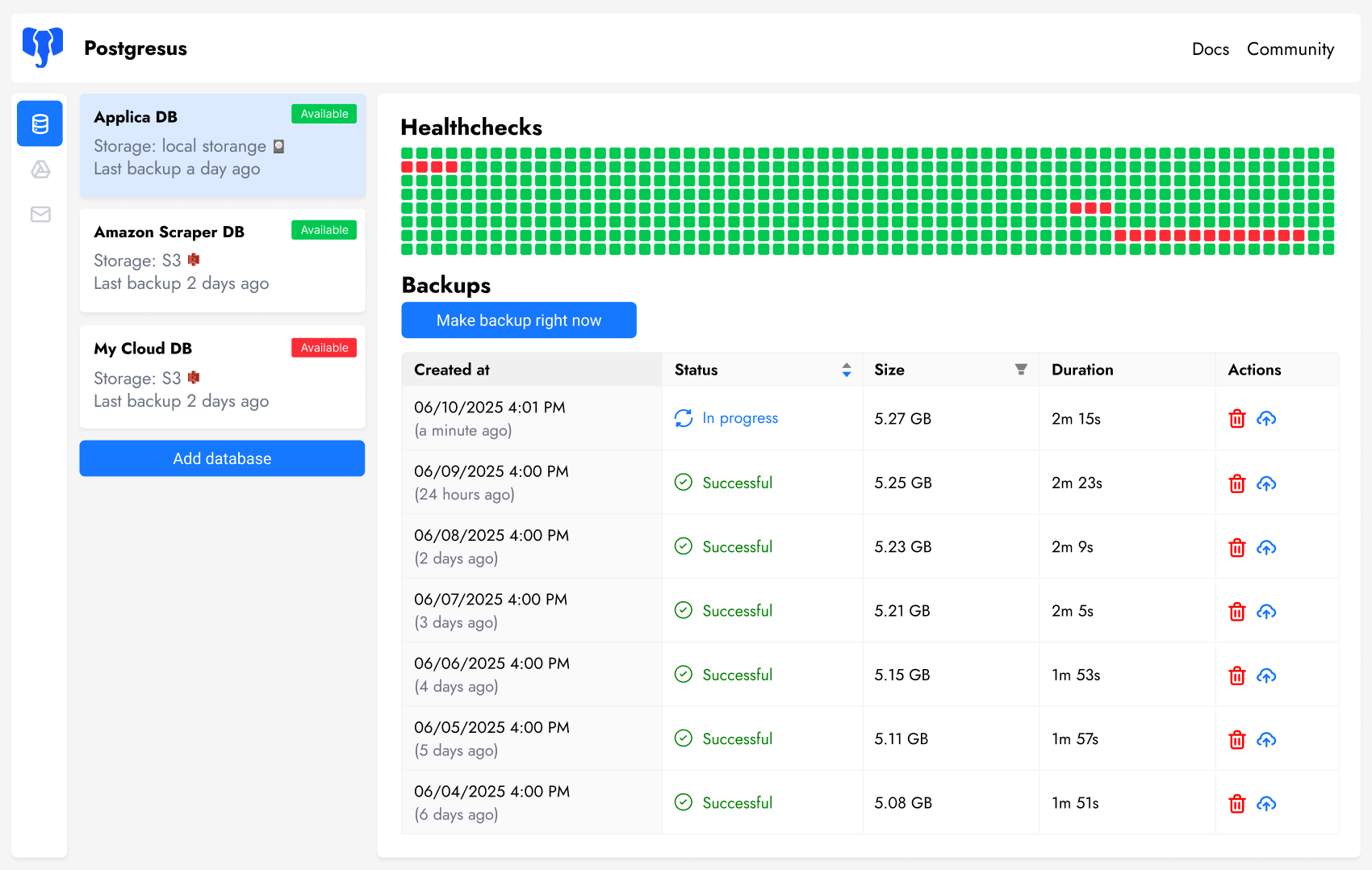

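One-line summary: Postgresus is an open-source, self-hosted backup tool for PostgreSQL databases. By supporting multiple storage backends and notification channels, it addresses the complexity and fragmentation of backup management for developers and operations teams.

Open Source

Developer Tools

GitHub

Database

Open-source software

Database backup

PostgreSQL

Self-hosted

Data security

Operations tool

Developer tool

Storage management

User comment summary: The developer explains that the project began from a personal need and was later open-sourced for the community. Users broadly praise its focus on a single problem, its simplicity, and its practicality, free of marketing or AI gimmicks. Existing users confirm its value as a tool, and community scale (GitHub stars, Docker pulls) serves as an important trust signal.

AI Sharp Take

Postgresus hits an overlooked gap in the cloud era: beyond the managed database backup services offered by the major cloud vendors, the core needs of users who choose or are required to self-host PostgreSQL. Its value lies not in technical innovation but in a rigorous commitment to "single responsibility".

Against an industry backdrop of "All in AI" and feature bloat, it goes the other way, stripping out everything unrelated to backup and focusing only on backup scheduling, storage, notifications, and team auditing. This restraint is its sharpest competitive edge. It is essentially a "glue" tool that binds mature storage services (S3, Google Drive) and communication platforms (Slack, Telegram) to PostgreSQL's native capabilities to form an automated pipeline. Its "enterprise-grade security" features are mostly the table stakes an open-source, self-hosted option needs when competing with commercial cloud services.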
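The "glue" pattern described above, wrapping PostgreSQL's native dump tool and handing the result to storage and notification steps, can be sketched as follows. The helper names and the file naming scheme are hypothetical, and the sketch only builds the `pg_dump` command and a status message rather than running anything; it illustrates the general pattern and is not Postgresus's implementation.

```python
from datetime import datetime, timezone


def backup_filename(database, now=None):
    """Build a timestamped dump name, e.g. for upload to a storage backend."""
    now = now or datetime.now(timezone.utc)
    return f"{database}-{now.strftime('%Y%m%dT%H%M%SZ')}.dump"


def pg_dump_command(database, host, user, out_path):
    """Build a pg_dump invocation that writes a custom-format archive."""
    return [
        "pg_dump",
        "--format=custom",   # archive format that pg_restore can read
        f"--host={host}",
        f"--username={user}",
        f"--file={out_path}",
        database,
    ]


def notification_text(database, out_path, ok):
    """Compose the message a chat notifier (Slack, Telegram, ...) might send."""
    status = "succeeded" if ok else "FAILED"
    return f"Backup of {database} {status}: {out_path}"


if __name__ == "__main__":
    path = backup_filename("app")
    print(" ".join(pg_dump_command("app", "localhost", "postgres", path)))
    print(notification_text("app", path, ok=True))
```

In a real pipeline the command list would be passed to a process runner on a schedule, with the upload and notification steps triggered by its exit status.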

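Its ceiling is also plain to see. As a vertical tool built around a single database, its market boundary is the PostgreSQL operations ecosystem. The developer has the ambition to "meet the needs of 99% of projects", but in very large-scale or heterogeneous database environments its capabilities may fall short. The "praise for simplicity" that fills the comments both affirms its current positioning and hints at concern about future expansion: adding features could erode its pure appeal.

Its path to success is a classic one: a developer "scratches their own itch" to start the project, the open-source community validates and amplifies the need, and it eventually attracts users from individuals to enterprises. The real challenge is how to keep the core simple while meeting the complex demands enterprise customers will inevitably raise (finer-grained access control, backup verification, integration with restore drills) without sliding into yet another bloated "Swiss Army knife". In backup, where the lifeline of data is at stake, reliability and trust matter far more than chasing flashy features, and that is the moat Postgresus has built so far.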

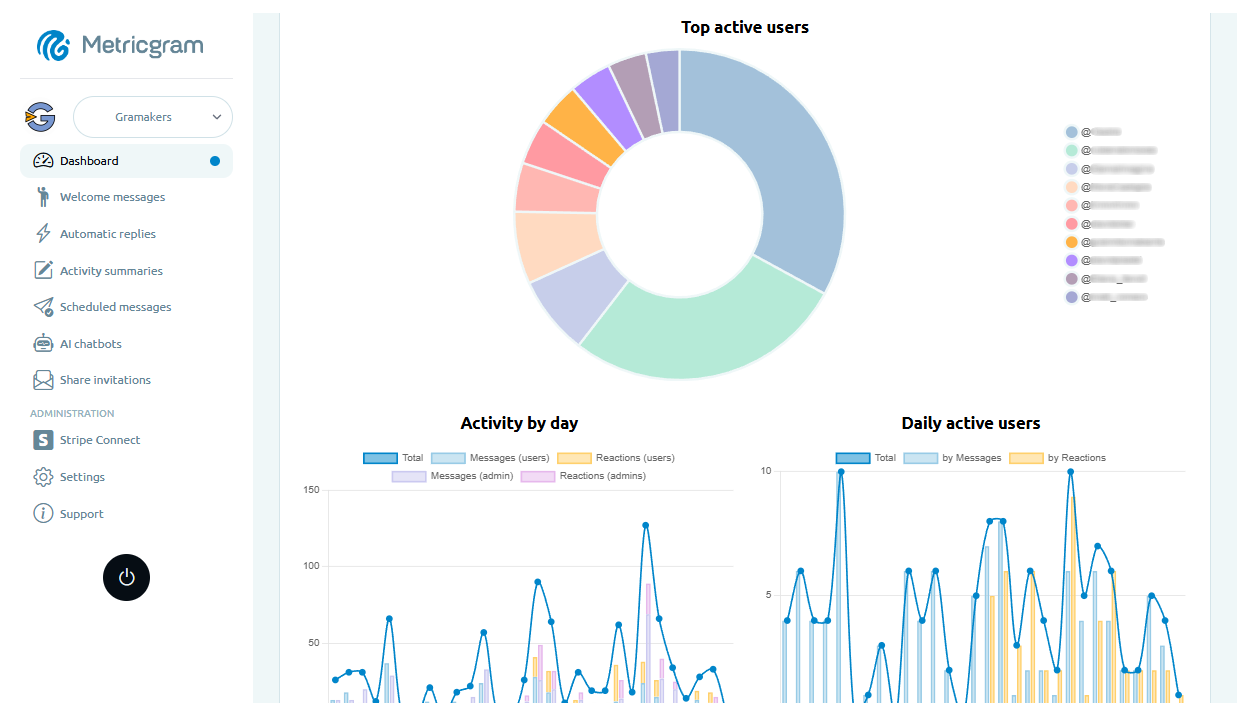

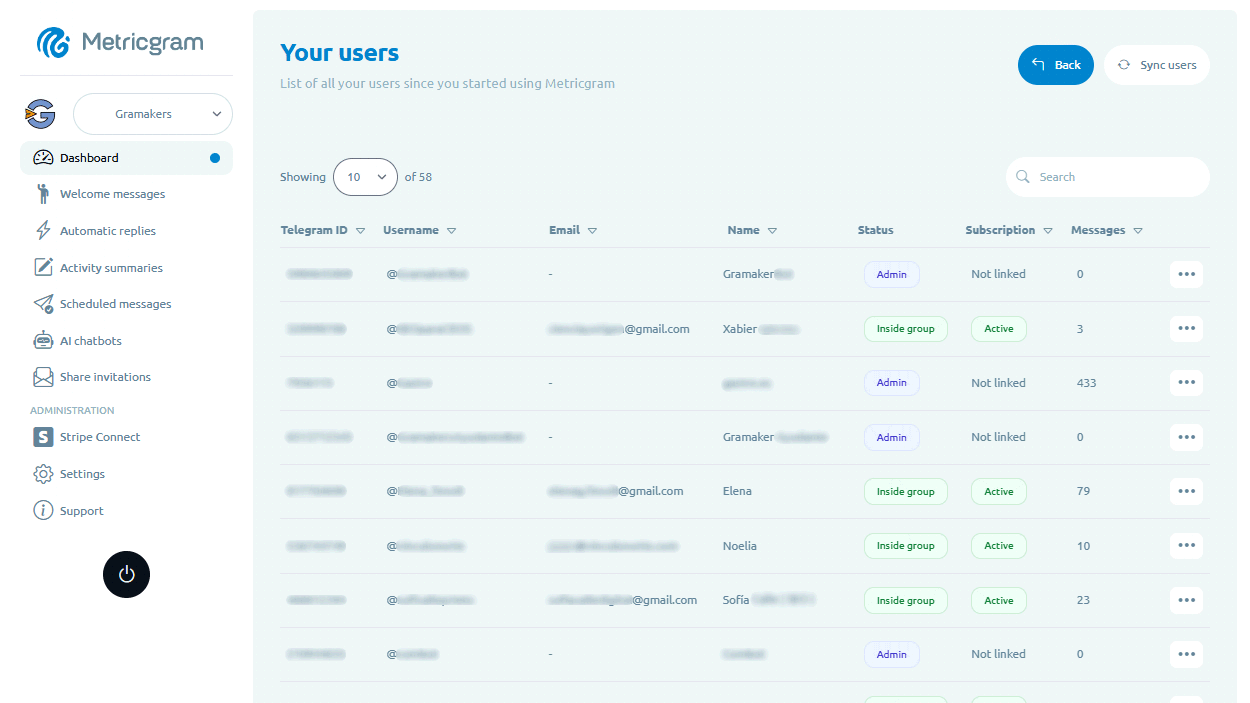

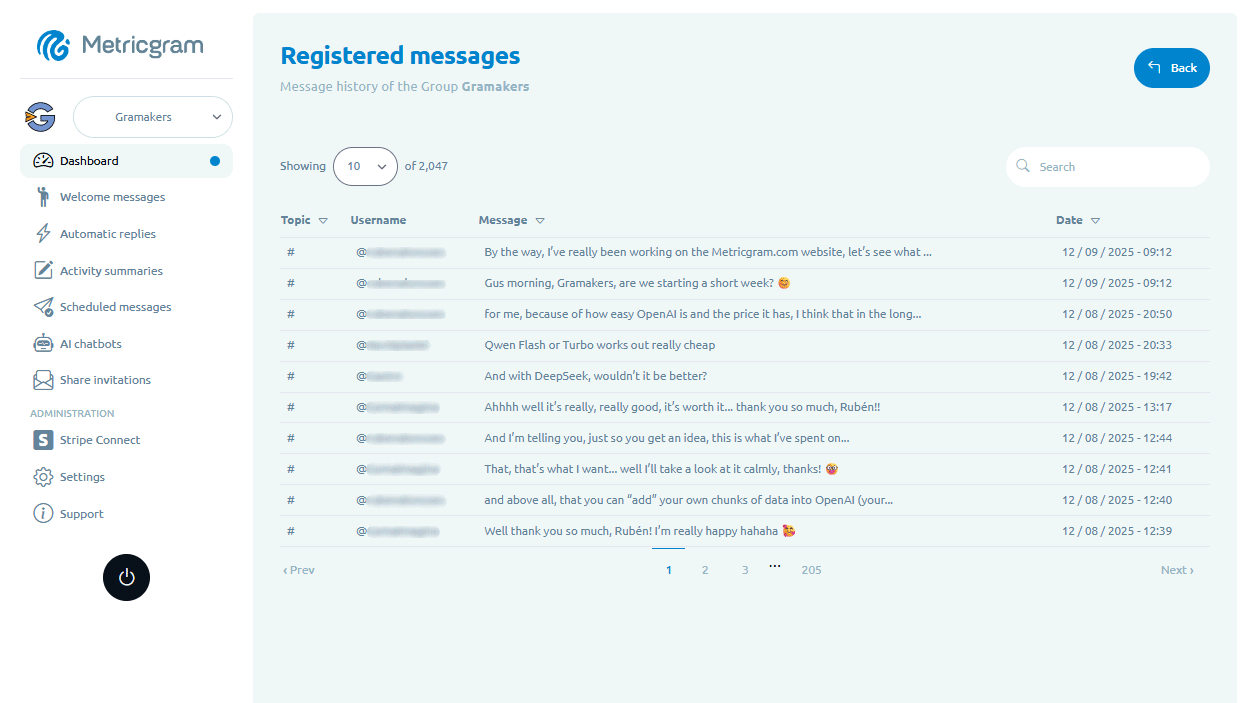

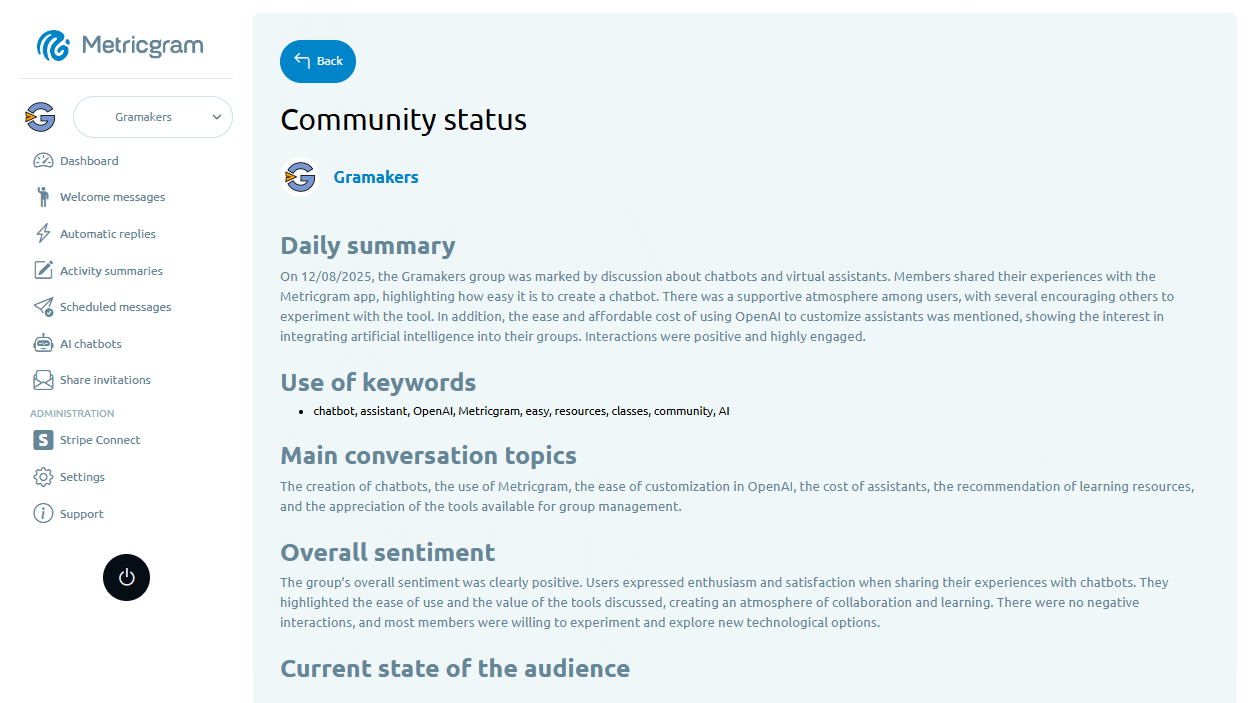

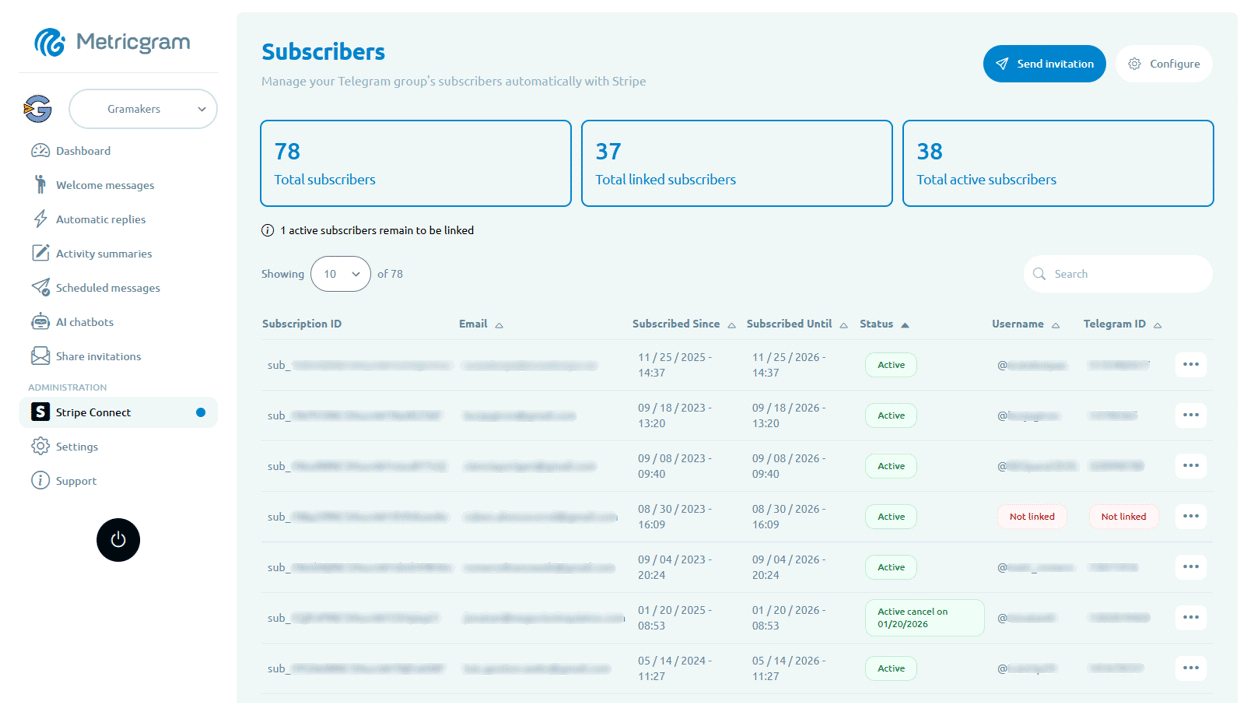

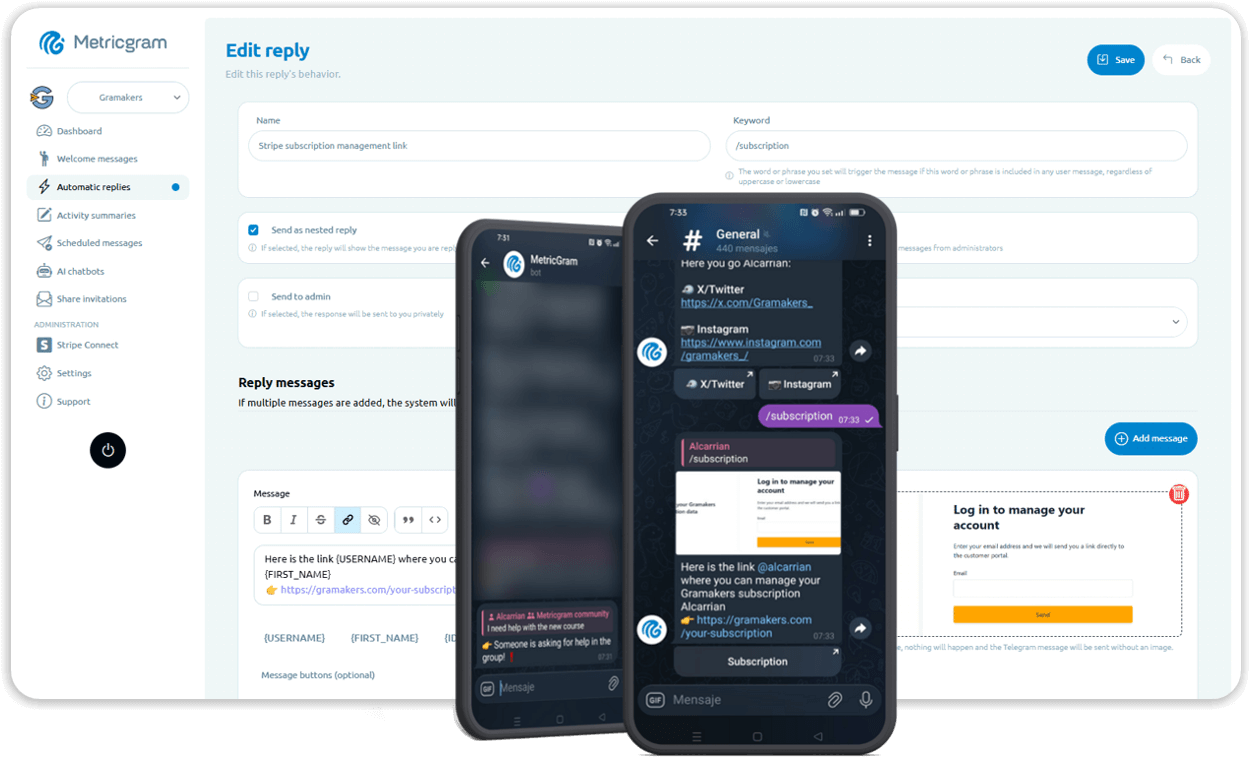

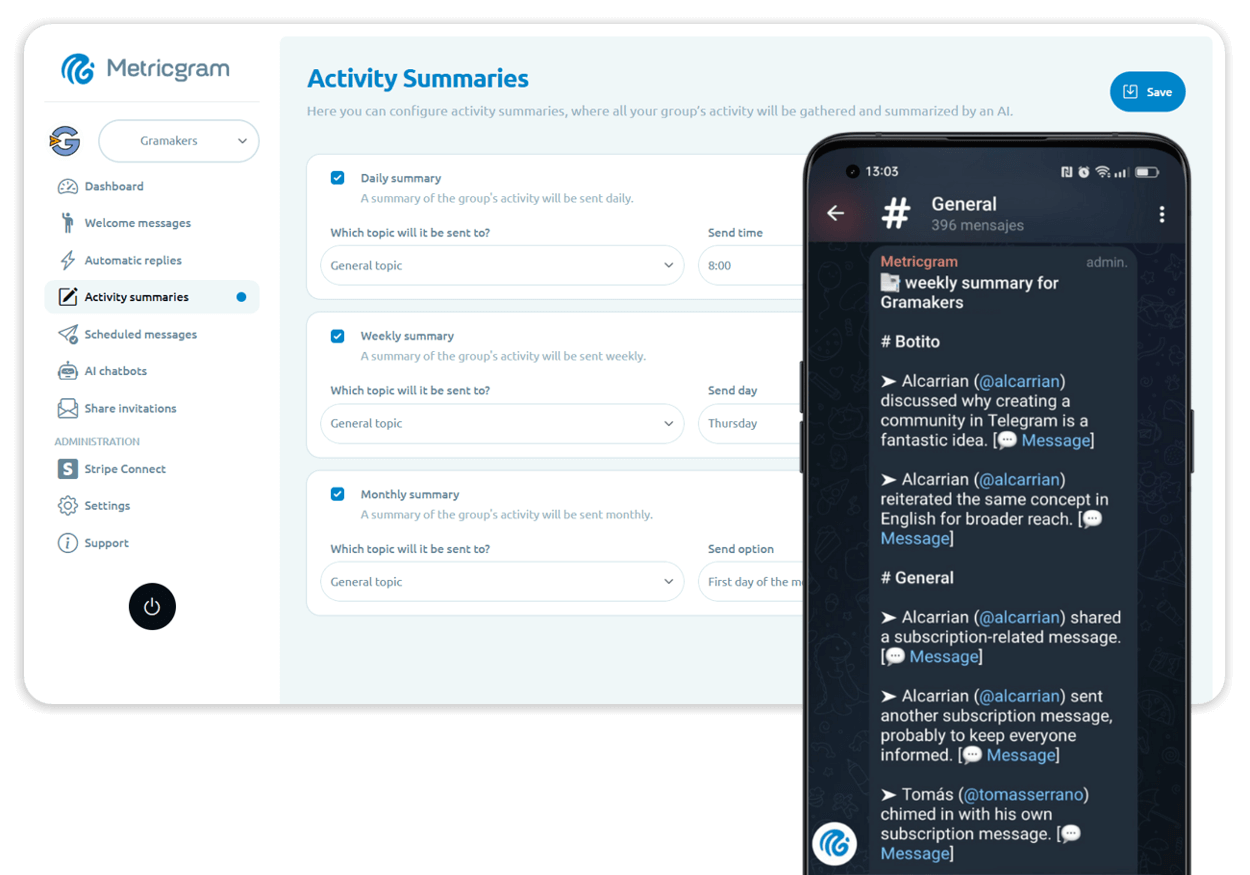

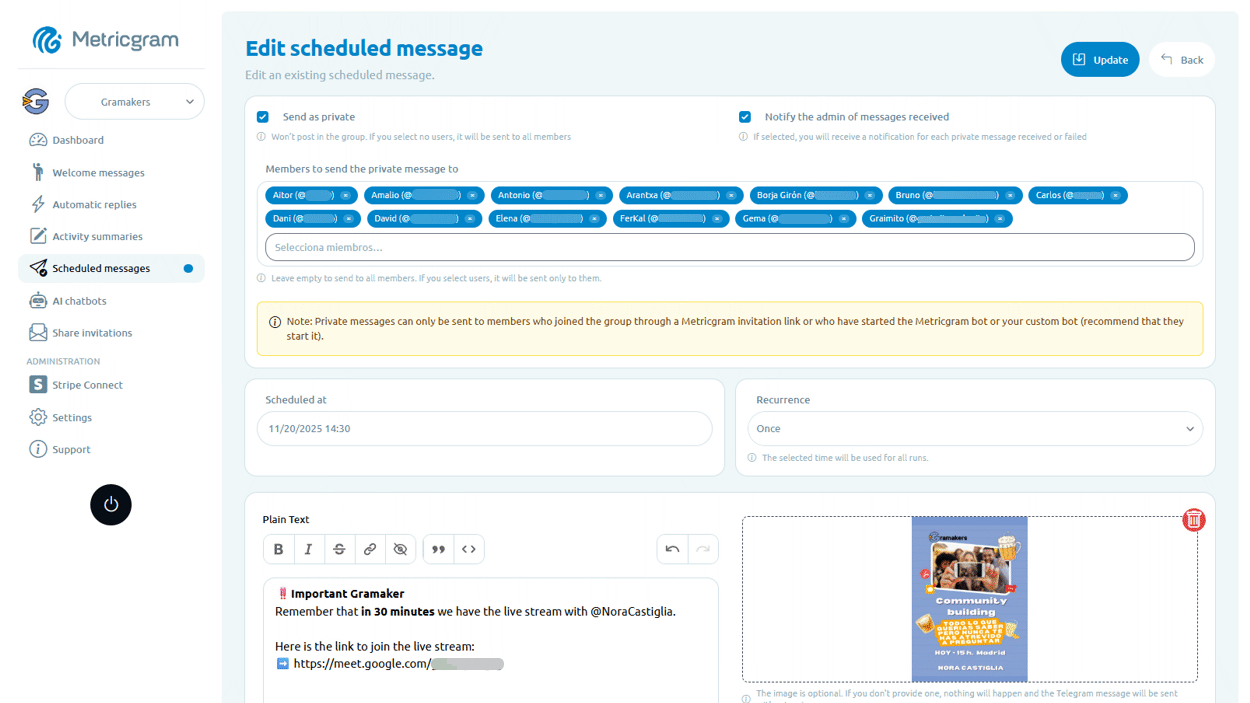

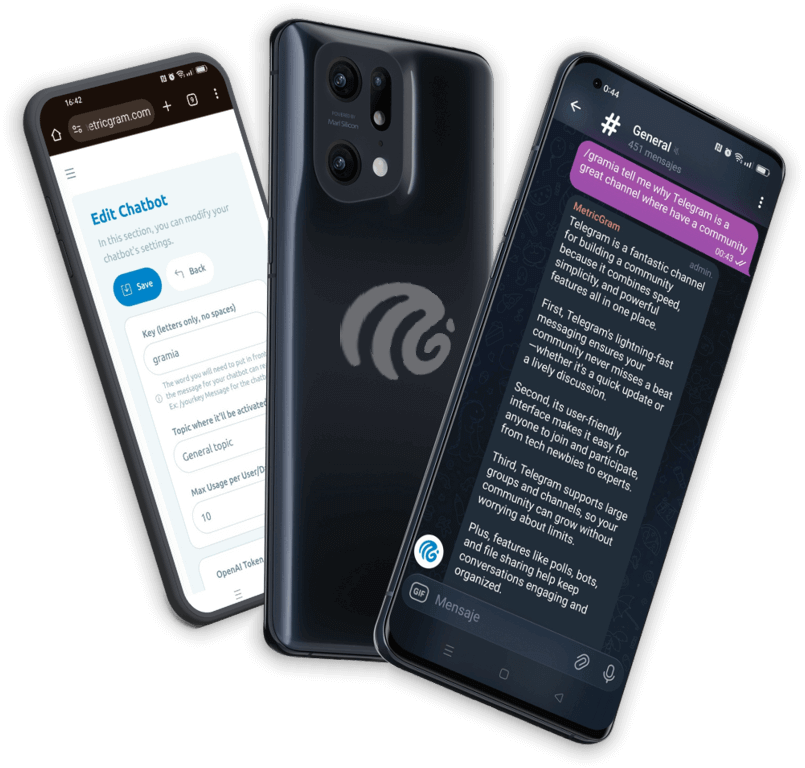

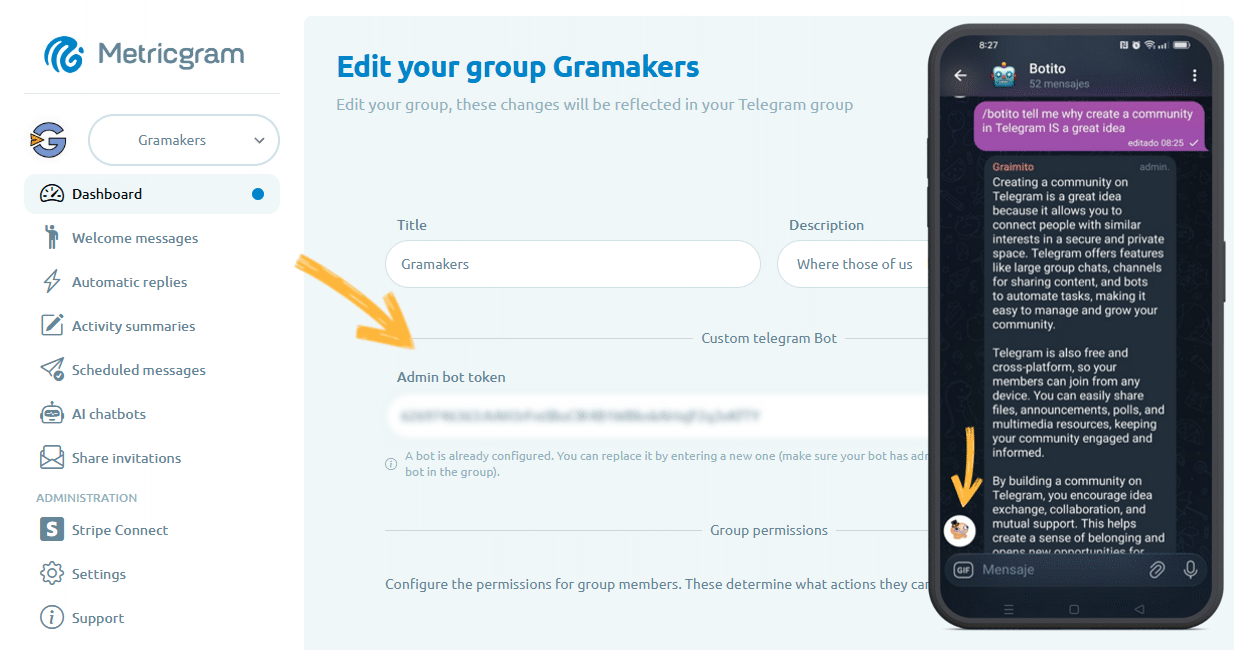

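One-line summary: Metricgram is a Telegram community management platform. With automation tools, analytics, and an AI assistant, it addresses the time admins lose to member admission, content publishing, routine upkeep, and information overload.

Telegram

Messaging

Community

Telegram community management

SaaS tool

Automated operations

Data analytics

AI chatbot

Paid communities

Message scheduling

Community analytics

Efficiency tool

User comment summary: Users broadly agree the product solves real pain points in Telegram community management and praise its integration and practicality. The main question was about customizing the AI assistant; the developer replied that users can connect OpenAI to create a personalized assistant. Some users shared successful experiences in communities of several hundred members.

AI Sharp Take

Metricgram enters a vertical but critical part of the community operations tools market: professional management of Telegram communities. Its real value is not feature stacking but integrating scattered operational tasks (payments, scheduling, analytics, engagement) into a closed-loop workflow, addressing the way admins are reduced from "builders" to "odd-job workers".

The product neatly rides three trends. The first is demand for standardized management of paid communities, where Stripe integration automates the commercial loop. The second is the everyday use of AI assistants, turning OpenAI capabilities into a plug-and-play community helper. The third is the spread of data-driven operations, with automated reports lowering the barrier to analysis. The challenges are just as clear. First, it depends heavily on the Telegram ecosystem, so platform policy risk cannot be ignored. Second, its features overlap with Discord bots and dedicated analytics tools, so it must keep proving the advantage of integration. Finally, the leap from "tool" to "platform" requires a more open API and ecosystem building.

The current cold-start figure of 29 votes shows that market validation is still early. The key to success is upgrading from an "efficiency tool" to a "growth engine", for example by deepening member behavior analysis, connecting more payment gateways, and offering cross-platform insights. If it stops at replacing manual work with automation, its barrier is easy to copy. Community management is at heart about fostering connection and value exchange, and a tool's value should ultimately show in higher community activity and better business results, not just in time saved for admins.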

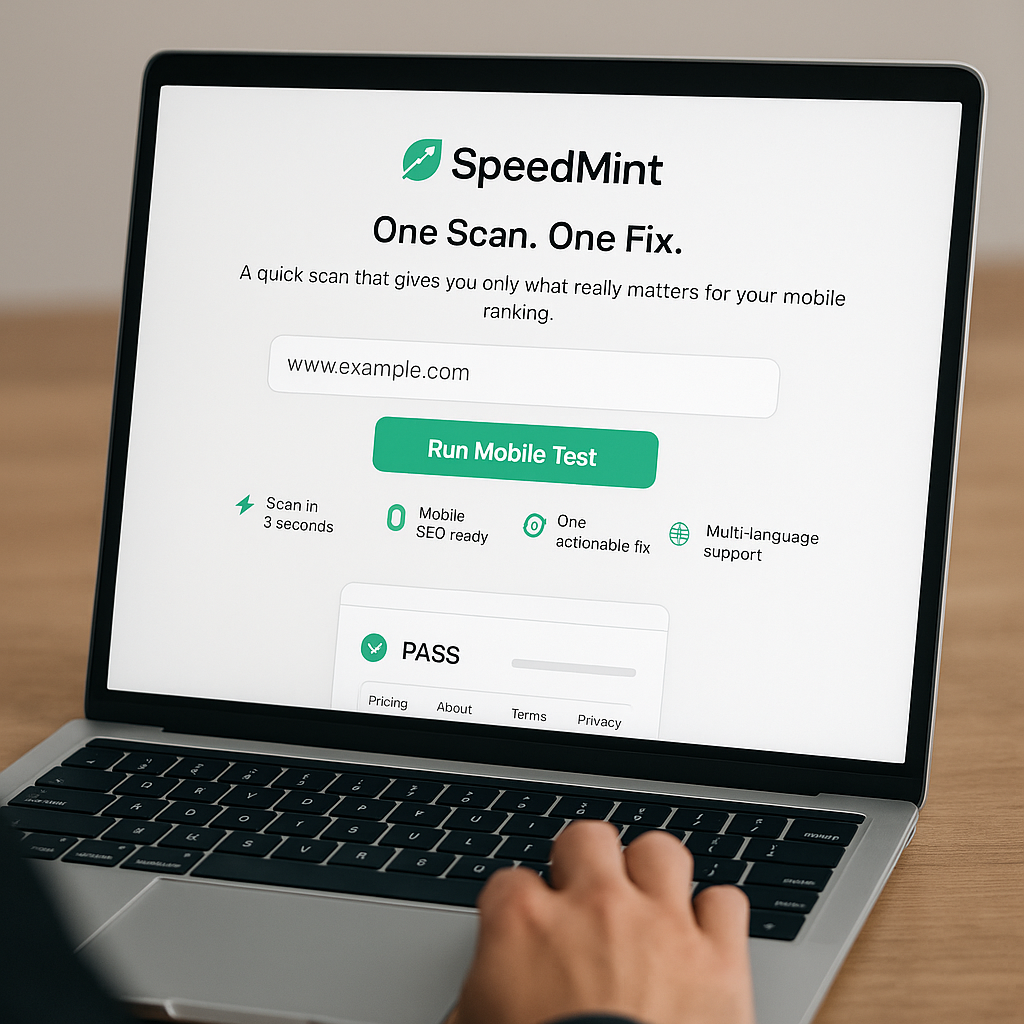

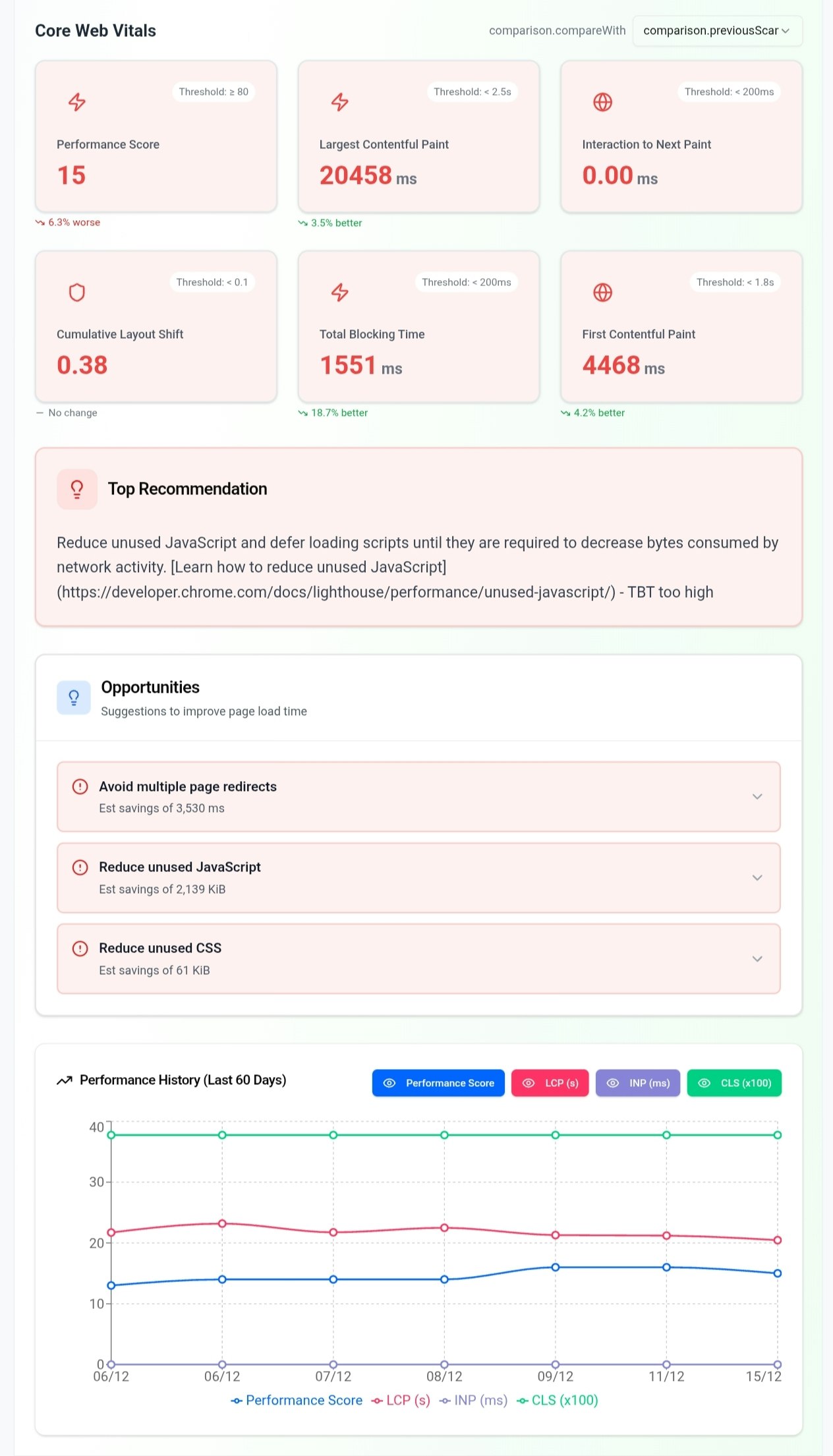

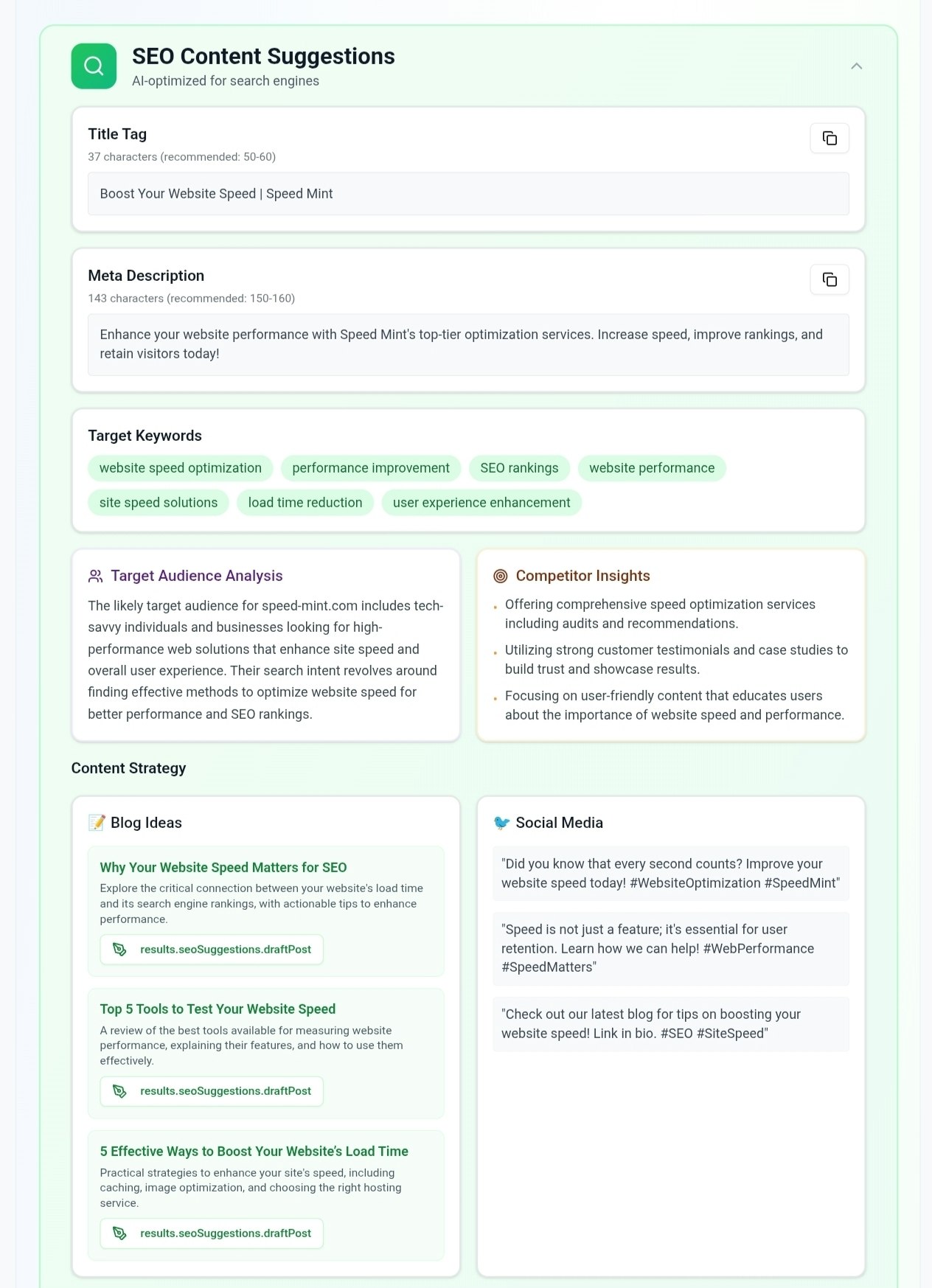

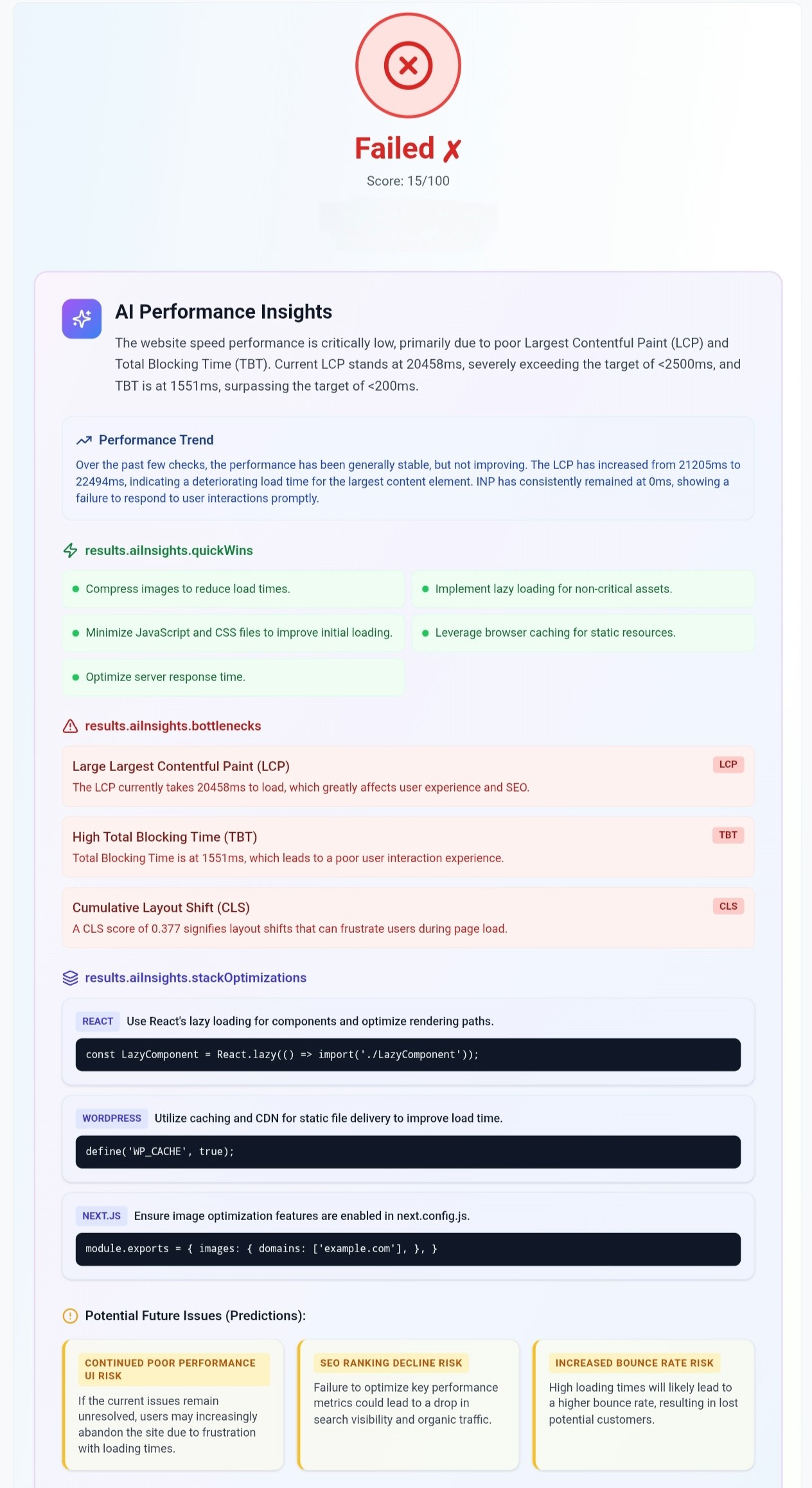

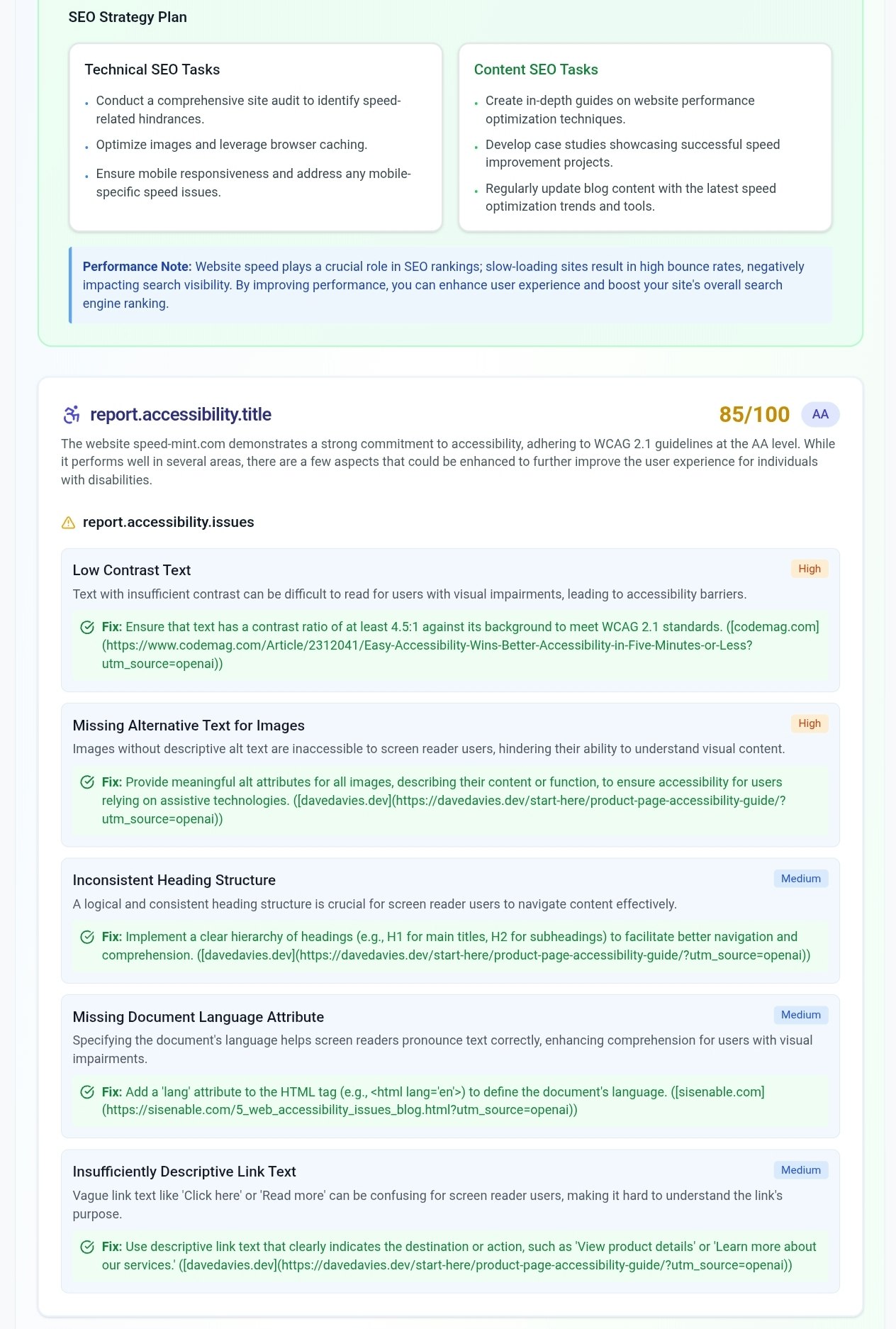

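One-line summary: By offering a single, high-impact fix recommendation, SpeedMint addresses the pain point of site owners who do not know where to start with complex technical performance reports, aiming to quickly improve mobile speed and SEO ranking.

SEO

SaaS

Developer Tools

Website performance optimization

SEO tool

Mobile optimization

One-click fix

Performance testing

Webmaster tool

Efficiency tool

Google ranking

Page speed

Technical simplification

User comment summary: The founder explains that the product grew out of frustration with Google PageSpeed Insights reports being too technical and confusing. So far there is only one user comment, saying the tool instantly produces a clear, professional report, which offers initial validation of the core value. No specific questions or suggestions have been raised yet.

AI Sharp Take

SpeedMint's core idea is "subtraction" and "focus", a precise point of entry in a performance optimization field awash in complex data. It does not try to be another comprehensive monitoring platform but plays the role of a "chief technology officer" who sets priorities for the user. Its real value lies not in the breadth of problems it finds but in the depth of its decision: turning complex performance metrics into the single most effective action to take right now.

However, its business model and long-term value are questionable. The "single fix" is a double-edged sword. For light users or small sites it offers a very low barrier to starting and an instant sense of accomplishment, a true "painkiller". But for sites of any scale, what comes after the one key fix? The product may face a retention problem. At bottom it solves "cognitive load" and "resistance to getting started", not the need for ongoing performance management. In addition, pinning hopes of a higher Google ranking on one "high-impact fix" oversimplifies SEO and risks overpromising.

In terms of market positioning, the line "Perfect for makers and site owners who want results, not headaches" is well crafted and speaks directly to the anxiety of non-technical users. But to grow from a tool into a sustainable business, it must build a path from a "single fix" to a "fix sequence" or "continuous optimization", or users will readily abandon it after the first use. The current vote count of 29 also reflects limited market heat. The product needs to prove more clearly that its recommendations are distinctive and irreplaceable, not merely a simplified version of existing reporting tools.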

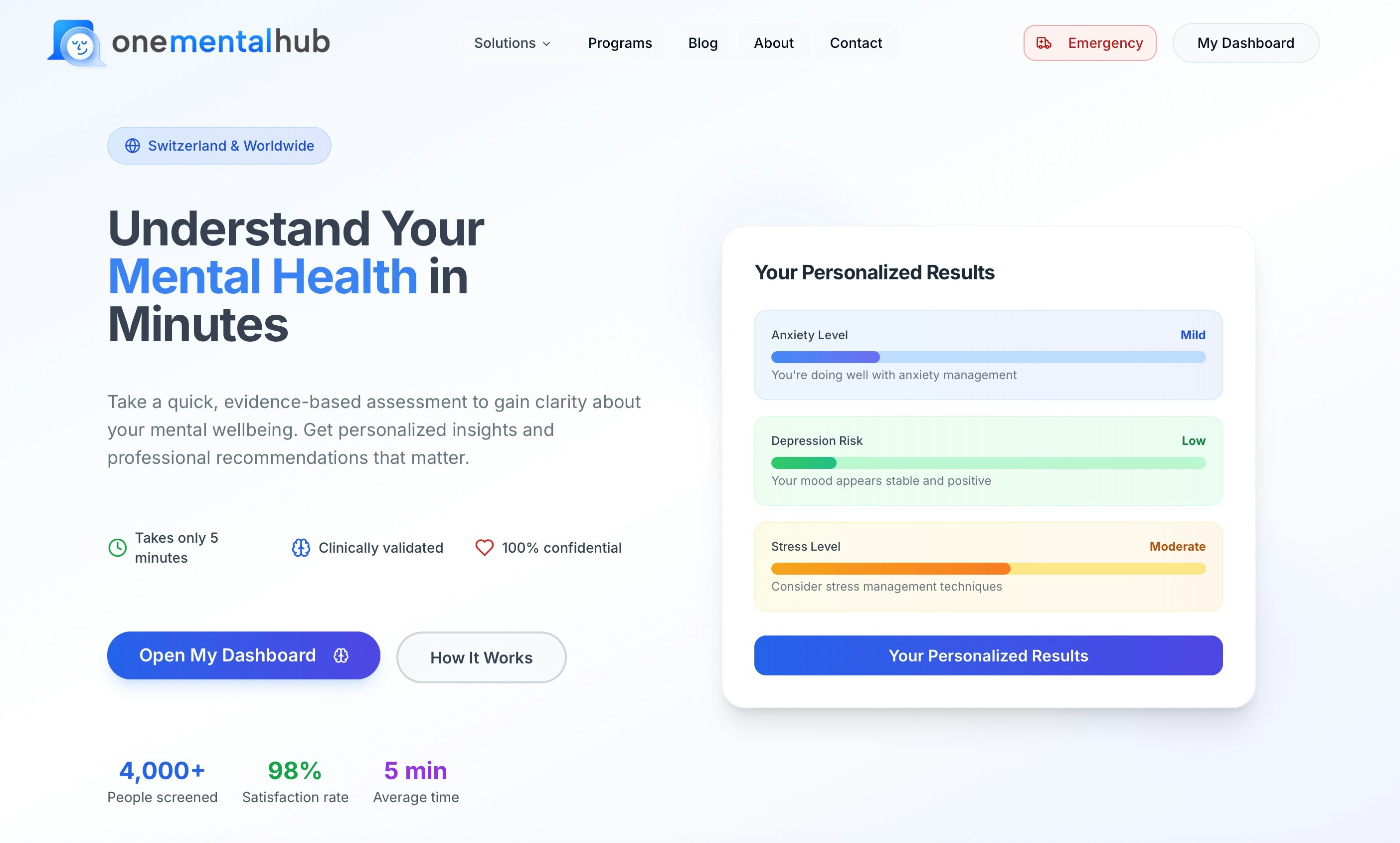

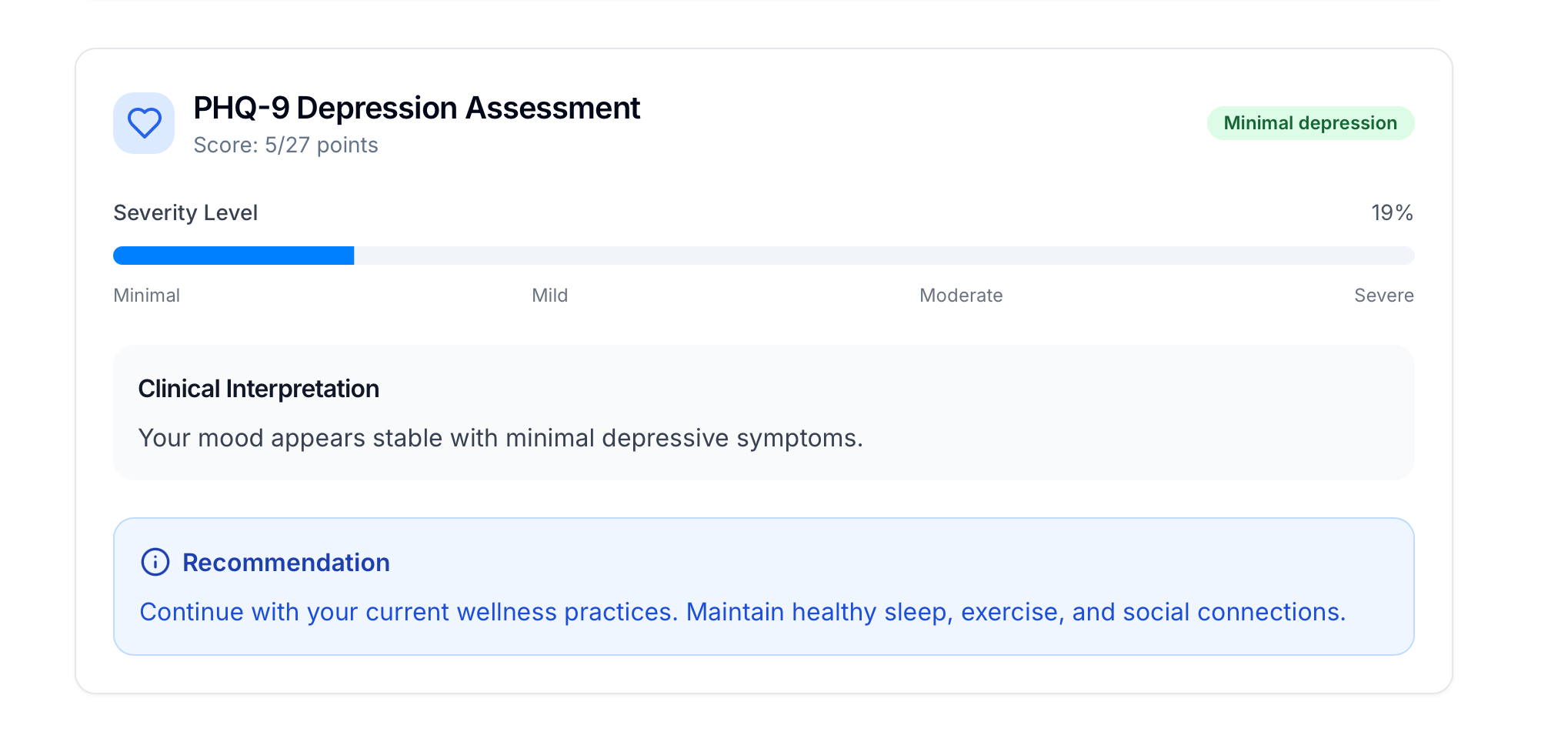

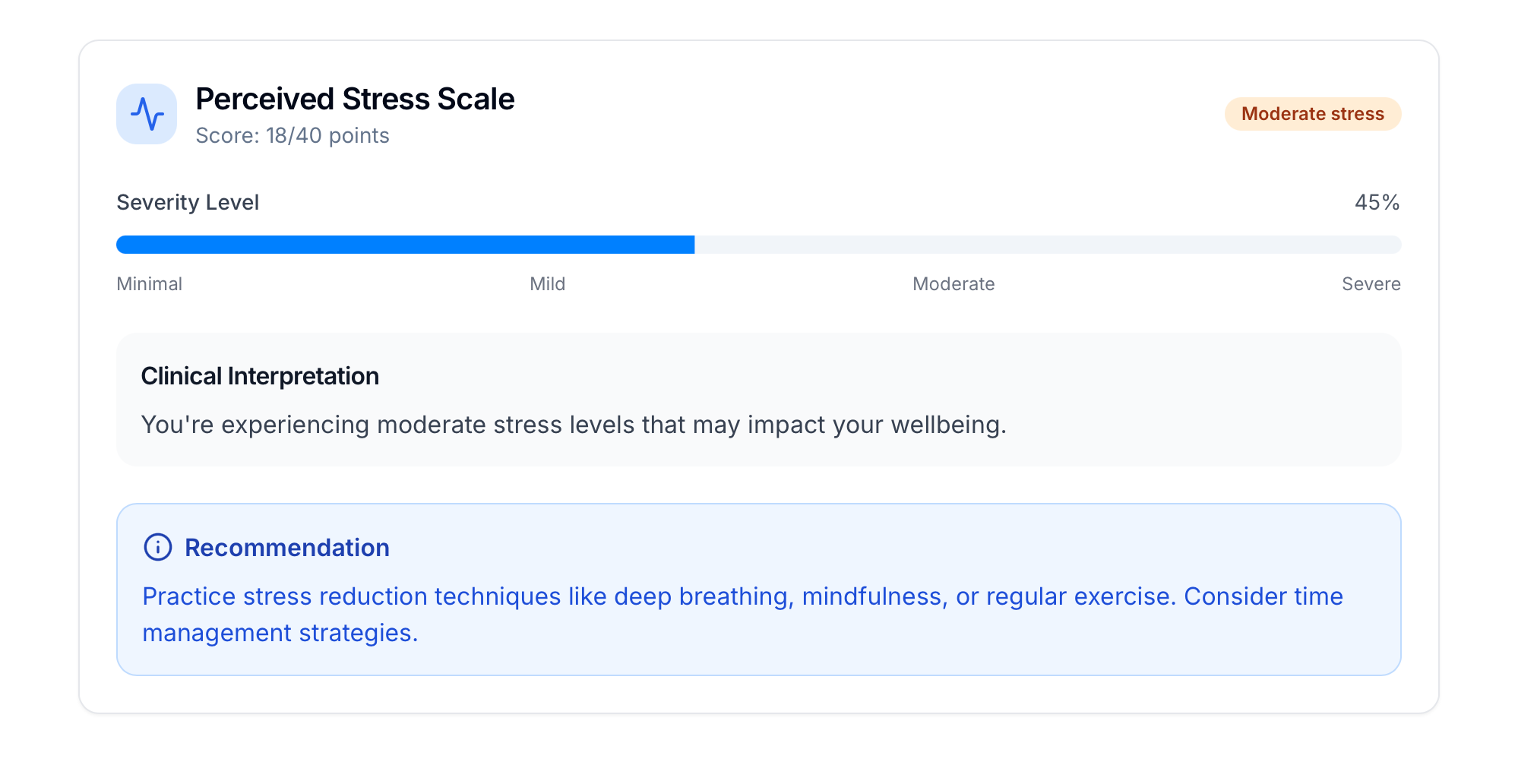

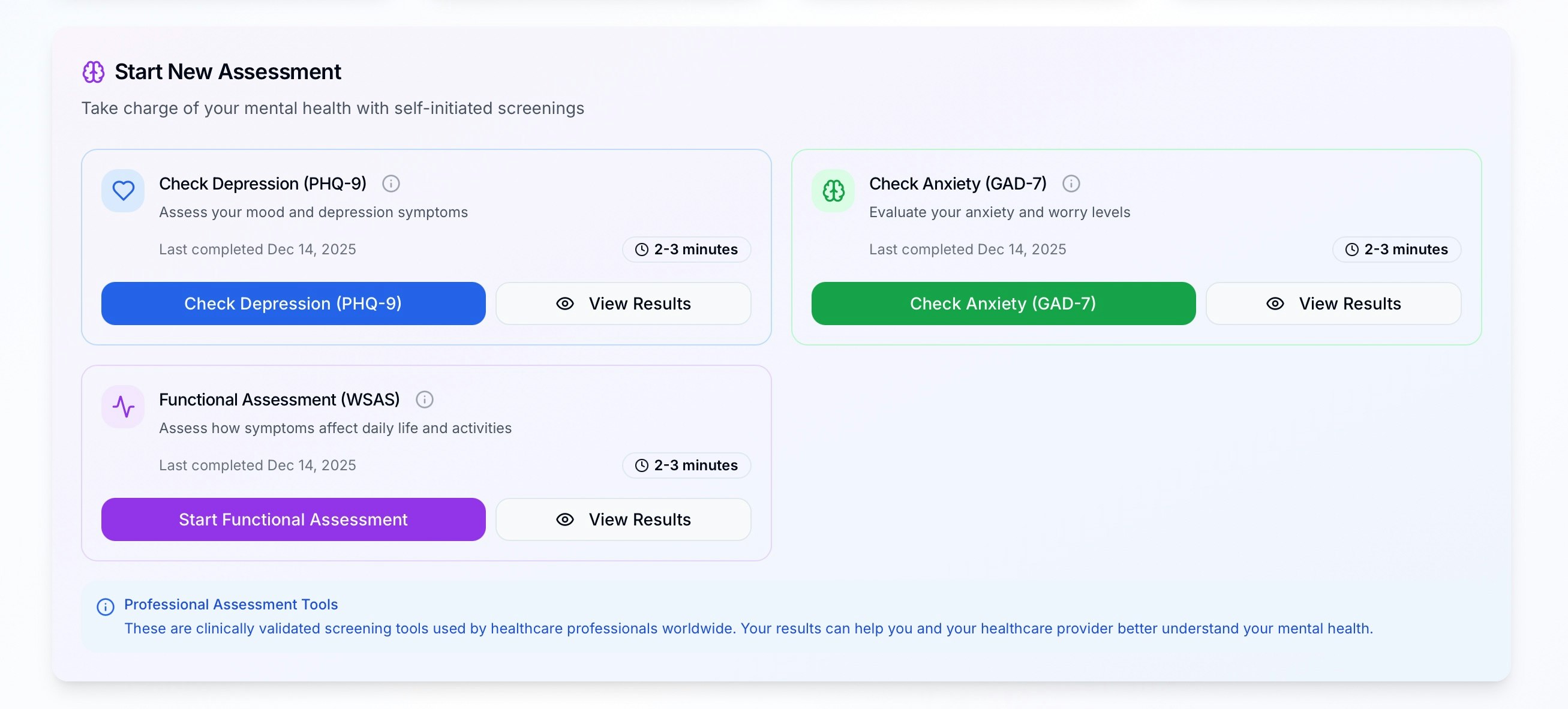

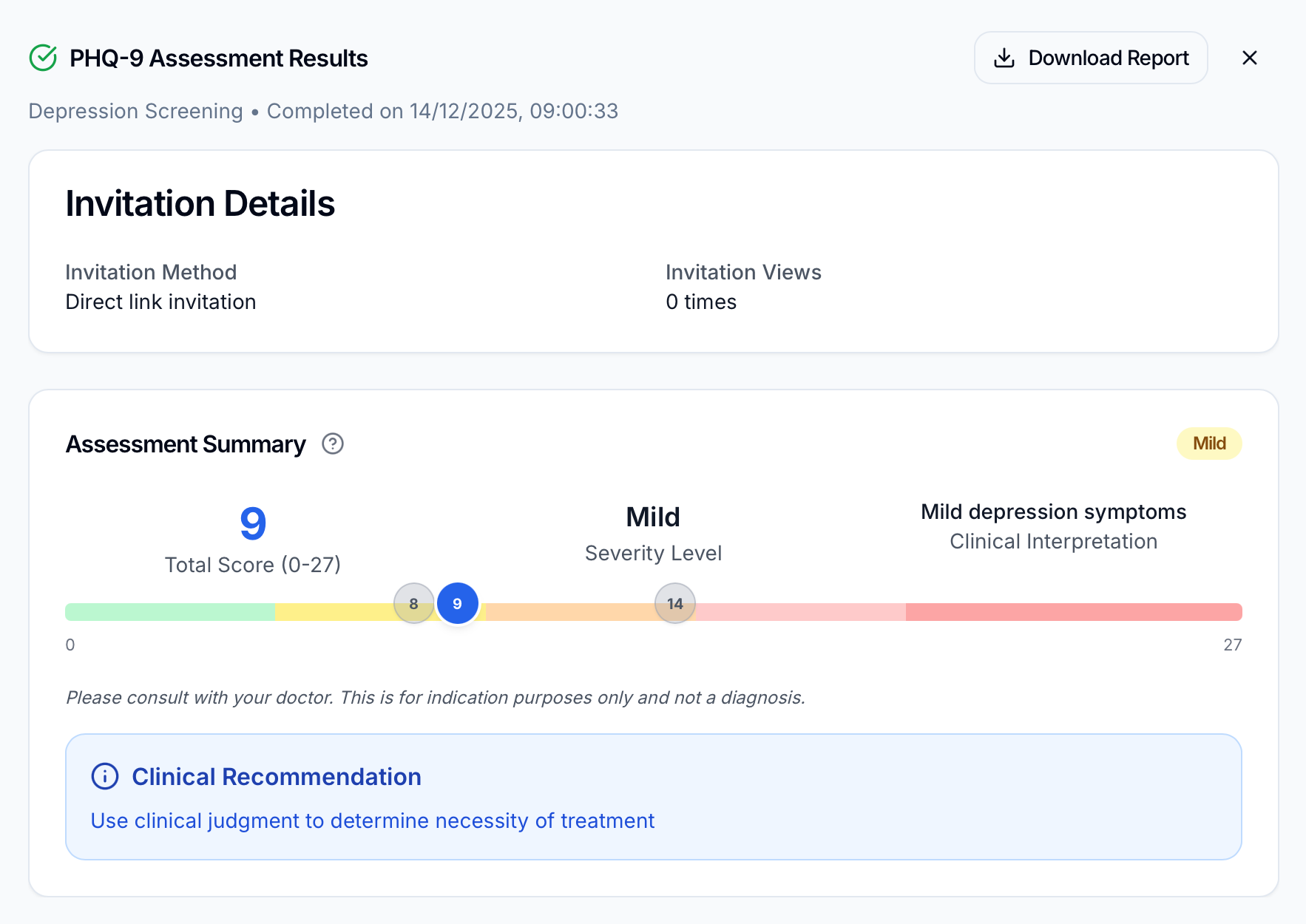

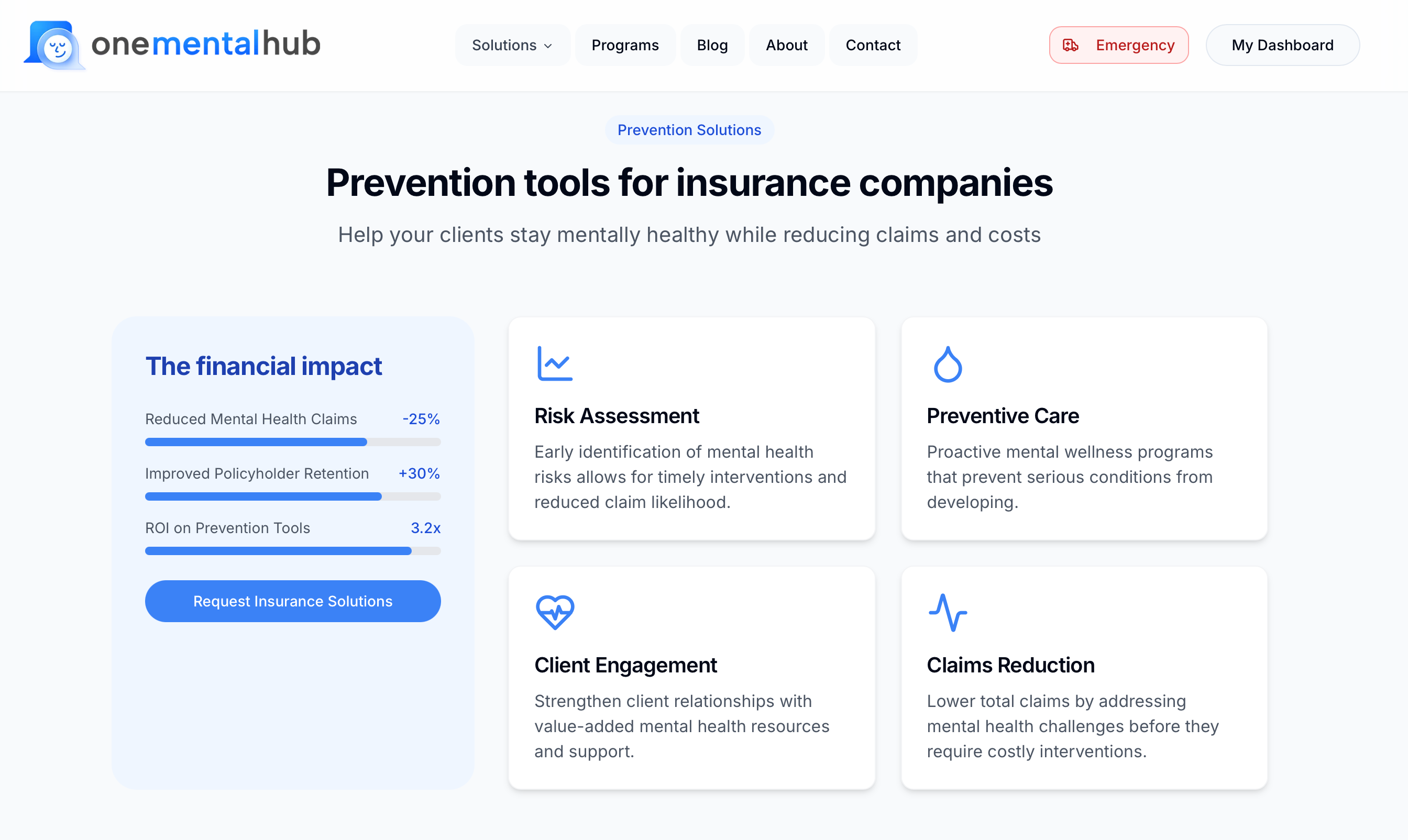

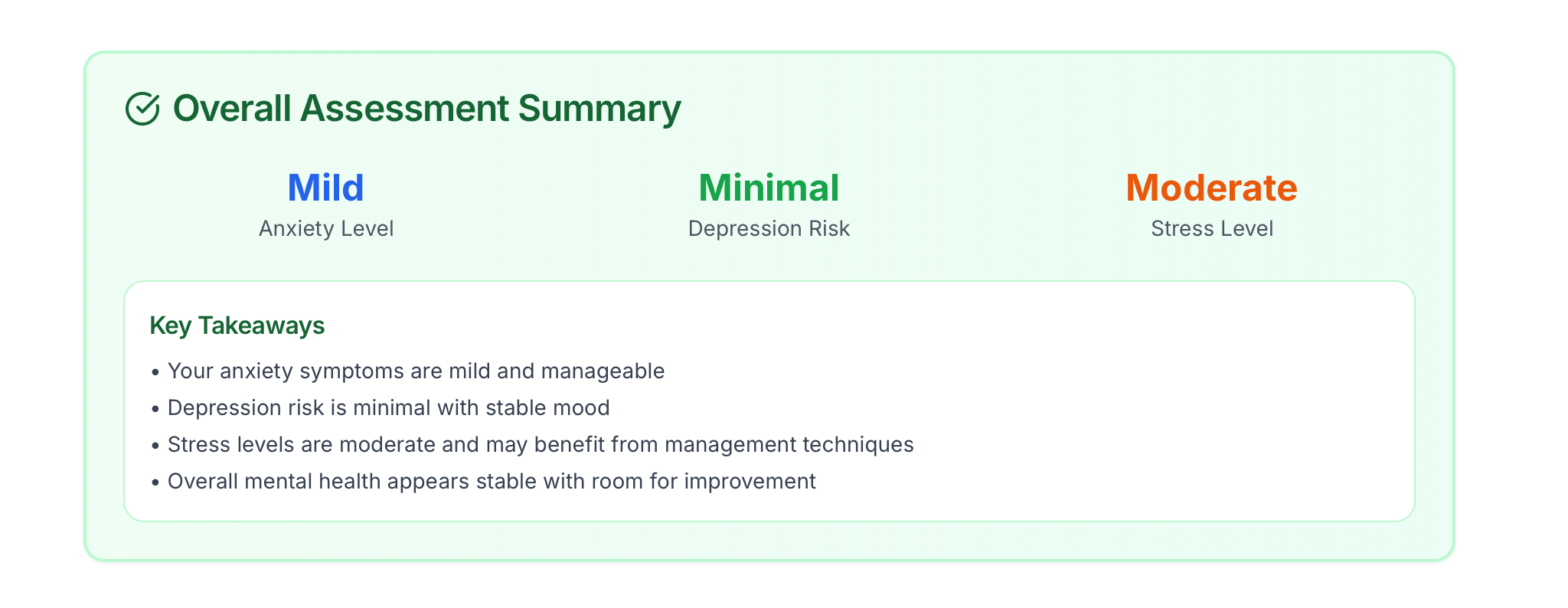

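One-line summary: One Mental Hub offers quick, professional mental health screening to lower the barrier to mental health care, serving as a convenient, private entry-level tool for users doing an initial self-check or seeking support.

Health & Fitness

Medical

Health

Mental health

Self-screening

Digital health

Health tech

Preventive care

Psychological assessment

Mobile health

Health management

User comment summary: The existing comments are all congratulatory and lack specific feedback on features, user experience, or results. There are zero substantive comments, so no user questions or improvement suggestions could be gathered.

AI Sharp Take

One Mental Hub enters the grand and difficult arena of "accessible mental health care". There is a wide gulf between its stated vision (removing barriers, providing end-to-end support) and the current product form (quick screening). The product description is idealistic, but the tagline about understanding your mental health "in minutes" exposes an industry trap it may fall into: reducing complex mental health conditions to a quick digital questionnaire. This lowers the barrier to first contact but can easily lead to misreadings or heighten user anxiety unless rigorous medical models and clear guidance for interpreting results stand behind it.

The data so far show the product is at a very early stage. The handful of polite, inconsequential social comments suggests it has not yet reached a core circle of real users, or that the product lacks differentiated value that would spark deeper discussion. The real challenge is how, as an entry-point tool, it builds credibility. After screening, does it refer users to in-person professional services or offer light-touch interventions? Where is the boundary between its business model and its professional responsibility? If it cannot answer these questions, it may be just a polished mobile version of yet another "psych quiz" web page in a sea of information, rather than a "Hub" that truly changes care pathways.

Its real value may lie not in screening itself but in completing a user's "first digital mental health record" at very low friction, on which it could later build a platform connecting assessment, content, community, and professional services. But that requires deep ability to integrate medical resources and long-term operational patience, not something a lightweight app can easily carry. In digital mental health, goodwill and vision are the starting point, but clinical rigor, data privacy, and an effective service loop are the foundation for survival and growth.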

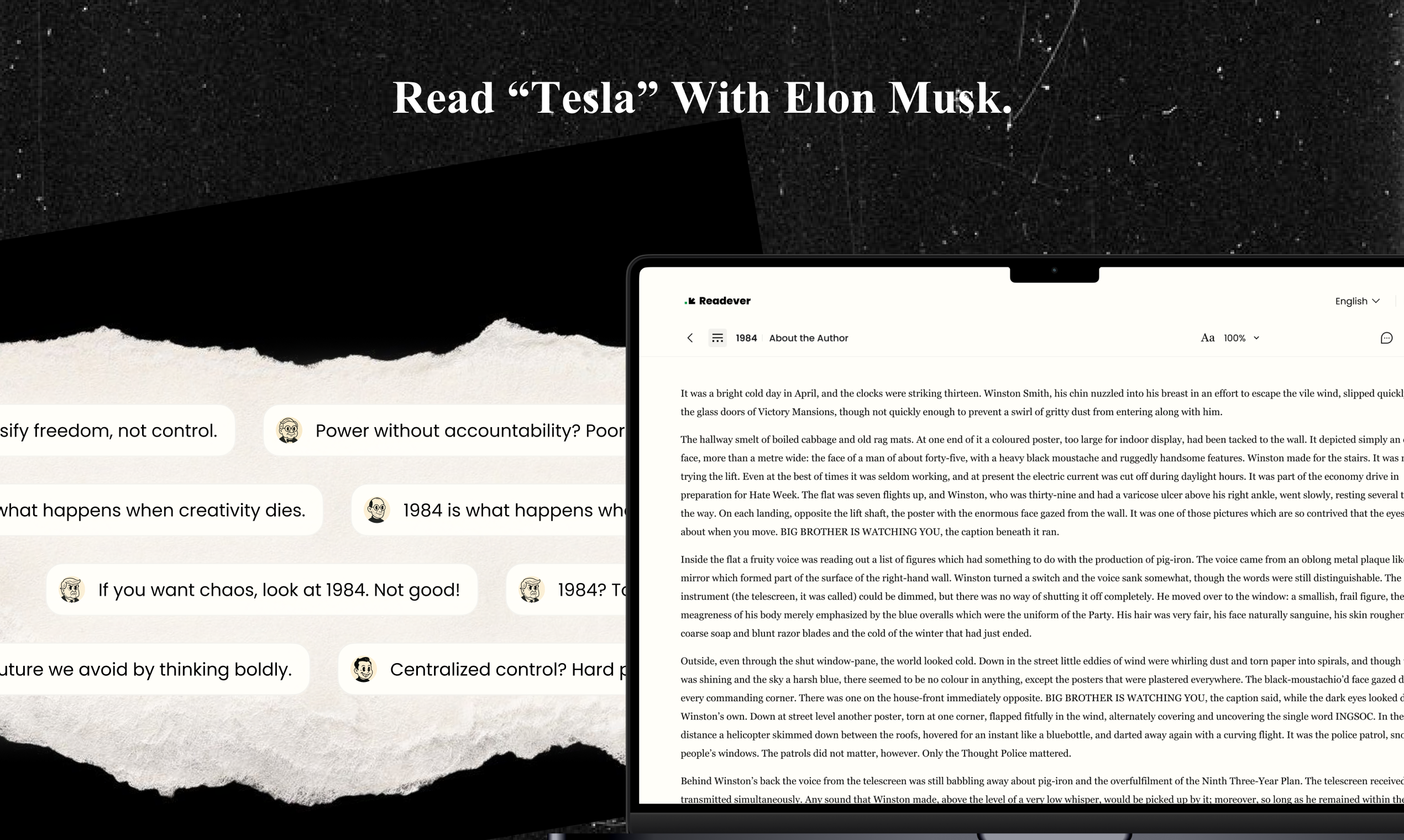

Hi PH! 👋 Makers here, launching Readever today. We built Readever because “AI for reading” often means summaries after the fact, but the real pain is getting stuck while reading. Readever helps you read inside the text:

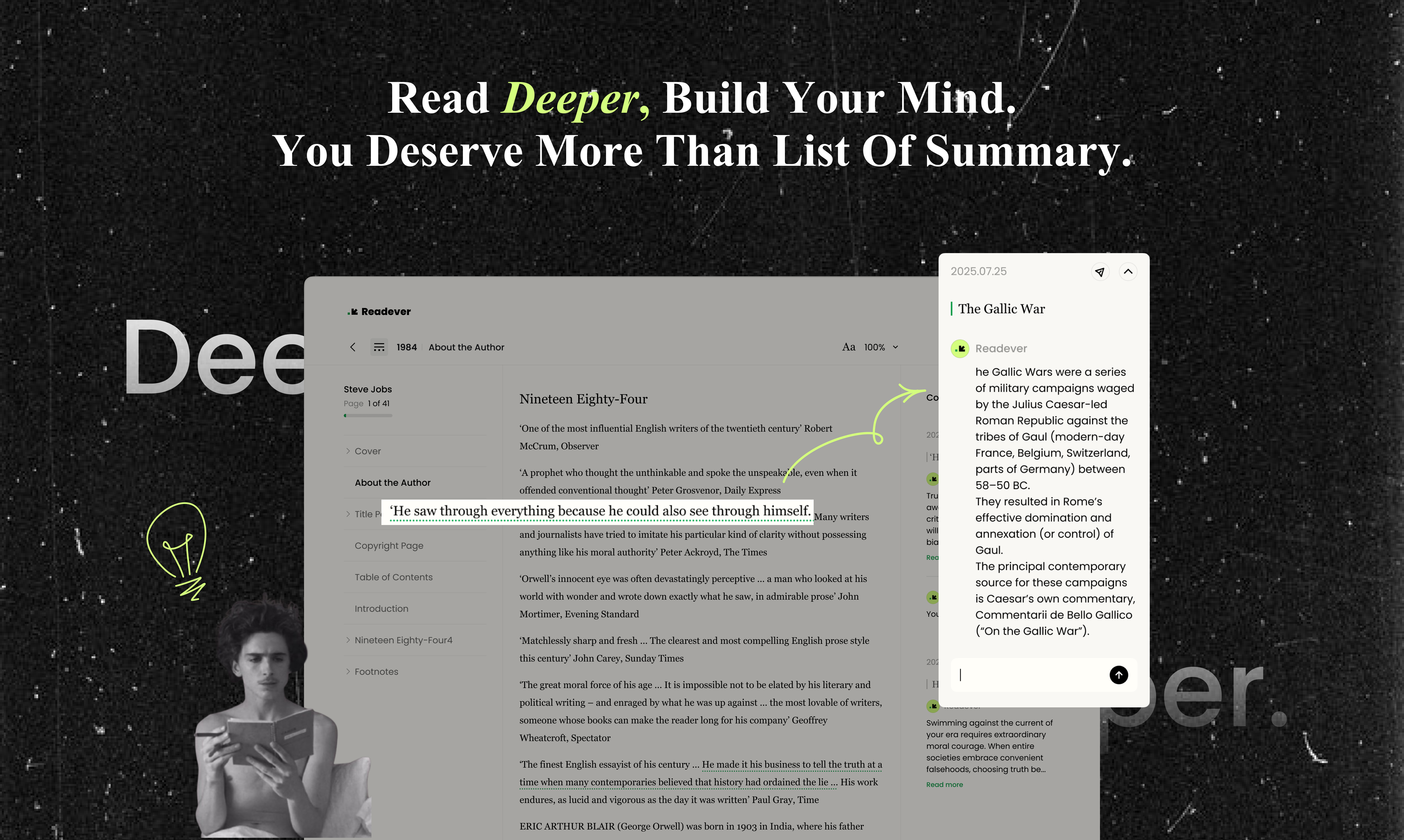

1. In-Context Q&A: Highlight any sentence while reading and ask questions where you’re stuck without leaving the page.

2. Proactive Reading Guidance: It adapts to your goals and level, proactively showing Highlight Cards so you get help even when you don’t know what to ask.

3. 5,000+ AI Reading Mentors: Read with thinkers, founders, writers, and historians. Ask them questions or let mentors debate to reveal different perspectives.

4. Built-in Translation: Read across languages without breaking your flow.

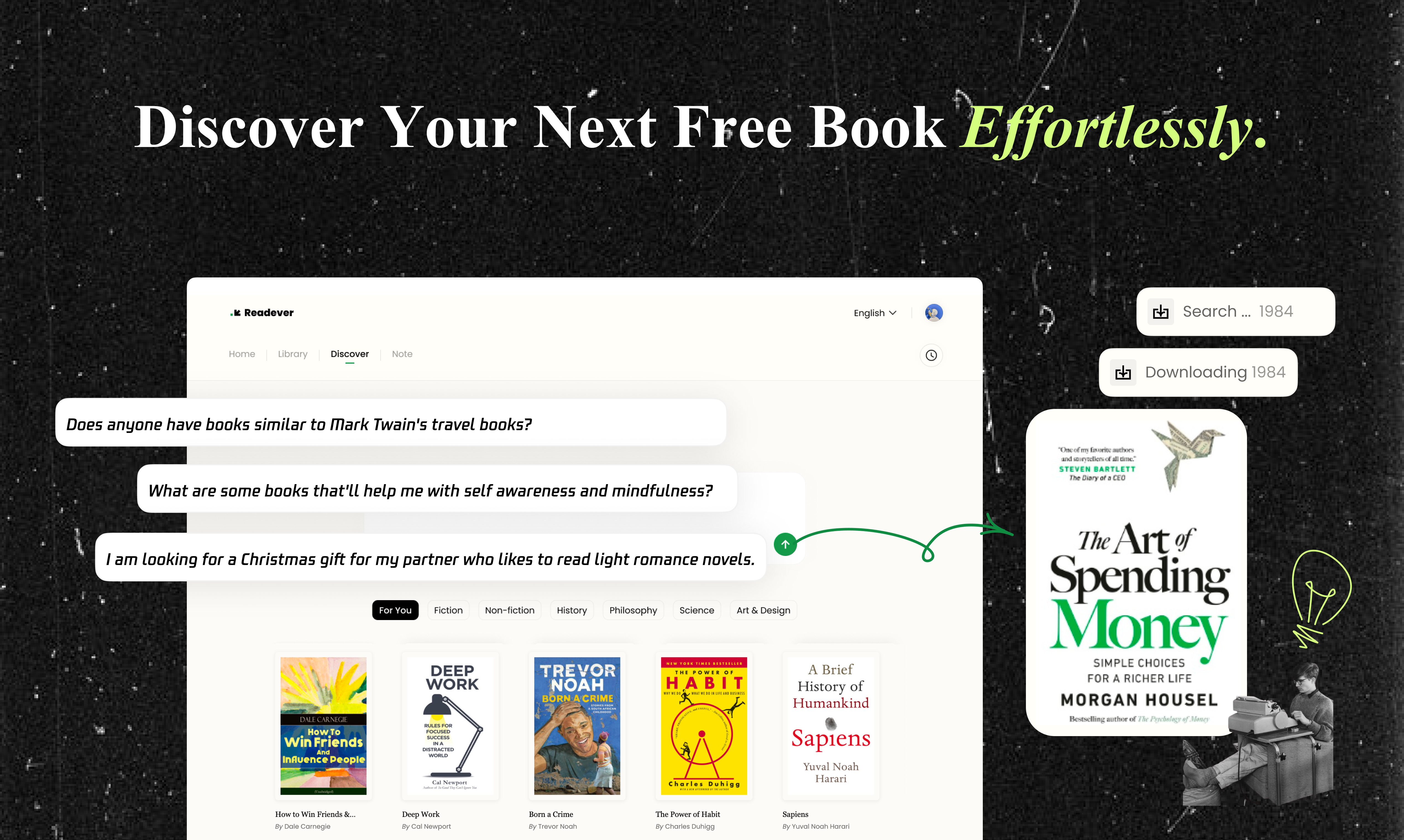

5. AI Book-Finding Agent: Describe what you want in natural language and get book recommendations tailored to your taste and intent. Readever is your next Knowledge Curator.

6. Memory system: Readever keeps you inside the text with fewer interruptions and faster comprehension, from the first page to the next book.

Readever isn’t for passive reading, it’s for understanding.

And yes, it’s totally free on the web!!!

The idea seems quite new and creative to me. I'd really like to try it and see how it works. Thanks for your work!

Is it possible to use an abstraction of a famous person's voice? How does that work if there's a monetisation aspect to it?

Great idea and a great product! Is there an option to read the book with the book's author?

I liked the concept, but the UX needs a bit more work. It's very hard to understand what's going on and the use of already made comments on books.

I like the focus on visibility and control. If something is running on my Mac, I want to know about it, no exceptions.

Cool. The AI highlighted some patterns I hadn’t noticed. How can I customize or choose my own character?

Cool! Amazing for reading!

Congrats! Can users upload their own books, and does personalization still work with imported titles?

How does Readever personalize the reading journey for each user, especially readers with different goals?

I used it last night and genuinely felt accompanied while reading. A surprisingly warm experience.

"The 'dinner party' concept is such a cool hook. 🤯 I'm really curious to see how the AI mentors debate each other—does the AI actually mimic their specific rhetorical styles? Definitely trying this out. It makes reading feel less lonely."

What role do AI companions play during reading? Are they more like mentors, analysts, or reading buddies?

How large is the book catalog, and how does the public library integration function for users?

Congratulations on the launch of Readever! Honestly, your product positioning really caught my eye—it’s a rare and genuinely innovative product. I’ve already thought of many fun and interesting ways to use it, like having Jobs read his own biography with me and then asking him how he felt at different moments in his life. Keep it up!

I used it for fiction and loved how the AI companion picked up emotional shifts I totally missed.

How do you ensure accuracy when the AI comments on complex topics like economics, philosophy, or science?

Would Readever be useful for university students, researchers, or people writing a thesis?

From a product and AI perspective, how do you balance maintaining a consistent ‘persona voice’ while still adapting insights to different reading styles and genres?

Congrats on the launch! I'm curious about the workflow after reading: do you currently support exporting highlights or book notes to Notion or syncing with Readwise?

The memory system remembering my earlier confusion was surprisingly helpful.

How is Readever fundamentally different from NotebookLM, Readwise, or other reading/summarizer tools?

Really nice work, this looks clean and well done.

Just curious, have you ever worked with social media influencers to help show people how the software works and get more eyes on it?

Bro your launch video is something 😅😅

How does the system decide which concepts need explanation and which don’t?

Uploading my own book worked smoothly, and the annotations still felt tailored.

Short book summaries with narrated voices of famous personas could be a great addition.

How do you prevent the AI from overwhelming readers with too many notes or interruptions?